DeepSeek-R1 is an open model with state-of-the-art reasoning capabilities. Instead of returning a direct response, reasoning models such as DeepSeek-R1 perform multiple inference passes over a query, applying chain-of-thought, consensus, and search methods to generate the best answer.

Performing this series of inference passes, using reasoning to arrive at the best answer, is known as test-time scaling. DeepSeek-R1 is a perfect example of this form of scaling, and it demonstrates why accelerated computing is critical for agentic AI inference.

Because the model is allowed to iteratively “think” through a problem, it produces more output tokens and longer generation cycles, and model quality continues to scale as it does so. Significant test-time compute is critical to enable both real-time inference and high-quality responses from reasoning models such as DeepSeek-R1.
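The consensus idea behind test-time scaling can be illustrated with a small sketch. The Python below is a toy, not DeepSeek-R1's actual procedure: `sample_answer` is a hypothetical stand-in for one full reasoning pass, simulated with a seeded random number generator, and the 60% per-pass accuracy is an assumed figure. It shows only that voting across more passes spends more compute in exchange for a more reliable final answer.

```python
import random
from collections import Counter


def sample_answer(rng, correct="42", p_correct=0.6):
    """Hypothetical stand-in for one reasoning pass of a model.

    Returns the correct answer with probability p_correct (an assumed
    figure), otherwise one of a few wrong answers.
    """
    if rng.random() < p_correct:
        return correct
    return rng.choice(["41", "43", "44"])


def majority_vote(answers):
    """Consensus step: return the most common answer among the passes."""
    return Counter(answers).most_common(1)[0][0]


def answer_with_passes(num_passes, rng):
    """Run num_passes independent passes and vote on the result."""
    return majority_vote([sample_answer(rng) for _ in range(num_passes)])


def accuracy(num_passes, trials=2000, seed=0):
    """Estimate how often the voted answer is correct over many trials."""
    rng = random.Random(seed)
    hits = sum(answer_with_passes(num_passes, rng) == "42" for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for n in (1, 5, 25):
        print(f"{n:>2} passes per query -> estimated accuracy {accuracy(n):.2f}")
```

Each extra pass costs a full generation, which is why this kind of quality gain translates directly into demand for inference compute.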

R1 delivers leading accuracy on tasks that require logical inference, reasoning, mathematics, coding, and language understanding, while also delivering high inference efficiency.

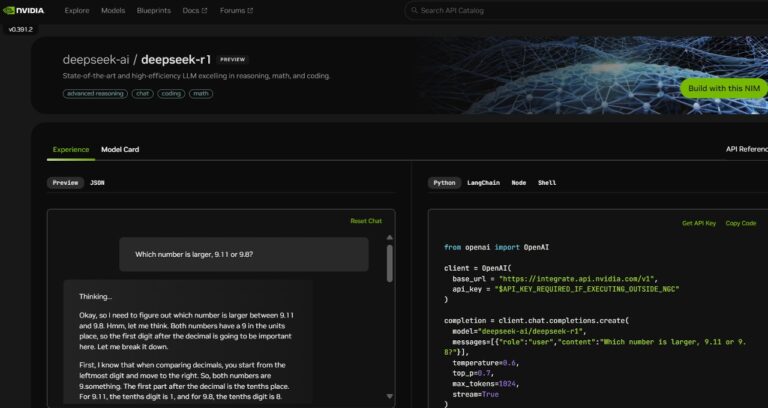

To help developers securely experiment with these capabilities and build their own specialized agents, the 671-billion-parameter DeepSeek-R1 model is now available as an NVIDIA NIM microservice preview on build.nvidia.com. The DeepSeek-R1 NIM microservice can deliver up to 3,872 tokens per second on a single NVIDIA HGX H200 system.

Developers can test and experiment with the application programming interface (API), which is expected to become available as a downloadable NIM microservice, part of the NVIDIA AI Enterprise software platform.

The DeepSeek-R1 NIM microservice simplifies deployment with support for industry-standard APIs. Enterprises can maximize security and data privacy by running the NIM microservice on their preferred accelerated computing infrastructure. Using NVIDIA AI Foundry with NVIDIA NeMo software, enterprises can also create customized DeepSeek-R1 NIM microservices for specialized AI agents.
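As a rough illustration of what calling such an API might look like, the sketch below builds an OpenAI-style chat completion request using only the Python standard library. Several things here are assumptions rather than facts from this article: that "industry-standard API" means an OpenAI-compatible chat completions route, the endpoint URL, the model identifier, and the `NVIDIA_API_KEY` environment variable name. Check the model page on build.nvidia.com for the actual values before relying on any of them.

```python
import json
import os
import urllib.request

# Assumptions, not taken from the article: the endpoint URL, the model
# identifier, and the environment variable name are illustrative.
BASE_URL = "https://integrate.api.nvidia.com/v1"
MODEL_ID = "deepseek-ai/deepseek-r1"


def build_chat_request(prompt, api_key, max_tokens=1024):
    """Build (without sending) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("NVIDIA_API_KEY")  # hypothetical variable name
    request = build_chat_request("Which is larger, 9.11 or 9.8?", key or "missing-key")
    if key:
        # The request is sent only when a key is configured.
        with urllib.request.urlopen(request, timeout=120) as response:
            body = json.load(response)
        print(body["choices"][0]["message"]["content"])
    else:
        print("Set NVIDIA_API_KEY to send the request to", request.full_url)
```

Keeping request construction separate from sending makes it easy to point the same code at a self-hosted microservice later by changing only `BASE_URL`.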

DeepSeek-R1: A Perfect Example of Test-Time Scaling

DeepSeek-R1 is a large mixture-of-experts (MoE) model. It incorporates an impressive 671 billion parameters, 10 times more than many other popular open-source LLMs. The model also uses an extreme number of experts per layer: each layer of R1 has 256 experts, and each token is routed to eight separate experts in parallel for evaluation.
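The routing step described above can be sketched as a simple top-k selection. This is a minimal illustration, not DeepSeek-R1's actual router: the scores are random numbers standing in for a learned gating network, and details such as how the selected experts' outputs are weighted and combined are left out. Only the counts (256 experts, eight per token) come from the article.

```python
import random

NUM_EXPERTS = 256  # experts per layer, from the article
TOP_K = 8          # experts each token is routed to, from the article


def route_token(router_scores, top_k=TOP_K):
    """Return the sorted indices of the top_k highest-scoring experts."""
    ranked = sorted(
        range(len(router_scores)),
        key=lambda i: router_scores[i],
        reverse=True,
    )
    return sorted(ranked[:top_k])


def active_fraction(num_experts=NUM_EXPERTS, top_k=TOP_K):
    """Fraction of a layer's experts that evaluate any single token."""
    return top_k / num_experts


if __name__ == "__main__":
    rng = random.Random(0)
    # Made-up scores; a real router computes these from the token itself.
    scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    print("experts chosen for this token:", route_token(scores))
    print(f"fraction of experts active per token: {active_fraction():.2%}")
```

Because only 8 of 256 experts run for a given token, most of the model's parameters sit idle on any single token, but different tokens pick different experts, so all of them must stay resident and reachable.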

Delivering real-time answers with R1 requires many GPUs with high compute performance, connected by high-bandwidth, low-latency communication so that prompt tokens can be routed to all the experts for inference. Combined with the software optimizations available in the NVIDIA NIM microservice, a single server with eight H200 GPUs connected using NVLink and NVLink Switch can run the full 671-billion-parameter DeepSeek-R1 model at up to 3,872 tokens per second. This throughput is made possible by using the NVIDIA Hopper architecture's FP8 Transformer Engine at every layer, together with NVLink bandwidth for MoE expert communication.
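For a sense of scale, the quoted throughput can be turned into a couple of back-of-the-envelope numbers. The sketch below assumes that 3,872 tokens per second is an aggregate peak across all concurrent requests on the server, which the article does not spell out, and the workload in the example (100 requests of 2,000 output tokens each) is invented for illustration. The per-GPU figure is plain division, not a measured result.

```python
AGGREGATE_TOKENS_PER_SECOND = 3872  # peak figure quoted in the article
GPUS_PER_SERVER = 8                 # eight H200 GPUs, from the article


def per_gpu_share(total_tps=AGGREGATE_TOKENS_PER_SECOND, gpus=GPUS_PER_SERVER):
    """Evenly divide the aggregate throughput across the server's GPUs."""
    return total_tps / gpus


def seconds_to_generate(total_output_tokens, tps=AGGREGATE_TOKENS_PER_SECOND):
    """Lower bound on wall-clock time to emit a batch of output tokens."""
    return total_output_tokens / tps


if __name__ == "__main__":
    print(f"per-GPU share: {per_gpu_share():.0f} tokens/s")
    # Hypothetical workload: 100 concurrent requests, 2,000 output tokens each.
    batch_tokens = 100 * 2000
    print(f"time for {batch_tokens} tokens: {seconds_to_generate(batch_tokens):.1f} s")
```

Since reasoning models emit long chains of intermediate tokens before the final answer, the output token count per request, and therefore this time, grows with how much "thinking" is allowed.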

Getting every floating-point operation per second (FLOPS) of performance out of a GPU is critical for real-time inference. The next-generation NVIDIA Blackwell architecture will give test-time scaling on reasoning models such as DeepSeek-R1 a huge boost for inference with fifth-generation Tensor Cores.

Get Started Now With the DeepSeek-R1 NIM Microservice

Developers can experience the DeepSeek-R1 NIM microservice, now available on build.nvidia.com. See how it works:

With NVIDIA NIM, enterprises can easily deploy DeepSeek-R1 and get the high efficiency needed for agentic AI systems.

See notice regarding software product information.