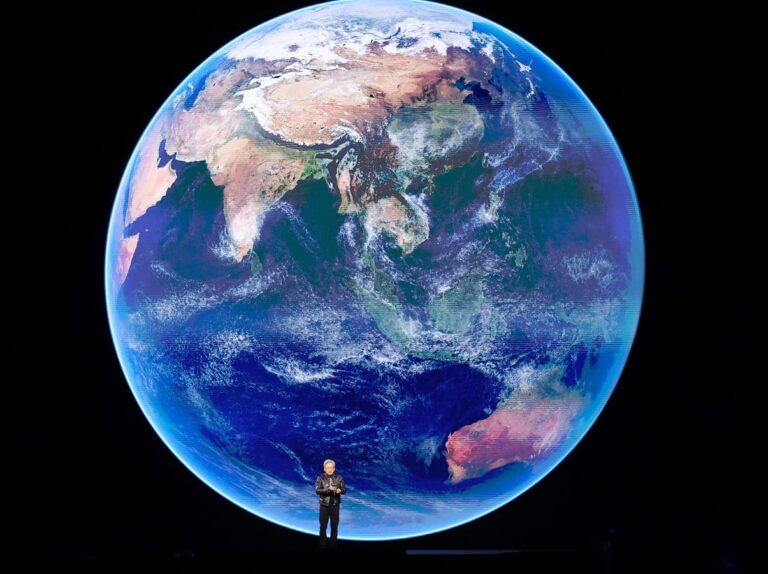

San Jose, CA – March 18: Nvidia CEO Jensen Huang delivers the keynote address. …

Getty Images

Among the many announcements at this year’s CES earlier this month, Nvidia unveiled something called Nvidia Cosmos.

The name itself doesn’t say much, but it evokes something broader: the sky, and the cosmologies we humans construct to explain the origin of everything.

So what is this system?

Nvidia describes Cosmos as a platform of “state-of-the-art generative world foundation models,” and defines world models as “neural networks that simulate real-world environments and predict accurate outcomes based on text, image, and video input.”

World models “understand” real-world physics, a spokesperson said. They support the development of robots, self-driving cars, and other physical systems that must follow traffic rules and workplace requirements. In a sense, they are the engines for the emergence of physical entities that think, reason, move, and ultimately live like humans.

Technical glossary

Nvidia representatives also detailed other aspects of Nvidia Cosmos, including “an advanced tokenizer that helps split high-level data into usable parts.”

For reference, ChatGPT describes advanced tokenizers as follows: “Advanced tokenizers go beyond simple white space or rule-based segmentation to produce subword, byte-level, or hybrid segments that better handle rare words, multilingual text, and domain-specific vocabularies. These ‘smart’ tokenizers are an important foundation of modern NLP systems, allowing models to scale to large datasets and diverse linguistic inputs.”

The models will be released under an open license so that developers can build on them in their own projects. As a January Nvidia press release explains:

“Physical AI models are expensive to develop and require vast amounts of real-world data and testing. Cosmos World Foundation Models (WFMs) provide developers with an easy way to generate large amounts of photorealistic, physically based synthetic data.”

While concerns about jailbreaks and hacks are understandable, companies will be excited to have this opportunity to build on what a major US technology company has created.

Then there is data curation, for which Nvidia NeMo provides what the company calls an “accelerated” pipeline.

Anyway, TL;DR: these are “physics-aware” systems. They look like important applications in which AI “walks among us” and affects our lives, rather than staying siloed somewhere on a computer. What will our robot friends look like? How will we treat them, and how will they treat us? These are the kinds of questions we have to think about as a society.

Nvidia Cosmos: Case study

When I read the list of companies that have already adopted Nvidia Cosmos technology, most of the names were unfamiliar to me. One, however, caught my attention:

Ride-sharing company Uber was an early adopter of this type of physical AI.

“Generative AI is driving the future of mobility and requires both rich data and extremely powerful computing,” Uber CEO Dara Khosrowshahi said in a press statement. “We believe that by working with NVIDIA, we can significantly accelerate the industry’s timeline for safe, scalable autonomous driving solutions.”

The phrase “safe, scalable autonomous driving” probably describes this project well, but as with self-driving car design over the past 20 years or so, the devil is in the details.

There’s no further information on what Uber is doing with Nvidia Cosmos. Still, the partnership gives a better sense of what the framework is for, and of Nvidia’s position as a leading innovator in these kinds of systems.

Omniverse

I also read an article in which the company describes the Nvidia Omniverse platform as:

“A platform of APIs, SDKs, and services that enables developers to integrate OpenUSD, NVIDIA RTX™ rendering technology, and generative physical AI into existing software tools and simulation workflows for industrial and robotics use cases.”

In short, the Omniverse platform seems to be about evaluation, monitoring, and tooling that help developers explore what is possible with the world foundation models themselves.

Inflection point

I would like to close with an enthusiastic remark CEO Jensen Huang reportedly made: “The ChatGPT moment for robots is here.”

That’s probably the headline here, because many of us are wondering when we’ll start seeing these smart, physics-aware robots walking among us or powering truly autonomous vehicles. I certainly am.

The answer seems to be that it will be sooner rather than later.