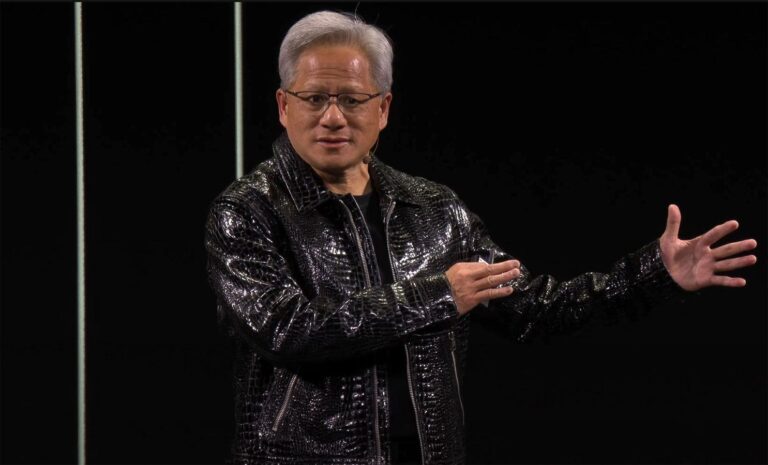

Nvidia CEO Jensen Huang delivers keynote speech at CES 2025 in Las Vegas, Nevada

Nvidia

In perhaps the most well-attended keynote in CES history, Nvidia CEO Jensen Huang took the stage at a packed Michelob Ultra Arena to deliver a dizzying array of new technology announcements. They ranged from consumer devices, including the new GeForce RTX 50 series gaming graphics cards, to a new safety-focused self-driving vehicle platform called Thor, based on the company’s latest Blackwell GPU technology, and more. But in my opinion, the other star of the show was Nvidia’s new generative AI technology called Cosmos, which some people may have overlooked because of its complexity. Suffice it to say, if Cosmos plays out as the company intends, it could be the launching pad that rocket-propels Nvidia’s robotics and self-driving car businesses.

Understanding Nvidia Cosmos for Physical AI

Nvidia Depicts What Machines See in Training Simulations Generated with Cosmos Models

Nvidia

Nvidia calls Cosmos “a platform for accelerating physical AI development.” Simply put, physical AI includes humanoid robots designed to optimally navigate the world we live in, factory automation robots, and self-driving cars, which are essentially robots optimized to navigate roads and transport people and cargo. You can think of physical AI as the brain behind every one of these robots. However, training robot AI requires significant effort and resources, often involving millions of hours of human interaction in real-world environments or millions of miles of journeys on real roads around the world, all of which need to be captured, labeled, and categorized.

Nvidia Cosmos aims to partially solve this resource problem with a family of what the company calls “world foundation models” (WFMs), AI neural networks that can generate accurate, physics-aware videos of the future state of a virtual environment (or a multiverse, if you will). You can queue up Doctor Strange now; Jensen even mentioned the Marvel character during his keynote. It all sounds mind-bogglingly deep, but it’s actually fairly simple. A WFM is similar to a large language model, but whereas an LLM is an AI model trained for natural language recognition, generation, translation, and so on, a WFM uses text, images, video content, and motion data to generate accurate simulated virtual worlds and virtual-world interactions, complete with spatial awareness, physics and physical interaction, and even object permanence. For example, if a bolt rolls off a table in a factory and is no longer visible in the current camera view, the AI model knows the bolt is still there, just probably on the floor.

Are you still with me? Good, because this is where things get even more interesting. To be accurate, this new form of synthetic data generation for training physical AI, or robots, must be based on ground truth. In other words, bad data leads to a corrupted model that hallucinates or is otherwise unreliable at generating training data for robot AI. That’s where Nvidia Omniverse, which the company announced a few years ago, comes in.

Cosmos is built to interface with Nvidia Omniverse digital twins

Nvidia’s Huang details applications of Cosmos AI world foundation models for robotics

Nvidia

Nvidia’s Omniverse digital twin operating system allows companies and developers in virtually any industry to simulate their products, factories, robots, vehicles, and more. In fact, Nvidia also announced new Omniverse “Blueprints” at CES 2025. They help developers simulate robot fleets for factories and warehouses (a blueprint called Mega), run AV simulations, stream large-scale industrial digital twins spatially to Apple Vision Pro headsets, and perform real-time computer-aided engineering and physics visualization. The company is bundling these with free training courses on OpenUSD (Universal Scene Description), the language that powers Omniverse and enables the integration of industry-standard tools and content. Nvidia announced that several major companies have adopted its Omniverse platform, from semiconductor EDA design tool maker Cadence to computational fluid dynamics companies Altair and Ansys.

Returning to Cosmos, this is where Nvidia’s full-stack solution for physical AI in robotics comes together. Cosmos models take input from the digitized real world and generate AI training content from it. The Cosmos models were trained on 20 million hours of video data, but as Huang noted in his keynote, developers who want to train physical or robotic AI with their own digital twins and their own data can simulate them in Omniverse and then let Cosmos do the work. Cosmos recreates a myriad of synthetic realities on which these robot AIs can be trained.

Is Cosmos the new CUDA moment for Nvidia?

At this point, I know what you’re thinking: what could go wrong with training robots on simulated data in a simulated world? There’s no question that this technology is still in its infancy, but as the old saying goes, you have to start somewhere. Machine learning is prone to hallucinations and requires guardrails (Nvidia has well-documented tools and policies for this), but its advantage is that you can keep training until you’re confident the model has learned correctly. And this machine never sleeps or takes coffee breaks, not to mention it’s much more efficient than manually training an AI on human-generated and human-classified content.

Nvidia CEO Jensen Huang on stage at CES 2025 to discuss the company’s three-computer solution …

Nvidia

That said, years ago, when Nvidia first introduced the CUDA programming platform that sparked the era of GPU-accelerated machine learning, the company became something of a Johnny Appleseed, inviting developers from all walks of life and making its tools available to them. That ultimately allowed CUDA to become the de facto standard for accelerating AI workloads in the data center. With Cosmos, Nvidia is once again making these generative AI world foundation models available free to developers under an open model license, accessible through Hugging Face or the company’s own NGC catalog. These models will also soon be available as optimized Nvidia Inference Microservices (NIMs), all accelerated by the DGX AI platform in the data center and, at the edge, by the AGX Drive Orin and Thor computer platforms in robots and self-driving cars. Or, as Huang and company call it, Nvidia’s “three-computer solution” for robotics.

Nvidia CEO Jensen Huang holds the company’s new AGX Drive Thor autonomous vehicle platform …

Nvidia

Nvidia points out that some of the largest companies in the world developing physical AI, from humanoid robotics companies like 1X to those building AI models for the AV industry, have already adopted Cosmos.

It may be a stretch to call this another “CUDA moment” for Nvidia, but the world leader in AI has released a very powerful new tool for physical AI developers, and for free. I personally think this is another masterstroke from Jensen Huang and his band of AI wizards. We’ll have to see how far down the multiverse rabbit hole robot AI goes with Cosmos, but it should be interesting to watch.