Project Digits

Nvidia

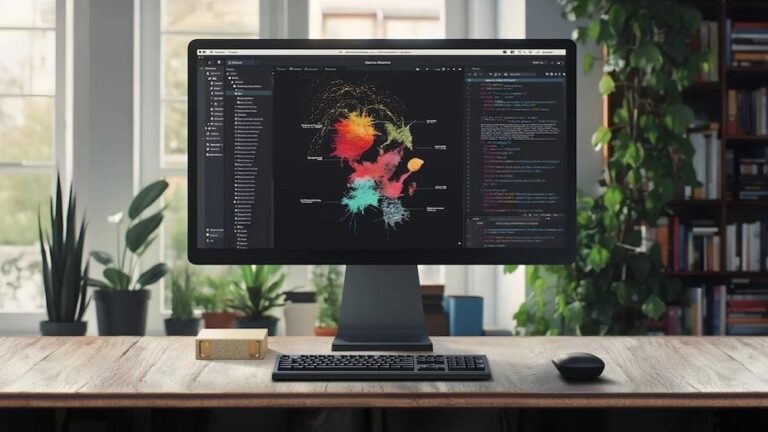

At CES 2025, Nvidia announced Project Digits, which it describes as a personal AI supercomputer. As generative AI advances, data scientists and AI engineers working on cutting-edge models and solutions need access to the latest generations of CPUs and GPUs. Digits is aimed at developers and data scientists who want an affordable, accessible hardware and software platform for the full lifecycle of their generative AI models. It includes what you need to build end-to-end generative AI solutions, from inference to fine-tuning to agent development.

Here’s a detailed analysis of Project Digits:

Starting at $3,000, Nvidia Project Digits is a compact device powered by the Nvidia GB10 Grace Blackwell superchip, which lets developers prototype, fine-tune, and run large AI models locally. This affordability is an important step toward democratizing AI development, putting it within reach of individuals and small organizations.

Independent software vendors can also use Digits as an appliance to run AI-powered software deployed at customer locations. This reduces dependence on the cloud and helps with privacy, confidentiality, and compliance, since data can stay on-site.

Hardware specifications: AI powerhouse on your desktop

At the heart of Digits is the Nvidia GB10 Grace Blackwell superchip, which combines a Blackwell GPU with a 20-core Grace CPU. The two are connected by NVLink-C2C, a chip-to-chip interconnect that provides high-bandwidth, low-latency data transfer between the GPU and CPU. Think of it as a highway between two busy cities, carrying traffic quickly in both directions. This tight integration is central to Digits' performance, allowing it to handle demanding AI workloads efficiently.

The main specifications break down as follows:

- Blackwell GPU: CUDA cores and fifth-generation Tensor Cores to accelerate AI computation.
- Nvidia Grace CPU: 20 power-efficient Arm cores that complement the GPU for balanced AI workloads.
- NVLink-C2C interconnect: a high-bandwidth, low-latency connection between GPU and CPU for efficient data transfer.
- 128 GB of unified memory: a single memory pool shared by the CPU and GPU, eliminating data copies between them and speeding up processing.
- Fast NVMe storage: quick access to data for training and running AI models.
- Up to 1 petaflop of AI performance (at FP4 precision): enough to handle complex AI tasks and large-scale models.
- Power-efficient design: runs from a standard wall outlet.

Because the 128 GB of memory is unified, the CPU and GPU share the same pool, so data does not have to be copied back and forth between them, which speeds up processing. This is especially useful for AI workloads, which often involve large datasets and large models. Digits also includes high-speed NVMe storage for quick access to the data used to train and run AI models.
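To make the shared-pool idea concrete, here is a minimal Python sketch that tallies allocations against the 128 GB unified memory figure. With unified memory, CPU-side and GPU-side allocations draw from the same pool, so one budget check covers both. The component names and sizes are hypothetical placeholders, not measurements from Digits.

```python
# Minimal sketch: with unified memory, CPU-side and GPU-side allocations share
# one pool, so a single budget check covers both.
# The 128 GB figure is Nvidia's spec; every workload size below is a
# hypothetical placeholder, not a measured number.

UNIFIED_MEMORY_GB = 128.0


def remaining_memory_gb(allocations_gb: dict[str, float],
                        pool_gb: float = UNIFIED_MEMORY_GB) -> float:
    """Return the memory left in the shared pool after all allocations."""
    return pool_gb - sum(allocations_gb.values())


# Hypothetical workload: "CPU" and "GPU" items are counted against one pool.
workload = {
    "model_weights": 70.0,    # read by the GPU
    "kv_cache": 16.0,         # GPU working memory during inference
    "dataset_in_ram": 20.0,   # loaded by CPU-side Python code
    "os_and_runtime": 8.0,    # OS, drivers, framework overhead
}

left = remaining_memory_gb(workload)
print(f"Headroom: {left:.1f} GB")  # 128 - 114 = 14.0 GB
```

On a system with separate CPU RAM and GPU VRAM, the same workload would need two budgets plus room for copies moving between them; the single sum here reflects the "no copies" point made above.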

Despite its performance, Digits is designed with power efficiency in mind. Unlike traditional supercomputers, which often require specialized power and cooling infrastructure, Digits runs from a standard wall outlet. That makes it a practical option for individuals and small teams who lack the facilities needed to run large, power-hungry systems.

Proven AI software stack powered by CUDA

Project Digits is designed to fit into Nvidia's broader AI ecosystem, giving developers a consistent environment for AI development. It runs Nvidia DGX OS, a Linux-based operating system tailored for high-performance computing. Preloaded with Nvidia's AI software stack, including the Nvidia AI Enterprise software platform, it gives immediate access to a wide range of familiar tools and frameworks for AI research and development.

The system supports widely used AI frameworks and tools such as PyTorch, Python, and Jupyter Notebook, so developers can build and experiment with models in a familiar environment. It also supports the Nvidia NeMo framework for fine-tuning language models at scale and the RAPIDS libraries for accelerating data science workflows.

For connectivity and scaling, Project Digits uses Nvidia ConnectX networking for high-speed data transfer between systems. Two Project Digits units can be linked this way to run models with up to 405 billion parameters, roughly double what a single unit can handle. This lets a setup grow with the computational demands of increasingly complex AI models.
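The 405-billion-parameter figure can be sanity-checked with rough arithmetic. The sketch below assumes the model is split pipeline-style across the two linked units, with 4-bit weights and uniformly sized layers. The layer count and the function names are hypothetical, only weight memory is counted, and real deployments rely on the serving framework to partition a model rather than on code like this.

```python
# Minimal sketch: splitting a model's layers across two linked units
# (pipeline-style). Assumptions: uniform layer sizes, 4-bit weights, and a
# hypothetical layer count. Counts weight memory only (no KV cache/activations).

PER_UNIT_MEMORY_GB = 128.0


def split_layers(num_layers: int, num_units: int) -> list[range]:
    """Assign contiguous blocks of layers to units as evenly as possible."""
    base, extra = divmod(num_layers, num_units)
    blocks, start = [], 0
    for unit in range(num_units):
        size = base + (1 if unit < extra else 0)
        blocks.append(range(start, start + size))
        start += size
    return blocks


def per_unit_weight_gb(params: float, bits: int,
                       layers: range, num_layers: int) -> float:
    """Weight memory on one unit, assuming params are spread evenly over layers."""
    total_gb = params * bits / 8 / 1e9
    return total_gb * len(layers) / num_layers


PARAMS = 405e9     # 405B-parameter model, the figure cited for two units
BITS = 4           # assumption: 4-bit weights
NUM_LAYERS = 126   # hypothetical layer count, for illustration only

for unit, layers in enumerate(split_layers(NUM_LAYERS, 2)):
    gb = per_unit_weight_gb(PARAMS, BITS, layers, NUM_LAYERS)
    print(f"unit {unit}: layers {layers.start}-{layers.stop - 1}, "
          f"{gb:.2f} GB of {PER_UNIT_MEMORY_GB:.0f} GB")
```

At 4 bits per parameter, 405 billion parameters need about 202.5 GB for weights, more than one 128 GB unit but under the 256 GB of two, with each unit holding roughly half.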

Project Digits is also designed to work alongside cloud and data center infrastructure. Because Nvidia uses a consistent architecture and software platform across its ecosystem, developers can prototype and fine-tune AI models locally and then scale them out to the cloud or data center without running into compatibility issues. This smooths the transition from development to production and shortens time to deployment.

Nvidia Project Digits comes with the following software stack:

- Nvidia DGX OS: a Linux-based operating system optimized for AI workloads, providing stability and performance.
- Preinstalled Nvidia AI software stack: immediate access to Nvidia's AI tools and frameworks to streamline development.
- Nvidia AI Enterprise: a suite of AI and data analytics software with enterprise-grade support and security.
- Nvidia NGC Catalog: a repository of software development kits, frameworks, and pretrained models for efficient AI model development and deployment.
- Nvidia NeMo framework: fine-tuning and deployment of language models at scale, including advanced natural language processing tasks.
- Nvidia RAPIDS libraries: GPU-accelerated libraries for data processing and machine learning that speed up data science workflows.
- Support for popular AI frameworks: compatibility with widely used tools such as PyTorch, Python, and Jupyter Notebook, so developers can work in a familiar environment.

With up to a petaflop of AI performance and an integrated software stack, a single Project Digits unit can handle AI models with up to 200 billion parameters. Work at this scale was previously limited to much larger systems; Digits brings it to the desktop, letting developers experiment with and deploy cutting-edge AI models locally.
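Back-of-the-envelope arithmetic shows why model size and numeric precision go together here. The sketch computes the weights-only footprint of a 200-billion-parameter model at several precisions and compares it with the 128 GB unified memory pool. It assumes 1 GB = 10^9 bytes and ignores the KV cache, activations, and runtime overhead, all of which add to the real total.

```python
# Minimal sketch: weights-only memory footprint of a 200B-parameter model at
# several precisions, compared with the 128 GB unified memory pool.
# Ignores KV cache, activations, and runtime overhead.

UNIFIED_MEMORY_GB = 128


def weights_gb(params: float, bits_per_param: int) -> float:
    """Memory needed for the weights, in GB (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


for label, bits in [("FP16", 16), ("FP8", 8), ("FP4", 4)]:
    gb = weights_gb(200e9, bits)
    verdict = "fits" if gb <= UNIFIED_MEMORY_GB else "does not fit"
    print(f"{label}: {gb:.0f} GB -> {verdict}")

# FP16: 400 GB -> does not fit
# FP8: 200 GB -> does not fit
# FP4: 100 GB -> fits
```

Only the 4-bit case leaves headroom within 128 GB, which is consistent with the petaflop figure being quoted at FP4 precision.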

Project Digits ecosystem support

Nvidia CEO Jensen Huang discussed the development of Digits and the partnerships behind it. He highlighted the collaboration with MediaTek, a leading fabless semiconductor company, on designing an energy-efficient CPU for Digits. The partnership let Nvidia draw on MediaTek's expertise in low-power CPU design, contributing to Digits' power efficiency.

Huang also described how Digits bridges Linux and Windows environments. Although Digits runs a Linux-based operating system, it is designed to work with Windows PCs through Windows Subsystem for Linux (WSL). This makes it easy for developers who work primarily in Windows to use Digits for their AI projects.

Target audience and usage examples

Nvidia Digits is designed for AI researchers, data scientists, students, and developers working with large-scale AI models. It lets them prototype, fine-tune, and run models locally and then deploy them in the cloud or in their own data centers, giving them flexibility and control over their AI development workflows.

By bringing the power of an AI supercomputer to the desktop, Digits addresses the growing need for improved AI performance in a compact and accessible form factor. This allows individuals and small teams to tackle complex AI challenges without relying on expensive cloud computing resources or large-scale supercomputing infrastructure.

The potential use cases for Digits are broad. Developers can use it to prototype new AI applications, fine-tune LLMs for specific tasks, generate AI-powered content, and research new AI algorithms and architectures. Running large-scale models locally also enables faster iteration and experimentation.

Nvidia Digits will be available from Nvidia and its partners in May, starting at $3,000. This pricing puts it within reach of a wider range of users and further contributes to democratizing AI development.

Conclusion

Nvidia Digits represents a major step forward in AI hardware. By combining powerful hardware, a comprehensive software stack, and a compact, power-efficient design, it brings the power of an AI supercomputer to the desktop. It has the potential to democratize AI development, making it more accessible to individuals, researchers, and small organizations. The ability to run large-scale models locally, with the flexibility to deploy them in the cloud or data center, gives developers greater control over their AI workflows. Once Digits becomes available, it will be interesting to see the applications and advances it enables.