Nvidia CEO Jensen Huang says the performance of his company’s AI chips is advancing faster than the historical rate set by Moore’s Law, the rubric that has driven advances in computing for decades.

“Our systems are advancing much faster than Moore’s Law,” Huang said in an interview with TechCrunch the morning after his keynote speech to a crowd of 10,000 people at CES in Las Vegas.

Moore’s Law, proposed by Intel co-founder Gordon Moore in 1965, predicted that the number of transistors on computer chips would roughly double each year, effectively doubling the performance of those chips. The prediction largely held, producing rapid advances in capability and plummeting costs over several decades.

In recent years, Moore’s Law has slowed down. But Huang argues that Nvidia’s AI chips are evolving at their own accelerated pace. The company says its latest data center superchip can run AI inference workloads more than 30 times faster than previous generations.

“You can build architectures, chips, systems, libraries, and algorithms all at the same time,” Huang says. “Then you can innovate across the stack and move faster than Moore’s Law.”

The Nvidia CEO’s bold claim comes at a time when many are wondering whether progress in AI is stalling. Major AI labs such as Google, OpenAI, and Anthropic use Nvidia’s AI chips to train and run their AI models, so advances in these chips could translate into further advances in AI model capabilities.

This isn’t the first time Huang has suggested Nvidia has surpassed Moore’s Law. In a November podcast, Huang suggested that the world of AI is moving at a “hyper-Moore’s Law” pace.

Huang rejects the idea that progress in AI is slowing. Instead, he argues that there are currently three active AI scaling laws: pre-training, the initial training stage in which an AI model learns patterns from large amounts of data; post-training, which fine-tunes an AI model’s answers using methods such as human feedback; and test-time compute, which occurs during the inference phase and gives an AI model more time to “think” after each question.

“Moore’s Law was very important in the history of computing because it lowers the cost of computing,” Huang told TechCrunch. “A similar thing happens with inference, resulting in improved performance and, as a result, a reduction in the cost of inference.”

(Of course, it helps Huang to say that, since Nvidia has ridden the AI boom to become the most valuable company on the planet.)

Nvidia’s H100 was the chip of choice for tech companies looking to train AI models, but now that those companies are focusing more on inference, some have questioned whether Nvidia’s expensive chips will stay on top.

Currently, AI models that use test-time compute are expensive to run. There are concerns that OpenAI’s o3 model, which uses a scaled-up version of test-time compute, is too expensive for most people to use. For example, OpenAI spent nearly $20 per task using o3 to achieve human-level scores on a test of general intelligence. By comparison, a ChatGPT Plus subscription costs $20 for an entire month.

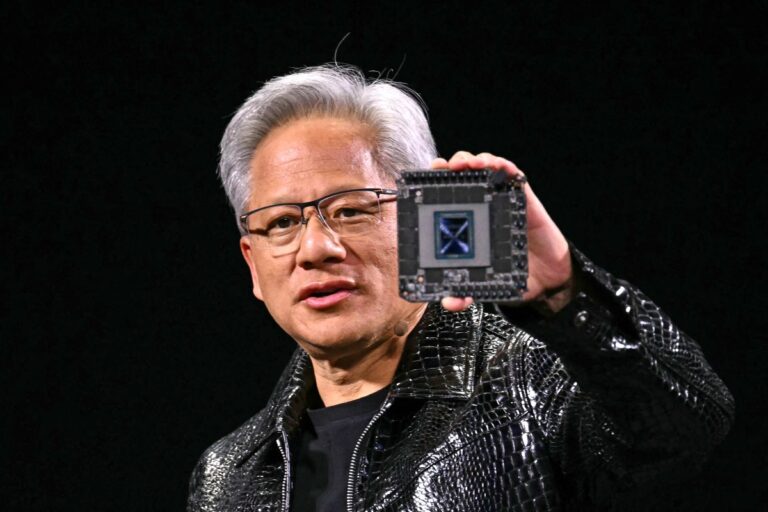

During Monday’s keynote, Huang held up Nvidia’s latest data center superchip, the GB200 NVL72, on stage like a shield. The chip runs AI inference workloads 30 to 40 times faster than Nvidia’s previous best-selling chip, the H100. Huang said this jump in performance means that AI reasoning models like OpenAI’s o3, which use large amounts of compute during the inference phase, will become cheaper over time.

Huang said the company is focused on developing higher-performance chips overall, and that higher-performance chips will yield lower prices in the long run.

“The direct and immediate solution to test-time computing, both in terms of performance and affordability, is to increase computing power,” Huang told TechCrunch. He noted that in the longer term, AI reasoning models could be used to create better data for the pre-training and post-training of AI models.

We’ve certainly seen the price of AI models plummet in the last year, in part due to computing breakthroughs from hardware companies like Nvidia. Huang says he expects this trend to continue with AI reasoning models, even though the first versions we’ve seen from OpenAI have been quite expensive.

More broadly, Huang claimed that today’s AI chips are 1,000 times better than those made 10 years ago. That’s a much faster pace than the standard set by Moore’s Law, and Huang says he sees no sign of it stopping anytime soon.
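
For readers who want to check the arithmetic, here is a rough sketch of how a 1,000x gain over 10 years compares with Moore’s Law under two doubling periods. The one-year period is the 1965 formulation described above; the two-year period is Moore’s own 1975 revision, which is an assumption added here and was not mentioned in the interview. The function names are illustrative only.

```python
import math


def growth_over(years: float, doubling_period_years: float) -> float:
    """Total improvement factor after `years` if performance
    doubles once every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


def implied_doubling_period(total_factor: float, years: float) -> float:
    """Doubling period, in years, implied by a total improvement
    of `total_factor` achieved over `years`."""
    return years * math.log(2) / math.log(total_factor)


CLAIMED_FACTOR = 1000  # Huang's figure, as reported in the article
SPAN_YEARS = 10

# 1965 formulation: doubling every year.
yearly = growth_over(SPAN_YEARS, 1.0)

# 1975 revision (assumption, not from the interview): doubling every two years.
two_year = growth_over(SPAN_YEARS, 2.0)

# Doubling period that a 1,000x gain in 10 years would imply.
period = implied_doubling_period(CLAIMED_FACTOR, SPAN_YEARS)

print(f"Doubling every year for {SPAN_YEARS} years: {yearly:.0f}x")
print(f"Doubling every two years for {SPAN_YEARS} years: {two_year:.0f}x")
print(f"Doubling period implied by {CLAIMED_FACTOR}x: {period:.2f} years")
```

How far the claim outpaces Moore’s Law depends on which doubling period is used as the baseline: the gap is large against the two-year version and small against the original one-year version.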
