NVIDIA researchers announced Hymba 1.5B, an open source language model that combines transformer and state-space model (SSM) architectures to improve both efficiency and performance. Designed using NVIDIA’s optimized training pipeline, Hymba addresses the computational and memory limitations of traditional transformers while enhancing the recall capabilities of SSMs.

Traditional transformer-based language models are good at long-term recall and parallelization, but they face major challenges from quadratic computational complexity and large memory requirements. SSMs such as Mamba and Mamba-2, on the other hand, offer constant complexity and hardware optimizations but perform poorly on memory recall tasks. Hymba addresses these tradeoffs by combining the strengths of both architectures.

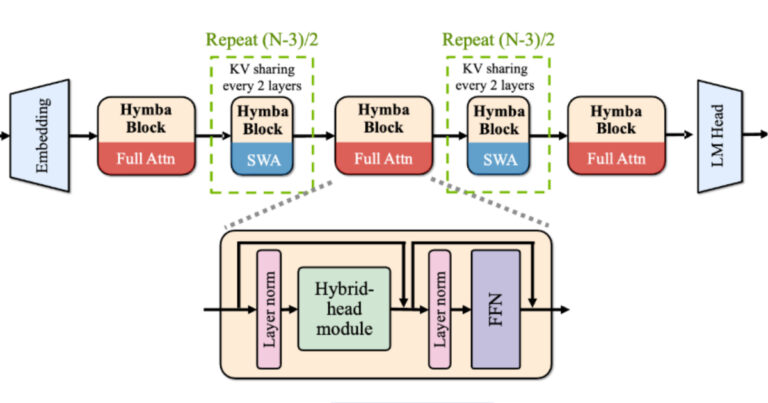

Hymba’s hybrid head module fuses an attention head for high-resolution recall and an SSM head for efficient context summarization, allowing both components to operate in parallel rather than sequentially. This design reduces computational and memory requirements without sacrificing performance.
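The parallel arrangement can be illustrated with a toy sketch in plain Python. This is not NVIDIA's implementation: the function names, the unlearned `q = k = v = x` attention, the fixed `decay` recurrence, and the weighted-sum fusion with `beta_attn` and `beta_ssm` are all assumptions chosen to keep the example small. It only shows that both heads read the same input and that their outputs are combined per token.

```python
import math

def causal_attention_head(xs):
    """Toy causal attention with q = k = v = x (no learned weights)."""
    d = len(xs[0])
    outputs = []
    for t, q in enumerate(xs):
        past = xs[: t + 1]
        # Score the current token against itself and all earlier tokens.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in past]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, past)) / total for j in range(d)]
        )
    return outputs

def ssm_head(xs, decay=0.9):
    """Toy linear recurrence: h_t = decay * h_{t-1} + (1 - decay) * x_t."""
    state = [0.0] * len(xs[0])
    outputs = []
    for x in xs:
        # The fixed-size state summarizes everything seen so far.
        state = [decay * h + (1.0 - decay) * xi for h, xi in zip(state, x)]
        outputs.append(list(state))
    return outputs

def hybrid_head(xs, beta_attn=0.5, beta_ssm=0.5):
    """Run both heads on the same input and fuse their outputs per token."""
    attn_out = causal_attention_head(xs)
    ssm_out = ssm_head(xs)
    return [
        [beta_attn * a + beta_ssm * s for a, s in zip(a_row, s_row)]
        for a_row, s_row in zip(attn_out, ssm_out)
    ]

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # made-up 2-dimensional inputs
fused = hybrid_head(tokens)
```

Neither head consumes the other's output here, which is the point of the parallel design: the attention path keeps exact access to earlier tokens, while the recurrent path carries a compressed summary.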

The Hymba 1.5B architecture focuses on increasing efficiency while maintaining accuracy by introducing several mechanisms:

- Attention overhead reduction: More than 50% of attention computation is replaced with SSM processing, reducing cost while maintaining task accuracy.
- Local attention: The combination of local attention and SSM heads sufficiently summarizes global information, so global attention is kept to a minimum.
- KV cache optimization: Hymba introduces cross-layer KV cache sharing, which reduces cache redundancy between layers and significantly reduces memory usage (to as little as one tenth of that of comparable transformer models).
- Meta tokens: A set of 128 learnable embeddings that are prepended to the prompt and act as memory initializers.
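To see why local attention and cross-layer sharing shrink the KV cache, a back-of-the-envelope estimate helps. The sketch below is an illustration under stated assumptions, not Hymba's actual configuration: the layer count, head sizes, window length, number of global layers, and sharing group size are hypothetical, and sharing is applied only to the local-attention layers.

```python
def kv_cache_bytes(seq_len, num_layers, num_kv_heads, head_dim,
                   bytes_per_value=2, window=None,
                   num_global_layers=0, share_group=1):
    """Estimate the KV cache size in bytes for a single sequence.

    window:            sliding-window length for local layers (None = full attention)
    num_global_layers: layers that still cache the whole sequence
    share_group:       number of consecutive local layers that share one cache
    """
    def per_layer(tokens_cached):
        # Keys and values (factor 2) for every KV head and cached position.
        return 2 * num_kv_heads * head_dim * tokens_cached * bytes_per_value

    local_tokens = seq_len if window is None else min(seq_len, window)
    num_local_layers = num_layers - num_global_layers
    # Ceiling division: layers in one sharing group store a single cache.
    local_caches = -(-num_local_layers // share_group)
    return (num_global_layers * per_layer(seq_len)
            + local_caches * per_layer(local_tokens))

# Hypothetical configuration, chosen only to make the arithmetic easy to follow.
baseline = kv_cache_bytes(seq_len=8192, num_layers=32, num_kv_heads=8, head_dim=64)
hybrid = kv_cache_bytes(seq_len=8192, num_layers=32, num_kv_heads=8, head_dim=64,
                        window=1024, num_global_layers=3, share_group=2)

print(f"baseline: {baseline / 2**20:.0f} MiB, hybrid: {hybrid / 2**20:.0f} MiB")
```

With these made-up numbers the full-attention baseline needs 512 MiB and the hybrid layout 78 MiB. The exact ratio depends entirely on the assumed configuration, so it should not be read as a reproduction of the figure reported for Hymba.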

Source: NVIDIA Blog

The role of learnable metatokens is debated. Daniel Svonava, Machine Learning Specialist at Superlinked, asked the following question:

Can you explain how learnable metatokens improve the attention focusing mechanism compared to traditional methods?

Data scientist Marek Barak explained:

Attention has the problem of placing too much emphasis on the first token in a sentence. There is little semantic reason for this, since the first token does not contain much information. Using meta tokens results in a more balanced softmax distribution across tokens.

Hymba 1.5B performed strongly in head-to-head comparisons with leading models under 2 billion parameters, including Llama 3.2 1B, OpenELM 1B, and Qwen 2.5 1.5B. Hymba outperformed these competitors across benchmarks including MMLU, ARC-C, HellaSwag, and SQuAD-C.

Source: https://arxiv.org/pdf/2411.13676

NVIDIA has optimized Hymba’s training pipeline to balance task performance and efficiency. The pretraining strategy involved a two-step process: initial training on a diverse, unfiltered dataset, followed by fine-tuning on high-quality data. Instruction fine-tuning then enhanced the model’s capabilities through stages such as supervised fine-tuning (SFT) and reinforcement learning with direct preference optimization (DPO).
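For readers unfamiliar with DPO (direct preference optimization), the standard per-example loss can be written in a few lines. This is a sketch of the generic objective, not of Hymba's training code: the function name, the argument names, and the `beta=0.1` default are assumptions, and the article does not state which hyperparameters NVIDIA used.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Generic DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response under
    either the policy being trained or the frozen reference model.
    """
    # How much more the policy favors each response than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)), computed in a numerically stable way.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))

# Made-up log-probabilities: the policy has shifted toward the chosen response.
loss_neutral = dpo_loss(-2.0, -2.0, -2.0, -2.0)
loss_improved = dpo_loss(-1.0, -3.0, -2.0, -2.0)
```

The loss falls as the policy raises the likelihood of the preferred response relative to the reference model and lowers that of the rejected one, which is why no separate reward model is needed.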

Hymba 1.5B is available as an open source release on Hugging Face and GitHub, allowing researchers and developers to test its features in real-world applications.