The pace of technological innovation has accelerated over the past year, especially in AI. And in 2024, there’s no better place to be a part of creating these breakthroughs than at NVIDIA Research.

NVIDIA Research is made up of hundreds of exceptional people who advance the frontiers of knowledge across many areas of technology, not just AI.

Last year, NVIDIA Research laid the foundation for future improvements in GPU performance with key research discoveries in circuits, memory architectures, and sparse computation. The team's innovative graphics inventions continue to raise the bar for real-time rendering. We've also developed new ways to improve AI efficiency, so models need less energy and fewer GPU cycles while delivering better results.

But the most exciting development this year has been in generative AI.

Generative AI can now produce not only images and text but also 3D models, music, and sound. We're also developing better control over what is generated, whether that means realistic humanoid movement or a series of images with a consistent subject.

Applying generative AI to science has produced high-resolution weather forecasts that are more accurate than traditional numerical weather models. AI models can now accurately predict how blood sugar levels will respond to different foods. Embodied generative AI is being used to develop self-driving cars and robots.

And that was just this year. Below, we detail NVIDIA Research’s best generative AI efforts in 2024. Of course, we continue to develop new models and methods for AI, and we expect even more exciting results in the coming year.

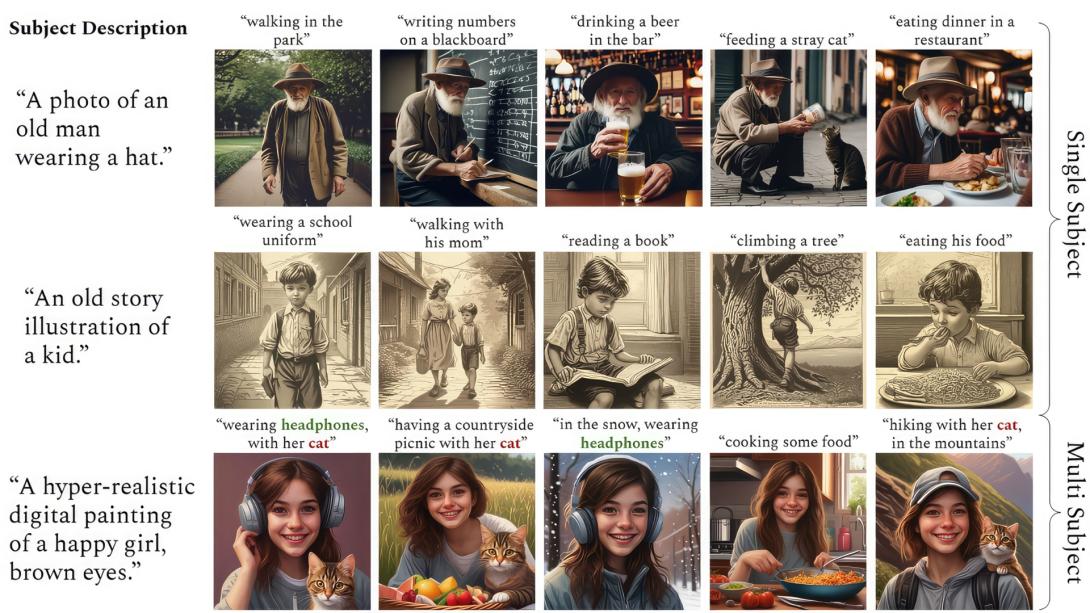

ConsiStory: AI-generated images with main character energy

ConsiStory, a collaboration between NVIDIA and Tel Aviv University researchers, makes it easy to generate multiple images with a consistent protagonist. This is an essential feature for storytelling use cases such as comic book illustration and storyboard development.

The researchers' approach introduced a technique called subject-driven shared attention, which cut the time it takes to generate consistent images from 13 minutes to about 30 seconds.

Read the ConsiStory paper.

Edify 3D: Generative AI enters a new dimension

NVIDIA Edify 3D is a foundational model that allows developers and content creators to rapidly generate 3D objects that can be used to prototype ideas and populate virtual worlds.

Edify 3D helps creators quickly ideate, lay out, and conceptualize immersive environments using AI-generated assets. Both beginners and experienced content creators can use the model with text and image prompts. It is part of NVIDIA Edify, a multimodal architecture for developing visual generative AI.

Read the Edify 3D paper and watch the video on YouTube.

Fugatto: A flexible AI sound machine for music, voice, and more

A team of NVIDIA researchers recently announced Fugatto, a foundational generative AI model that can create or transform any combination of music, voice, and sound based on text or audio prompts.

For example, the model can create music snippets from text prompts, add or remove instruments from existing songs, change the accent or emotion in a voice recording, or generate entirely new sounds. It could be used by music producers, advertising agencies, video game developers, or creators of language learning tools.

Read the Fugatto paper.

GluFormer: AI predicts blood sugar levels four years in advance

Researchers from the Weizmann Institute of Science, Tel Aviv-based startup Pheno.AI, and NVIDIA led the development of GluFormer, an AI model that can predict an individual's future blood sugar levels and other health indicators based on past blood sugar monitoring data.

The researchers also showed that when dietary intake data is added to the model, GluFormer can predict how a person's blood sugar levels will respond to specific foods or dietary changes, enabling precision nutrition. The research team validated GluFormer on 15 other datasets and found that it generalizes to predicting health outcomes for other groups, including people with prediabetes, type 1 and type 2 diabetes, gestational diabetes, and obesity.

Read the GluFormer paper.

LATTE3D: Converting text to 3D shapes almost instantly

Another 3D generator released by NVIDIA Research this year is LATTE3D, which converts text prompts into 3D representations in under a second, like a high-speed virtual 3D printer. Because the generated shapes are created in common formats used by standard rendering applications, they can easily be deployed in virtual environments for developing video games, advertising campaigns, design projects, or virtual training grounds for robotics.

Read the LATTE3D paper.

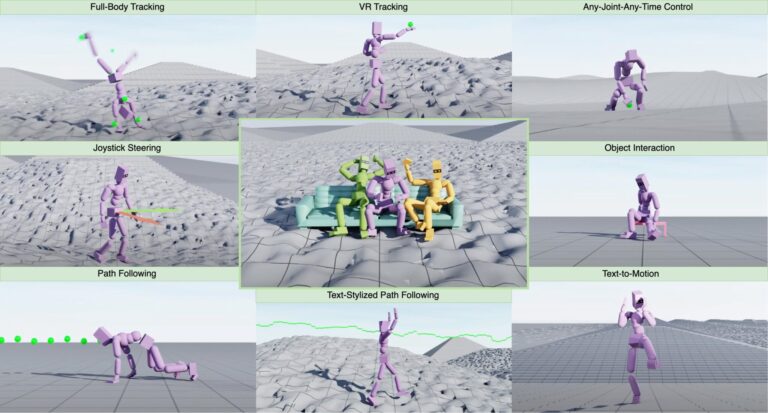

MaskedMimic: Reconstructing realistic movements of humanoid robots

To advance the development of humanoid robots, NVIDIA researchers introduced MaskedMimic, an AI framework that applies inpainting (the process of reconstructing complete data from incomplete or masked views) to descriptions of motion.

Given partial information, such as a textual description of a movement or head and hand position data from a virtual reality headset, MaskedMimic can fill in the blanks and infer whole-body movements. It is part of NVIDIA Project GR00T, a research initiative to accelerate humanoid robot development.

Read the MaskedMimic paper.

StormCast: Enhance weather forecasts and climate simulations

In climate science, NVIDIA Research announced StormCast, a generative AI model that emulates atmospheric dynamics. While other machine learning models trained on global data have a spatial resolution of approximately 30 kilometers and a temporal resolution of 6 hours, StormCast achieves a spatial resolution of 3 kilometers at an hourly time scale.

The researchers trained StormCast on about three and a half years of NOAA climate data for the central United States. When precipitation radar data is incorporated, StormCast provides forecasts with lead times of up to six hours that are up to 10% more accurate than those of the U.S. National Oceanic and Atmospheric Administration's most advanced 3-kilometer regional weather prediction model.

Read the StormCast paper, written in collaboration with researchers at Lawrence Berkeley National Laboratory and the University of Washington.

NVIDIA Research sets records in AI, self-driving cars, and robotics

Throughout 2024, models developed at NVIDIA Research set records across benchmarks in areas such as AI training and inference, route optimization, and autonomous driving.

NVIDIA cuOpt, an optimization AI microservice used to improve logistics, holds 23 world-record benchmarks. The NVIDIA Blackwell platform demonstrated world-class performance in the MLPerf industry benchmarks for AI training and inference.

In the self-driving vehicle space, Hydra-MDP, an end-to-end self-driving framework from NVIDIA Research, won first place in the End-to-End Driving at Scale track of the CVPR 2024 Autonomous Grand Challenge.

In robotics, FoundationPose, a unified foundation model for 6D object pose estimation and tracking, ranked first on the BOP leaderboard for model-based pose estimation of unseen objects.

Learn more about NVIDIA Research, home to hundreds of scientists and engineers around the world. NVIDIA research teams focus on topics such as AI, computer graphics, computer vision, self-driving cars, and robotics.