Since its introduction, the NVIDIA Hopper architecture has transformed the landscape of AI and high-performance computing (HPC), helping enterprises, researchers, and developers tackle the world’s most complex challenges with higher performance and greater energy efficiency.

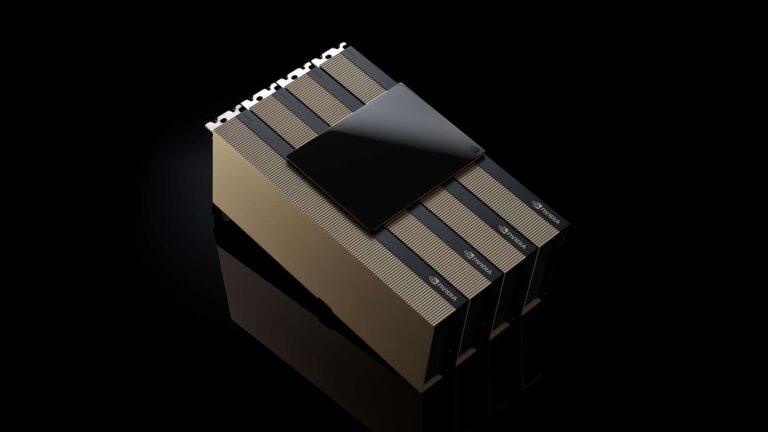

During the Supercomputing 2024 conference, NVIDIA announced the availability of the NVIDIA H200 NVL PCIe GPU, the newest addition to the Hopper family. The H200 NVL is ideal for organizations whose data centers need lower-power, air-cooled enterprise rack designs with flexible configurations that can accelerate every AI and HPC workload, regardless of size.

According to a recent survey, roughly 70% of enterprise racks are 20kW or below and use air cooling. This makes PCIe GPUs essential, because they allow nodes to be deployed at fine granularity, whether with one, two, four, or eight GPUs, so data centers can pack more computing power into less space. Enterprises can keep their existing racks and choose the number of GPUs that best suits their needs.

Enterprises can use the H200 NVL to accelerate AI and HPC applications while also improving energy efficiency through reduced power consumption. With 1.5x more memory and 1.2x more bandwidth than the NVIDIA H100 NVL, the H200 NVL lets enterprises fine-tune LLMs within a few hours and deliver up to 1.7x faster inference performance. For HPC workloads, performance improves by up to 1.3x over the H100 NVL and 2.5x over the NVIDIA Ampere architecture generation.

Complementing the raw power of the H200 NVL is NVIDIA NVLink technology. The latest generation of NVLink provides GPU-to-GPU communication 7x faster than fifth-generation PCIe, delivering higher performance for HPC, large language model inference, and fine-tuning.

The NVIDIA H200 NVL is paired with powerful software tools that enable enterprises to accelerate applications from AI to HPC. It comes with a five-year subscription to NVIDIA AI Enterprise, a cloud-native software platform for developing and deploying production AI. NVIDIA AI Enterprise includes NVIDIA NIM microservices for secure, reliable deployment of high-performance AI model inference.

Companies Harnessing the Power of H200 NVL

With the H200 NVL, NVIDIA provides enterprises with a full-stack platform to develop and deploy AI and HPC workloads.

Customers across industries are seeing significant impact on multiple AI and HPC use cases, such as visual AI agents and chatbots for customer service, trading algorithms for finance, medical imaging for improved anomaly detection in healthcare, pattern recognition for manufacturing, and seismic imaging for federal science organizations.

Dropbox uses NVIDIA accelerated computing for its services and infrastructure.

“Dropbox handles large volumes of content, which requires advanced AI and machine learning capabilities,” said Ali Zafar, VP of Infrastructure at Dropbox. “We’re exploring the H200 NVL to continually improve our services and bring more value to our customers.”

The University of New Mexico has used NVIDIA accelerated computing in a variety of research and academic applications.

“As a public research university, our commitment to AI keeps the university at the forefront of scientific and technological advances,” said Professor Patrick Bridges, director of the UNM Center for Advanced Research Computing. “Moving to the H200 NVL will let us accelerate a variety of applications, including data science initiatives, bioinformatics and genomics research, physics and astronomy simulations, climate modeling, and more.”

H200 NVL Available Across the Ecosystem

Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro are expected to offer a wide range of configurations supporting the H200 NVL.

Additionally, the H200 NVL will be available in platforms from Aivres, ASRock Rack, ASUS, GIGABYTE, Ingrasys, Inventec, MSI, Pegatron, QCT, Wistron, and Wiwynn.

Some systems are based on the NVIDIA MGX modular architecture, which allows computer makers to quickly and cost-effectively build a vast array of data center infrastructure designs.

Platforms featuring the H200 NVL will be available from NVIDIA’s global system partners starting in December. To complement availability from key global partners, NVIDIA is also developing an Enterprise Reference Architecture for H200 NVL systems.

The reference architecture incorporates NVIDIA’s expertise and design principles so that partners and customers can design and deploy high-performance AI infrastructure based on the H200 NVL at scale. It includes full-stack hardware and software recommendations, along with detailed guidance on optimal server, cluster, and network configurations. Networking is optimized for maximum performance with the NVIDIA Spectrum-X Ethernet platform.

NVIDIA technology will be on display on the showroom floor at SC24, taking place at the Georgia World Congress Center through November 22. To learn more, watch NVIDIA’s special address.

See notice regarding software product information.