The November Top500 supercomputer rankings being talked about this week at the SC24 conference in Atlanta are far more interesting than the June list, which was announced at the ISC24 conference in Hamburg, Germany, back in May. There has been some churn, and there are some interesting developments in the new machinery being installed.

The big news, of course, is that the long-awaited “El Capitan” system, built by Hewlett Packard Enterprise with AMD’s hybrid CPU-GPU compute engines, is now live and, as expected, is the new top flopper in the rankings. And it leads its rivals in the United States, as well as the exascale-class machines rumored to be running in China, by a wide margin.

A sizable portion of El Capitan (as of this writing, we still don’t know how big that portion is), comprising 43,808 AMD “Antares-A” Instinct MI300A devices by our calculations, was tested by Lawrence Livermore National Laboratory. The theoretical peak performance of the portion of El Capitan tested using HPL is 2,746.4 petaflops, which is well above the 2.3 exaflops to 2.5 exaflops we had expected. (Of course, this is for 64-bit precision floating point operations.) Sustained performance on the HPL test is 1,742 petaflops, for a computational efficiency of 63.4 percent. That is about the level of efficiency we expect when new accelerated systems come to market (the touchstone is 65 percent), and our theory is that El Capitan will post better results on the benchmark in subsequent rankings in 2025. The system is well on its way to being accepted by Lawrence Livermore.
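HPL computational efficiency is simply sustained performance (Rmax) divided by theoretical peak (Rpeak). The minimal Python sketch below reproduces the 63.4 percent figure from the two numbers quoted above and, for comparison, shows the Rmax the same peak would need to reach the 65 percent touchstone. The function name `hpl_efficiency` is our own, purely for illustration.

```python
# Figures for the tested portion of El Capitan, FP64, in petaflops.
RPEAK_PF = 2746.4  # theoretical peak
RMAX_PF = 1742.0   # sustained HPL performance


def hpl_efficiency(rmax: float, rpeak: float) -> float:
    """Return HPL computational efficiency as a percentage (Rmax / Rpeak)."""
    if rpeak <= 0:
        raise ValueError("rpeak must be positive")
    return 100.0 * rmax / rpeak


efficiency = hpl_efficiency(RMAX_PF, RPEAK_PF)
# Rmax the same peak would need to hit the 65 percent touchstone.
rmax_at_touchstone = 0.65 * RPEAK_PF

print(f"HPL efficiency: {efficiency:.1f}%")                     # HPL efficiency: 63.4%
print(f"Rmax at 65% efficiency: {rmax_at_touchstone:,.1f} PF")  # Rmax at 65% efficiency: 1,785.2 PF
```

On these numbers, closing the gap to the touchstone would take roughly another 43 petaflops of sustained HPL performance from the same tested portion.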

As a reminder, the MI300A was announced in December 2023 along with its sibling, the MI300X, which has eight GPU chiplets and no CPU cores. The MI300A has three chiplets with a total of 24 “Genoa” Epyc cores and six chiplets with Antares GPU streaming multiprocessors, running at 1.8 GHz. In the Cray EX system, all of the MI300A compute engines are linked together using HPE’s “Rosetta” Slingshot 11 Ethernet interconnect. All told, the tested portion of El Capitan has 1.05 million Genoa cores, and its GPU chiplets have just under 10 million streaming multiprocessors. That is a huge amount of concurrency to manage, but it is not unprecedented. The Sunway “TaihuLight” supercomputer at the National Supercomputing Center in Wuxi, China, which has been on the Top500 rankings since 2016 and is still the 15th most powerful machine in the world (at least among machines tested using HPL), has a total of 10.65 million cores.
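The core counts above follow from multiplying out the device count. This sketch takes the 24 CPU cores per device from the paragraph above and assumes 228 GPU compute units per MI300A, which is AMD’s published figure for the part (the text calls these streaming multiprocessors). The TaihuLight figure is the rounded 10.65 million quoted above.

```python
# Tested portion of El Capitan, per the figures in the text.
DEVICES = 43_808
CPU_CORES_PER_DEVICE = 24   # "Genoa" Epyc cores per MI300A
GPU_CUS_PER_DEVICE = 228    # assumption: AMD's published compute-unit count for MI300A

TAIHULIGHT_CORES = 10_650_000  # "10.65 million", rounded as in the text

cpu_cores = DEVICES * CPU_CORES_PER_DEVICE
gpu_units = DEVICES * GPU_CUS_PER_DEVICE
total_units = cpu_cores + gpu_units

print(f"CPU cores: {cpu_cores:,}")           # CPU cores: 1,051,392
print(f"GPU compute units: {gpu_units:,}")   # GPU compute units: 9,988,224
print(f"Total: {total_units:,}")             # Total: 11,039,616
print(f"vs. TaihuLight: {total_units / TAIHULIGHT_CORES:.2f}x")  # vs. TaihuLight: 1.04x
```

Counted this way, the raw concurrency of the tested portion of El Capitan is only a few percent higher than TaihuLight’s, which is the point of the comparison.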

After Lawrence Livermore’s briefing at SC24, we’ll be doing a separate deep dive into the architecture of the El Capitan machine, and we’ll be cross-linking to that story here.

Each Top500 list has a mix of old and new machines, and as new machines are tested using HPL and their owners submit their results, the less powerful machines from the previous list drop off and are no longer part of the Top500 universe, even though they are still in use. In addition, the list includes many machines in the United States, Europe, and China that do not do HPC simulation or modeling as their core business. This is because companies and their OEM partners want to game the list. Having HPL data on general purpose clusters is interesting, but it skews what is supposed to be a ranking of supercomputers. To be honest, we have long regarded only the top 50 machines as true supercomputers, and we have been looking for some way to make this ranking more useful.

Back in June, we decided to look at just the new entrants on the list, using them as a gauge of what is happening in HPC. We are breaking down the November 2024 rankings the same way to see what people have bought and tested recently. There are some interesting trends, and we would like to keep an eye on changes like this in the future.

The June 2024 rankings included 49 new machines on the Top500 list, with a total peak performance of 1,226.7 petaflops at 64-bit floating point precision across these new machines. Seven of them were new supercomputers that actually do HPC work and are based on Nvidia’s “Grace” Arm server CPUs and “Hopper” H100 GPU accelerators; they accounted for a total of 663.7 petaflops, or 54.1 percent of the capacity added to the June 2024 list. Systems pairing AMD Epyc processors with Nvidia GPUs accounted for another 8.1 percent of the new compute, and systems pairing Intel Xeon processors with Nvidia GPUs, installed between late November 2023 and early June 2024, accounted for a further 17.5 percent of the capacity tested using HPL. There were another 23 all-CPU machines, which are still required in many HPC environments for software compatibility, but their combined compute was only 12.1 percent of all new 64-bit flops.
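The June figures mix one absolute number (663.7 petaflops) with three percentages. This minimal sketch puts them on a common basis; because the published percentages are rounded, the back-calculated petaflops are approximations, and the “everything else” slice is simply whatever the four named categories do not cover.

```python
JUNE_2024_NEW_PEAK_PF = 1226.7  # FP64 peak across all 49 new machines

# Share of new peak capacity, in percent. Grace-Hopper is derived from its
# absolute figure; the others are the rounded percentages quoted in the text.
shares_pct = {
    "Grace-Hopper": 100.0 * 663.7 / JUNE_2024_NEW_PEAK_PF,
    "AMD Epyc + Nvidia GPU": 8.1,
    "Intel Xeon + Nvidia GPU": 17.5,
    "CPU-only": 12.1,
}
# Remainder not covered by the named categories.
shares_pct["everything else"] = 100.0 - sum(shares_pct.values())

for name, pct in shares_pct.items():
    approx_pf = JUNE_2024_NEW_PEAK_PF * pct / 100.0  # approximate, from rounded shares
    print(f"{name:<24} {pct:5.1f}%  ~{approx_pf:7.1f} PF")
```

The remainder comes out at about 8.2 percent of the new June capacity.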

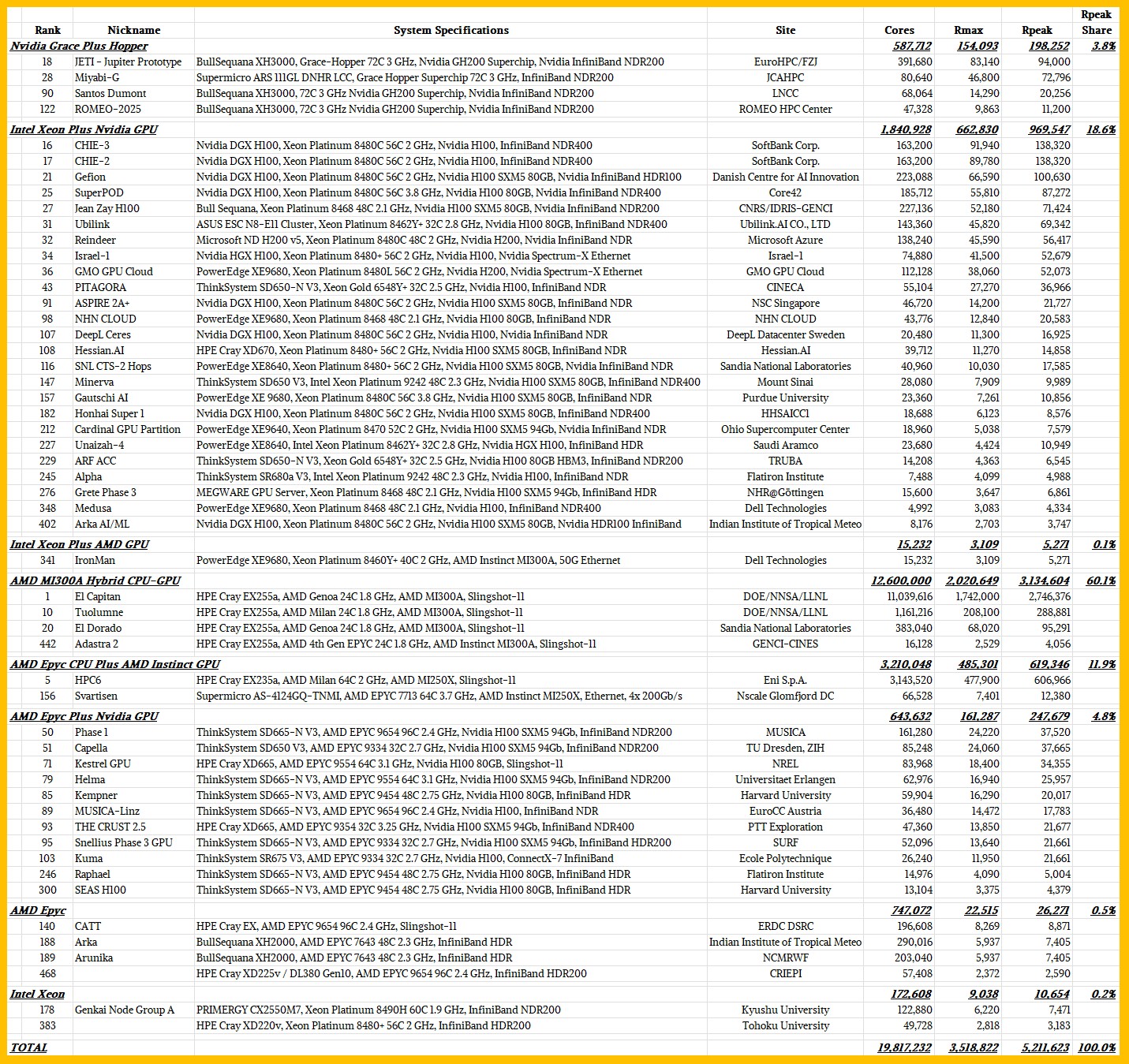

This time around, in the November 2024 Top500 rankings, AMD is the big winner when it comes to adding capacity to the HPC installed base. There are 61 new machines on the list, sorted here by compute engine architecture.

This time, there are only four new Grace-Hopper systems, and they are fairly modest in size, accounting for only 3.8 percent of the 5,211.6 petaflops of total peak performance across the new machines on the list.

However, there are 25 new machines with Intel Xeon CPUs as hosts and Nvidia GPUs as offload engines, which add up to 969.6 petaflops of compute, or 18.6 percent of the total new compute listed. Interestingly, Dell built a 5.3 petaflops machine for itself, nicknamed “IronMan,” that combines AMD Instinct MI300A accelerators with Intel Xeon CPUs. (Why?) There are also 11 machines with AMD Epyc CPU hosts and Nvidia GPU accelerators, delivering a total of 247.7 petaflops of peak performance. All told, the Nvidia GPU machines accounted for about 27 percent of the total compute added to the Top500 list in November 2024.

El Capitan and its four smaller siblings, all based on the MI300A hybrid compute engine, deliver 3,134.6 petaflops of FP64 performance, accounting for 60.1 percent of the new compute and making them the bright spot among the new additions to the current Top500 list. Another 619.3 petaflops joins the November list from two machines based on AMD CPUs paired with AMD MI250X GPUs, thanks in large part to the HPC6 machine at Eni SpA in Italy, which we wrote about in January and which is essentially a smaller clone of the “Frontier” supercomputer at Oak Ridge National Laboratory in the United States.

Adding all this up, AMD GPUs accounted for 72.1 percent of the new performance added in the November 2024 rankings.
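The November shares can be checked the same way against the 5,211.6 petaflops of new peak capacity. In this sketch, the Grace-Hopper petaflops are back-calculated from the rounded 3.8 percent share, and we assume Dell’s 5.3 petaflops IronMan machine is counted as an AMD GPU system; that assumption is what makes the AMD total come out at 72.1 percent rather than 72.0 percent.

```python
NOV_2024_NEW_PEAK_PF = 5211.6  # FP64 peak across all 61 new machines

# New peak capacity by category, in petaflops (figures from the text).
nvidia_pf = {
    "Grace-Hopper": 0.038 * NOV_2024_NEW_PEAK_PF,  # back-calculated from 3.8%
    "Intel Xeon + Nvidia GPU": 969.6,
    "AMD Epyc + Nvidia GPU": 247.7,
}
amd_gpu_pf = {
    "MI300A (El Capitan and siblings)": 3134.6,
    "AMD CPU + MI250X (HPC6 and one other)": 619.3,
    "Xeon + MI300A (Dell IronMan)": 5.3,  # assumption: counted as an AMD GPU machine
}


def share(pf: float) -> float:
    """Percent of the new peak capacity added in November 2024."""
    return 100.0 * pf / NOV_2024_NEW_PEAK_PF


amd_total = share(sum(amd_gpu_pf.values()))
nvidia_total = share(sum(nvidia_pf.values()))
print(f"AMD GPU share: {amd_total:.1f}%")        # AMD GPU share: 72.1%
print(f"Nvidia GPU share: {nvidia_total:.1f}%")  # Nvidia GPU share: 27.2%
```

Since the AMD GPU share alone is 72.1 percent, the Nvidia slice cannot exceed about 28 percent of the new capacity.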

Now let’s widen our view to the complete November Top500 list and take a look at all 209 accelerated systems on it. Here is how they break down in this beautiful treemap.

In the treemap above, the size of each box represents total sustained HPL performance.

The green region at the top left, dominated by El Capitan and Frontier, includes all machines that use a combination of AMD CPUs and GPUs. Nvidia Grace-Hopper machines are at the top right, and machines with various other Nvidia GPUs are in the blue, gray, and red boxes. Intel GPU machines (of which there are few) are in teal at the bottom right, and the burnt orange boxes are CPU-only systems.

Just for fun, we have broken down the 209 accelerated machines on the list by accelerator type and architecture, showing system count, peak teraflops, and total core count. Take a look:

Currently, only four systems (1.9 percent of the 209 accelerated machines) use Intel’s “Ponte Vecchio” Max GPU accelerator, but they account for 14 percent of the accelerated machines’ peak performance, the overwhelming majority of which is in the “Aurora” system at Argonne National Laboratory.

There are 183 machines using Nvidia GPUs on all types of hosts, accounting for 87.6 percent of the accelerated machines on the November 2024 list but only 40.3 percent of their total peak FP64 capacity. There are 19 machines that use AMD GPUs for the majority of their compute; they represent only 9.1 percent of the accelerated machines but 44.9 percent of their total peak FP64 capacity. Thanks to El Capitan, Frontier, HPC6, and 16 other machines, AMD beats Nvidia on this measure in the Top500 list.

Looking at all 500 machines on the November 2024 list, accelerated systems account for 41.8 percent of the machines, 83.4 percent of the total 17,705 petaflops of peak performance, and 55.4 percent of the 128.6 million total cores and streaming multiprocessors.
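The gap between system count and capacity is easier to see side by side. This sketch uses only the figures quoted above; it assumes the 14 percent Intel share is on the same basis as the Nvidia and AMD shares (percent of accelerated peak), and the absolute numbers it derives from rounded percentages are approximate.

```python
ACCELERATED = 209
LIST_SIZE = 500
TOTAL_PEAK_PF = 17_705
TOTAL_CORES_M = 128.6  # millions of cores plus streaming multiprocessors

# Accelerator family -> (system count, percent of accelerated FP64 peak)
families = {
    "Nvidia": (183, 40.3),
    "AMD": (19, 44.9),
    "Intel Ponte Vecchio": (4, 14.0),  # assumption: same basis as the others
}

for name, (count, peak_pct) in families.items():
    count_pct = 100.0 * count / ACCELERATED
    print(f"{name:<20} {count_pct:5.1f}% of systems, {peak_pct:5.1f}% of peak")

# List-wide view, derived from the rounded 83.4 and 55.4 percent shares.
accel_peak_pf = 0.834 * TOTAL_PEAK_PF
accel_cores_m = 0.554 * TOTAL_CORES_M
print(f"Accelerated share of list: {100.0 * ACCELERATED / LIST_SIZE:.1f}%")
print(f"Accelerated peak: ~{accel_peak_pf:,.0f} PF; "
      f"CPU-only: ~{TOTAL_PEAK_PF - accel_peak_pf:,.0f} PF")
print(f"Accelerated cores and SMs: ~{accel_cores_m:.1f} million")
```

Dividing each family’s capacity share by its system share is a rough measure of average machine size, and it is where AMD’s handful of very large systems stands out.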

Looking at the broader trends

Breaking through the exascale barrier was more difficult than many thought, primarily because of budget and power constraints rather than any underlying technical issue. The Chinese exascale machines (“Tianhe-3” and “OceanLight”) are not ranked because their owners have not submitted official HPL results to the Top500 organizers, but if you do not care about power consumption or the cost of the machines, fielding exascale machines years ago was not only possible, it was actually done. (Our best guess a year ago was that Tianhe-3’s FP64 peak performance was 2.05 exaflops and OceanLight’s was 1.5 exaflops.)

The bottom of the Top500 list has historically had a very hard time keeping up with the logarithmic growth curve we have come to expect from HPC systems. There is no reason to believe that the arrival of machines at 10 exaflops or more will lift the rest of the list along with them. If we want to get back on the logarithmic curve, we need to bring the price of machines down, and although the cost per unit of performance continues to fall, the price of machines keeps rising.

This time, to make it onto the Top500, a machine needed at least 2.31 petaflops on the HPL benchmark, and the entry point for the top 100 was 12.8 petaflops. Interestingly, the total HPL performance on the list is 11.72 exaflops, up from 8.21 exaflops in June 2024, 7.01 exaflops in November 2023, and 5.24 exaflops in June 2023. The big machines are driving that aggregate performance, but the smaller HPC centers are not. Across the 500 machines on the list, aggregate capacity is growing at a rate that more than doubles every two years. This may or may not coincide with the rise of HPC in the cloud; it is hard to say without data from the cloud builders.
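The aggregate HPL figures above imply a compound growth rate that can be worked out directly. This minimal sketch treats the four lists as evenly spaced six months apart (an approximation, since the release dates are not exactly half a year apart) and compounds between the first and last points only, rather than fitting a trend; on that basis the doubling time works out to roughly 1.3 years.

```python
import math

# Aggregate HPL (Rmax) across all 500 machines, in exaflops, per the text.
aggregate_ef = {
    "June 2023": 5.24,
    "November 2023": 7.01,
    "June 2024": 8.21,
    "November 2024": 11.72,
}

values = list(aggregate_ef.values())
years = 0.5 * (len(values) - 1)   # assumption: lists are six months apart
growth = values[-1] / values[0]   # total growth over the whole span

# Endpoint compound growth: annual factor, doubling time, and two-year factor.
annual_factor = growth ** (1.0 / years)
doubling_years = years * math.log(2) / math.log(growth)
two_year_factor = annual_factor ** 2

print(f"Growth over {years} years: {growth:.2f}x")
print(f"Annual growth factor: {annual_factor:.2f}x")
print(f"Doubling time: {doubling_years:.2f} years")
print(f"Implied growth per two years: {two_year_factor:.2f}x")
```

Endpoint compounding ignores the uneven steps in between (the jump to November 2024 is much larger than the one to June 2024), so a log-linear fit over all four points could give a somewhat different figure.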