Amazon is poised to roll out its latest artificial intelligence chips as Big Tech groups seek returns on their multibillion-dollar semiconductor investments and reduce their dependence on market leader Nvidia.

Executives at Amazon’s cloud computing division are spending millions on custom chips in the hope of increasing efficiency across its dozens of data centers, ultimately lowering costs both for Amazon itself and for Amazon Web Services customers.

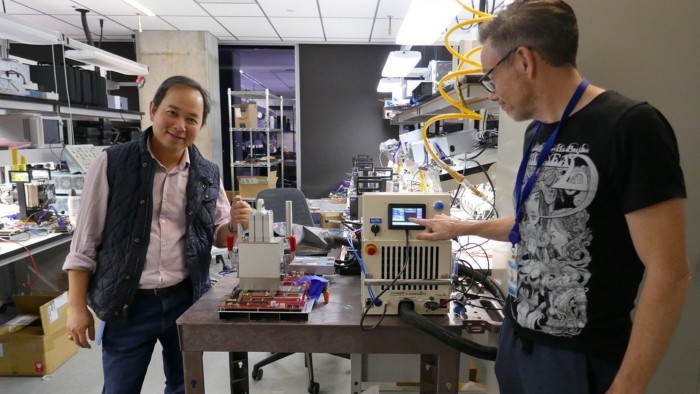

The effort is being led by Annapurna Labs, an Austin-based chip startup that Amazon acquired for $350 million in early 2015. Annapurna’s latest work will be unveiled next month, when Amazon announces widespread availability of Trainium 2, part of a line of AI chips aimed at training the largest models.

Trainium 2 is already being tested by OpenAI competitor Anthropic, which has $4 billion in backing from Amazon, as well as Databricks, Deutsche Telekom, Japan’s Ricoh, and Stockmark.

AWS and Annapurna’s goal is to take on Nvidia, one of the world’s most valuable companies due to its dominance of the AI processor market.

“We want to be the absolute best place to run Nvidia,” said Dave Brown, vice president of compute and networking services at AWS. “But at the same time, we think it’s healthy to have alternatives.” Amazon says Inferentia, its other line of specialized AI chips, which runs AI models to generate responses, is already 40 percent cheaper to run.

“When it comes to machine learning and AI, the prices [of cloud computing] tend to be much higher,” Brown said. “If you save 40 percent of $1,000, that doesn’t really influence your choice. But if you save 40 percent of tens of millions of dollars, it does.”

Amazon currently expects to spend about $75 billion in capital expenditures in 2024, with the majority going to technology infrastructure. In the company’s latest earnings call, CEO Andy Jassy said he expects spending to increase further in 2025.

This represents a sharp increase from 2023, when the company spent $48.4 billion for the year. All of the biggest cloud providers, including Microsoft and Google, are ramping up spending on AI, with little sign of slowing down.

Amazon, Microsoft, and Meta are all big customers of Nvidia, but they’re also designing their own data center chips to lay the foundation for what they hope will be a wave of AI growth.

“All the major cloud providers are moving aggressively towards a more verticalized and, if possible, homogenized and integrated [chip technology] stack,” said Futurum Group’s Daniel Newman.

“Everyone from OpenAI to Apple is trying to build their own chips,” Newman noted, as they seek “lower production costs, higher profit margins, increased availability, and more control.”

“It’s not just a chip issue, it’s a whole system issue,” said Rami Sinno, Annapurna’s director of engineering and a veteran of SoftBank’s Arm and Intel.

For Amazon’s AI infrastructure, this means building everything from the ground up, from the silicon wafers to the server racks they fit into, all powered by Amazon’s proprietary software and architecture. “It’s really hard to do what we do at scale. There aren’t that many companies that can do it,” Sinno said.


After starting out building a security chip for AWS called Nitro, Annapurna has developed several generations of Graviton, a low-power Arm-based central processing unit that offers an alternative to the traditional server workhorses from Intel and AMD.

“The big advantage for AWS is that its chips can use less power, potentially making its data centers a little more efficient,” said G Dan Hutcheson, an analyst at TechInsights. Whereas Nvidia’s graphics processing units are powerful general-purpose tools (in automotive terms, like a station wagon), Amazon can optimize its chips for specific tasks and services, like a compact car or a hatchback, he said.

But so far, AWS and Annapurna have done little to undermine Nvidia’s dominance in AI infrastructure.

Nvidia recorded $26.3 billion in revenue from AI data center chip sales in its second fiscal quarter of 2024. That is the same figure Amazon reported for its entire AWS division in its own second fiscal quarter, and only a relatively small portion of the AWS total can be attributed to customers running AI workloads on Annapurna’s infrastructure, according to Hutcheson.

When it comes to the raw performance of its AWS chips compared to Nvidia, Amazon avoids direct comparisons and does not submit its chips to independent performance benchmarks.


“Benchmarks are good for that initial stage of ‘Hey, should I even consider this chip?’” said Patrick Moorhead, a chip consultant at Moor Insights & Strategy. “But the real test is when you run them across multiple racks, set up together as a fleet.”

Moorhead said that, having scrutinized the company for years, he is confident Amazon’s claims of a fourfold performance improvement from Trainium 1 to Trainium 2 are accurate. But the performance figures may matter less than simply offering customers more choice.

“People appreciate all the innovations Nvidia has brought, but no one is happy that Nvidia has 90 percent market share,” he added. “This won’t last long.”