This is not investment advice. The author has no position in any stocks mentioned. Wccftech.com has a Disclosure and Ethics Policy.

According to a report in the Financial Times, Amazon is developing a custom artificial intelligence chip to reduce its dependence on NVIDIA. The company has already developed a variety of in-house processors to run data center workloads, and this effort builds on an investment in chip design startups that dates back to 2015. Amazon is expected to shed more light on its custom AI processor next month, as part of an announcement covering the company’s Trainium chip lineup.

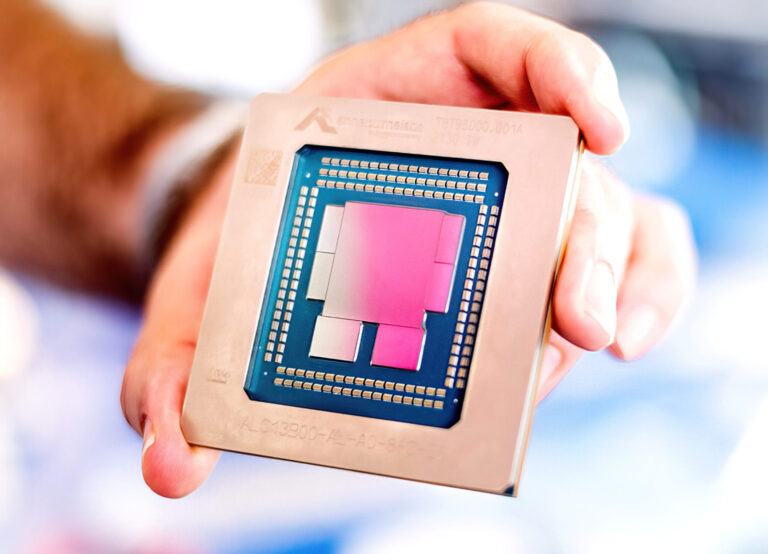

The chips were developed by Amazon’s Annapurna Labs and are used by Anthropic, a rival of Microsoft-backed OpenAI. Anthropic is Amazon’s primary AI partner and gives the e-commerce and cloud computing giant access to its Claude foundation models.

Amazon drives adoption of custom AI chips to lower costs and dependence on NVIDIA

Today’s report is just one of many demonstrating the push by major technology companies to reduce their reliance on NVIDIA for their most powerful artificial intelligence processors. NVIDIA GPUs lead the market and provide top performance for running AI workloads. The resulting high demand, combined with limited supply, makes them some of the most sought-after and expensive products in the world.

For Amazon, developing in-house AI chips is an effort to reduce its dependence on NVIDIA products and cut costs at the same time, the Financial Times reported. The company is no stranger to custom chip development. The acquisition of chip design startup Annapurna allows Amazon to consistently produce high-volume processors and reduce the cost of using AMD and Intel products for traditional data center workloads.

These chips, called Graviton processors, are complemented by Amazon’s custom AI processor called Trainium. Trainium is designed to work with large language models, and Amazon announced Trainium2 a year ago in November 2023.

According to the FT, Annapurna is also leading chip development efforts to reduce Amazon’s reliance on NVIDIA GPUs. The report shares few details about these chips, but notes that Amazon could provide insight about them at an event featuring the Trainium2 chips next month. Trainium2 was launched in 2023, but supply constraints have limited its adoption. The FT reports that Amazon’s AI partner Anthropic is using Trainium2.

Amazon’s chips are designed using technology from Taiwanese company Alchip and are manufactured by Taiwan Semiconductor Manufacturing Company (TSMC). Amazon shared last year that more than 50,000 AWS customers are using its Graviton chips.

In addition to Amazon, other giants such as Google parent Alphabet and Facebook owner Meta are also developing their own AI chips. Industry players such as Apple are using Google’s chips, and Meta announced the second-generation Meta Training and Inference Accelerator (MTIA) earlier this year. All of these efforts are aimed at reducing dependence on NVIDIA’s GPUs. Microsoft-backed OpenAI is also reportedly considering developing its own chips.

Earlier this month, Google announced its latest tensor processing unit (TPU) AI chip, Trillium. The company says these chips deliver four times faster AI training and three times faster inference than its previous chips.