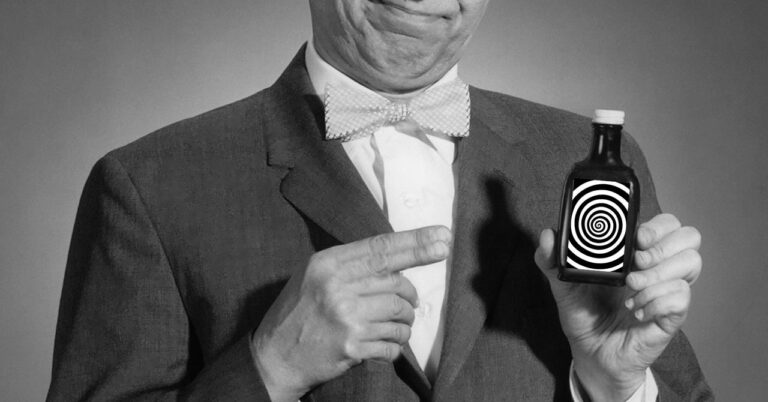

Arvind Narayanan, a computer science professor at Princeton University, is best known for calling out the hype surrounding artificial intelligence in his Substack newsletter, "AI Snake Oil," which he writes with his doctoral student, Sayash Kapoor. The two authors recently published a book based on their popular newsletter about AI's shortcomings.

But make no mistake: They’re not against the use of new technology. “Our message can easily be misconstrued as saying all AI is harmful or suspicious,” says Narayanan. Speaking to WIRED, he clarifies that his criticism isn’t directed at the software itself, but at the culprits who continue to spread misleading claims about artificial intelligence.

In AI Snake Oil, the culprits perpetuating the current hype cycle fall into three core groups: the companies that sell AI, the researchers who study AI, and the journalists who report on AI.

Hype Superspreaders

Companies that claim to use algorithms to predict the future are singled out as the most likely to be fraudulent. "When predictive AI systems are deployed, minorities and those already in poverty are often the first to suffer," Narayanan and Kapoor write in their book. For example, an algorithm once used by a Dutch local government to flag potential welfare fraud wrongly targeted women and immigrants who didn't speak Dutch.

The authors are also skeptical of companies that focus primarily on existential risks, such as those posed by artificial general intelligence (AGI), the idea of super-powerful algorithms that can outperform humans at work. That doesn't mean they scoff at the idea of AGI. "When I decided to become a computer scientist, being able to contribute to AGI was a big part of my own identity and motivation," Narayanan says. Their disagreement is with companies that prioritize long-term risk factors over the impact AI tools have on people today, a common refrain among researchers.

The authors argue that much of this hype and misunderstanding also comes down to shoddy, irreproducible research. "We find that in many fields, issues with data leaks have led to overly optimistic claims about AI's effectiveness," Kapoor says. Data leakage essentially means testing an AI on some of the same data it was trained on, akin to handing students the answers before giving them an exam.
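To make the exam analogy concrete, here is a toy Python sketch. It is an illustration only, not code from the book or from Narayanan and Kapoor's research, and every name in it (`nearest_neighbor_predict`, `accuracy`, the dataset) is hypothetical. It assumes a "model" that simply memorizes its training data (a 1-nearest-neighbor classifier) and labels that are pure coin flips, so no model can genuinely beat chance. Scored on questions it already saw, the model looks flawless; scored on genuinely new data, it performs at chance.

```python
import random

def nearest_neighbor_predict(train, x):
    """Hypothetical memorizer: copy the label of the closest training point."""
    # train is a list of (feature, label) pairs; features are single floats here.
    _, label = min(train, key=lambda pair: abs(pair[0] - x))
    return label

def accuracy(train, test):
    """Fraction of test points whose predicted label matches the true label."""
    hits = sum(nearest_neighbor_predict(train, x) == y for x, y in test)
    return hits / len(test)

rng = random.Random(0)
# Labels are coin flips unrelated to the feature, so there is nothing real to learn.
data = [(rng.random(), rng.randint(0, 1)) for _ in range(2000)]
train, fresh = data[:1000], data[1000:]

leaky_test = train[:200]   # "exam" questions the model already saw during training
honest_test = fresh[:200]  # genuinely unseen examples

leaky_score = accuracy(train, leaky_test)
honest_score = accuracy(train, honest_test)
print(f"leaky test accuracy:  {leaky_score:.2f}")   # 1.00: every answer was memorized
print(f"honest test accuracy: {honest_score:.2f}")  # expected to land near 0.50 (chance)
```

The gap between the two scores is the kind of overly optimistic result the authors describe: the leaky evaluation measures memorization, not the model's ability to handle cases it hasn't seen.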

While academics are portrayed in AI Snake Oil as making "textbook mistakes," journalists are more maliciously motivated and knowingly in the wrong, according to the Princeton researchers: "Many articles are paraphrased press releases laundered as news." Journalists who sidestep honest reporting to maintain relationships with big tech companies and protect their access to company executives are singled out as particularly harmful.

I think the criticism of access journalism is valid. In retrospect, I could have asked tougher or smarter questions in my interviews with the people behind AI's most important companies. But the authors may be oversimplifying the issue here. Just because a big AI company gave me access doesn't stop me from writing skeptical articles about its technology or investigative pieces that I know will upset it (yes, even when AI companies strike business deals with WIRED's parent company, as OpenAI did).

Sensationalized news stories can also mislead people about AI's true capabilities. Narayanan and Kapoor point to New York Times columnist Kevin Roose's 2023 chatbot transcript of an interaction with a Microsoft tool, "Bing's AI Chat: 'I Want to Be Alive 😈'," as an example of journalists stoking public confusion about sentient algorithms. "Roose isn't the only one who wrote articles like this," Kapoor says. "But I think when we see headline after headline about chatbots wanting to come to life, it can have quite an impact on the public psyche." Kapoor cites the 1960s ELIZA chatbot, whose users were quick to anthropomorphize the crude AI tool, as a prime example of the persistent urge to project human qualities onto mere algorithms.