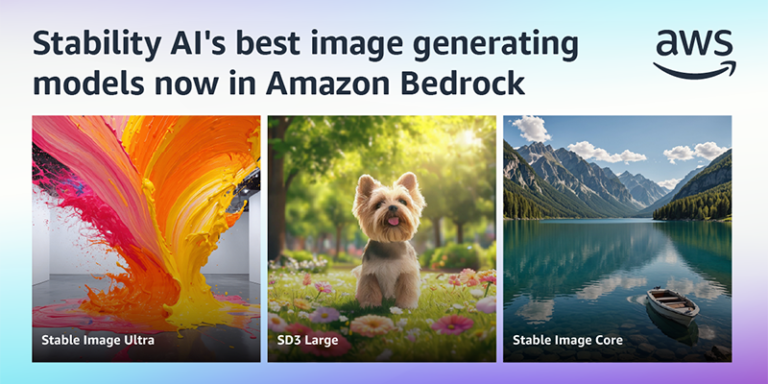

Starting today, Amazon Bedrock is enabling the use of three new text-to-image models from Stability AI – Stable Image Ultra, Stable Diffusion 3 Large, and Stable Image Core. These models provide significant performance improvements in multi-subject prompts, image quality, and typography, enabling you to quickly generate high-quality visuals for a variety of use cases, including marketing, advertising, media, entertainment, and retail.

These models excel at producing photorealistic images with exceptional detail, color, and lighting, and they address common challenges such as rendering realistic hands and faces. Their advanced prompt understanding lets them interpret complex instructions involving spatial reasoning, composition, and style.

The three new Stability AI models available on Amazon Bedrock cover a range of use cases:

Stable Image Ultra – Produces the highest quality photorealistic output ideal for professional print media and large format applications. Stable Image Ultra excels in rendering superior detail and realism.

Stable Diffusion 3 Large – Balances generation speed and output quality. Ideal for creating large volumes of high-quality digital assets such as websites, newsletters, and marketing materials.

Stable Image Core – Optimized for fast, affordable image generation, perfect for quickly iterating on concepts during ideation.

This table summarizes the main features of the three models.

| Features | Stable Image Ultra | Stable Diffusion 3 Large | Stable Image Core |
| --- | --- | --- | --- |
| Parameters | 16 billion | 8 billion | 2.6 billion |
| Input | Text | Text or image | Text |
| Typography | Tailored for large-scale display | Tailored for large-scale display | Versatility and readability across different sizes and applications |
| Visual aesthetics | Photorealistic image output | Highly realistic with finer attention to detail | Good rendering; not as detail-oriented |

One of the main improvements of Stable Image Ultra and Stable Diffusion 3 Large compared to Stable Diffusion XL (SDXL) is the quality of text in the generated images: the innovative Diffusion Transformer architecture implements two separate sets of weights for image and text, while still allowing information to flow between the two modalities, resulting in fewer spelling and typographical errors.

Here are some images created using these models.

Stable Image Ultra – Prompt: Photo, realistic, woman sitting in a field watching a kite fly in the sky, stormy sky, highly detailed, concept art, intricate, professional composition.

Stable Diffusion 3 Large – Prompt: Cartoon style illustration, male detective standing under street lamp, noir city, wearing trench coat, fedora hat, dark and rainy, neon signs, reflections on wet pavement, detailed moody lighting.

Stable Image Core – Prompt: A high quality, photorealistic, professional 3D rendering of a pair of white and orange sneakers, floating, hovering, levitating in the center.

Use cases for the new Stability AI models in Amazon Bedrock

Text-to-image models offer transformative potential for companies across industries, dramatically streamlining the creative workflows of marketing and advertising departments, enabling them to rapidly generate high-quality visuals for campaigns, social media content, and product mockups. By speeding up the creative process, companies can respond faster to market trends and reduce time to market for new initiatives. Additionally, these models provide instant visual representations of concepts that enhance brainstorming sessions and inspire further innovation.

E-commerce businesses can use AI-generated images to create diverse product showcases and personalized marketing materials at scale. In the field of user experience and interface design, these tools can quickly create wireframes and prototypes to accelerate the design iteration process. Adopting text-to-image models can bring significant cost savings, productivity gains, and competitive advantage in visual communications across various business functions.

Here are some use cases across different industries:

Advertising and marketing

Use Stable Image Ultra for luxury brand advertising and photorealistic product showcases, Stable Diffusion 3 Large for high quality product marketing images and print campaigns, and Stable Image Core for rapid A/B testing of visual concepts for social media advertising.

E-commerce

Use Stable Image Ultra for high-end product customization and made-to-order items, Stable Diffusion 3 Large for most product visuals across an e-commerce site, and Stable Image Core for quickly generating product images and keeping listings up to date.

Media and Entertainment

Use Stable Image Ultra for ultra-realistic key art, marketing materials, and game visuals; Stable Diffusion 3 Large for environment textures, character art, and in-game assets; and Stable Image Core for rapid prototyping and concept art exploration.

So let’s take a look at how these new models work in practice, first using the AWS Management Console, and then using the AWS Command Line Interface (AWS CLI) and AWS SDKs.

Using the new Stability AI models in the Amazon Bedrock console

In the Amazon Bedrock console, select Model Access from the navigation pane and enable access to the three new models in the Stability AI section.

Now that you have access, choose Image in the Playgrounds section of the navigation pane. For the model, choose Stability AI and then Stable Image Ultra.

For the prompt, enter the following:

A stylized image of a cute old steampunk robot holding a sign in its hands that reads “Stable Image Ultra in Amazon Bedrock” written in chalk.

Leave all other options at their default values and select Run. After a few seconds, you will see what you asked for. Here is the image:

Using Stable Image Ultra with the AWS CLI

While you’re still in the image playground in the console, choose the three little dots in the corner of the playground window, and then choose “Show API Requests.” This way, you can see the equivalent AWS Command Line Interface (AWS CLI) commands for the actions you performed in the console.

To use Stable Image Core or Stable Diffusion 3 Large, you can replace the model ID.
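As a minimal sketch of the same substitution in code, the helper below builds the keyword arguments for an `invoke_model` call from a short alias. The Stable Image Ultra ID is the one used in the SDK example later in this post; the other two IDs are my assumption of the launch identifiers and should be checked against the model list in the Amazon Bedrock console. The names `MODEL_IDS` and `build_request` are hypothetical.

```python
import json

# Model IDs keyed by a short, made-up alias. Only the "ultra" ID appears in
# this post; the other two are assumptions to verify in the Bedrock console.
MODEL_IDS = {
    "ultra": "stability.stable-image-ultra-v1:0",
    "sd3-large": "stability.sd3-large-v1:0",
    "core": "stability.stable-image-core-v1:0",
}


def build_request(alias: str, prompt: str) -> dict:
    """Return keyword arguments for bedrock_runtime.invoke_model()."""
    if alias not in MODEL_IDS:
        raise ValueError(f"Unknown model alias: {alias!r}")
    # Same request body shape as the SDK example in this post.
    body = {"prompt": prompt, "mode": "text-to-image"}
    return {"modelId": MODEL_IDS[alias], "body": json.dumps(body)}
```

With a Boto3 `bedrock-runtime` client, this could be used as `bedrock_runtime.invoke_model(**build_request("core", "a red kite"))`.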

The previous command outputs the image in Base64 format inside a JSON object in a text file.

To get the image in a single command, write the output JSON file to standard output and use the jq tool to extract the encoded image so that it can be decoded on the fly. The output is written to the img.png file. The complete command is:
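The same extract-and-decode step can be sketched in Python, which may help if jq is not installed. This assumes the response JSON has the shape described above, with the Base64-encoded image as the first entry of an `images` list; the function names are hypothetical.

```python
import base64
import json


def extract_first_image(response_json: str) -> bytes:
    """Decode the first Base64-encoded image in a model response."""
    payload = json.loads(response_json)
    images = payload.get("images") or []
    if not images:
        raise ValueError("Response contains no images")
    return base64.b64decode(images[0])


def save_image(response_json: str, path: str) -> None:
    """Write the decoded image bytes to the given path."""
    with open(path, "wb") as file:
        file.write(extract_first_image(response_json))
```

For example, after saving the command output to a text file, `save_image(open("output.txt").read(), "img.png")` would produce the image file, where `output.txt` is a placeholder for whatever file name you used.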

Using Stable Image Ultra with the AWS SDK

Here's how to use Stable Image Ultra with the AWS SDK for Python (Boto3). This simple application interactively asks for a text-to-image prompt and then calls Amazon Bedrock to generate the image.

```python
import base64
import boto3
import json
import os

MODEL_ID = "stability.stable-image-ultra-v1:0"

bedrock_runtime = boto3.client("bedrock-runtime", region_name="us-west-2")

print("Enter a prompt for the text-to-image model:")
prompt = input()

# Build the request body and invoke the model.
body = {
    "prompt": prompt,
    "mode": "text-to-image"
}
response = bedrock_runtime.invoke_model(modelId=MODEL_ID, body=json.dumps(body))

# The response body is a JSON document; the image is Base64-encoded.
model_response = json.loads(response["body"].read())
base64_image_data = model_response["images"][0]

# Find the first file name of the form img_<number>.png that is not taken.
i, output_dir = 1, "output"
if not os.path.exists(output_dir):
    os.makedirs(output_dir)
while os.path.exists(os.path.join(output_dir, f"img_{i}.png")):
    i += 1

image_data = base64.b64decode(base64_image_data)

image_path = os.path.join(output_dir, f"img_{i}.png")
with open(image_path, "wb") as file:
    file.write(image_data)

print(f"The generated image was saved to {image_path}")
```

The application writes the resulting image to an output directory, which it creates if it does not exist. To avoid overwriting existing files, the code looks for the first available file name in the img_<number>.png format.
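The application above assumes every request succeeds. As a hedged sketch of a more defensive version, the helper below checks the response before decoding. It assumes the response also carries a `finish_reasons` list whose entries are null on success and a descriptive string otherwise; that field is not shown in this post, so confirm it against the model's request and response reference. The function name is hypothetical.

```python
import json


def first_image_or_error(response_body: str) -> str:
    """Return the first Base64 image, or raise if generation did not succeed.

    Assumes a "finish_reasons" list whose entries are null (None) on
    success; this field is an assumption, not taken from the post.
    """
    payload = json.loads(response_body)
    reasons = payload.get("finish_reasons") or [None]
    if reasons[0] is not None:
        raise RuntimeError(f"Image generation failed: {reasons[0]}")
    images = payload.get("images") or []
    if not images:
        raise RuntimeError("Response contains no images")
    return images[0]
```

In the application, this would replace the direct `model_response["images"][0]` lookup, taking the raw string read from `response["body"]` as input.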

Further examples of how to use Stable Diffusion models can be found in the AWS Documentation Code Library.

Customer testimonials

Learn from Ken Hoge, Director of Global Alliances at Stability AI, how Stable Diffusion models are transforming industries from text to images, video, audio, and 3D, and how Amazon Bedrock is empowering customers with an all-in-one, secure, and scalable solution.

Join Nicolette Han, Product Owner at Stride Learning, as they step into a world where reading comes alive. With the support of Amazon Bedrock and AWS, Stride Learning’s Legend Library is using AI to create engaging, safe illustrations for children’s stories, transforming the way young people engage with and understand literature.

What you need to know

The new Stability AI models, Stable Image Ultra, Stable Diffusion 3 Large, and Stable Image Core, are available today in Amazon Bedrock in the US West (Oregon) AWS Region. With this launch, Amazon Bedrock offers a broader range of solutions to enhance creativity and accelerate content generation workflows. To understand the cost for your use case, see the Amazon Bedrock pricing page.

For more information about Stable Diffusion 3, see the Stable Diffusion 3 research paper, which describes the underlying technology in detail.

To get started, check out the Stability AI Models section of the Amazon Bedrock User Guide, and to see how others are using generative AI in their solutions and learn with in-depth technical content, visit community.aws.

– Danilo