BLOOMINGTON, Ind. — Funded by a $7.5 million grant from the U.S. Department of Defense, Indiana University researchers will lead a multi-institutional team of experts in fields including information science, psychology, communications and folklore to evaluate the role artificial intelligence plays in intensifying the influence of online communications, including misinformation and extremist messages.

YY Ahn. Photo by Anna Powell Denton, Indiana University.

The project is one of 30 recently funded by the department’s Interdisciplinary University Research Initiative, which supports defense-related basic research projects.

“The proliferation of misinformation and extremist messages poses a significant societal threat,” said lead investigator Yong-Yeol Ahn, a professor at Indiana University’s Rady School of Information Sciences, Computing and Engineering in Bloomington. “Now, AI introduces the potential to mine data about individuals and quickly generate targeted messages that appeal to them. In other words, we’re applying big data to the individual, which has the potential to create even greater disruption than we’ve seen before.”

He said insights from research into the interplay between AI, social media and online misinformation could help governments counter foreign influence on election campaigns and radicalization.

The five-year effort brings together experts from a wide range of fields, including psychology and cognitive science, communications, folklore and narrative, artificial intelligence and natural language processing, complex systems and network science, and neurophysiology. All six IU researchers on the project are from the Rady School and are affiliated with IU’s Social Media Observatory. Other collaborators include media experts from Boston University, psychologists from Stanford University, and computational ethnographers from the University of California, Berkeley.

Specifically, Ahn said, the project will explore how a sociological concept called “resonance” affects people’s receptivity to certain messages. Resonance refers to the idea that people’s opinions are more strongly influenced by material that resonates with them, either through emotional content or through a narrative framework that appeals to their cognitive biases and existing beliefs, such as political ideologies, religious beliefs or cultural norms.

Ahn added that resonance can be used to craft messages that bridge gaps between groups as well as messages that promote polarization, and that AI’s ability to rapidly generate text-, image- and video-based content could increase the power of those messages, for better or worse, by tailoring them to people on a personal level.

“This is a basic science project. Everything we do is publicly available,” Ahn said, “but it has many potential applications, from understanding the role of AI in misinformation and disinformation campaigns, such as foreign influence on elections, to topics like how to foster trust in AI in the same way that a pilot trusts the reliability of an AI navigation system. There are many important questions about AI that depend on understanding the intersection of AI and basic psychological theory.”

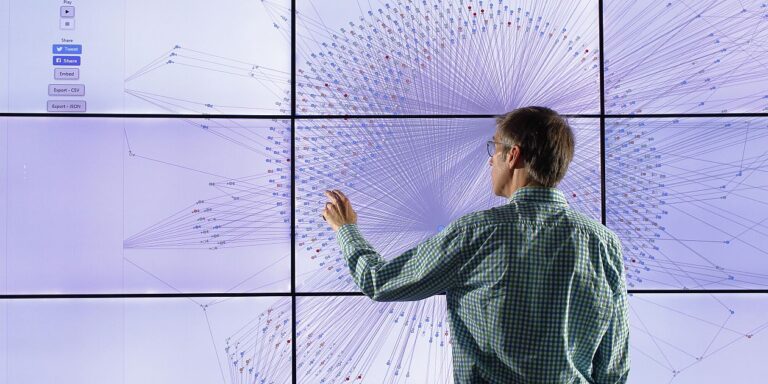

He added that the team will also use AI techniques to support its research. Using AI to create “model agents” (virtual people who share information and react to messages within a simulation) will allow the researchers to more accurately model the flow of information between groups and the impact of that information on the “people” in the model, he said.

He also said that the team plans to use heart rate monitors and other tools to study real people’s physical responses to online information, both AI-generated and non-AI-generated, to better understand the effects of “resonance.”

“There have been a lot of great developments in the model agent field over the last few years,” Ahn said.

Other researchers have successfully created model agents that, for example, “debate” each other in a virtual space, and have measured the effect of those debates on the agents’ simulated opinions.
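For readers curious what such a simulation can look like in its simplest form, the sketch below uses a classic bounded-confidence opinion model (in the style of Deffuant and colleagues), not the IU-led team's method or any specific system mentioned here. Each simulated agent holds one opinion between -1 and 1; when two randomly chosen agents "debate," they move toward each other only if their views are already close. The function name `simulate_debates` and the parameters `tolerance` and `step` are illustrative assumptions.

```python
import random


def simulate_debates(opinions, rounds=2000, tolerance=0.3, step=0.25, seed=0):
    """Toy bounded-confidence model (illustrative only, not the team's model).

    opinions:  starting opinions, one number per simulated agent
    tolerance: two agents influence each other only if their opinions
               differ by less than this amount
    step:      fraction of the gap each agent closes after a "debate"
    """
    rng = random.Random(seed)
    opinions = list(opinions)  # work on a copy; leave the input untouched
    for _ in range(rounds):
        # Pick two distinct agents at random to "debate".
        i, j = rng.sample(range(len(opinions)), 2)
        gap = opinions[j] - opinions[i]
        if abs(gap) < tolerance:
            # Both agents move toward each other by the same amount,
            # so the group's average opinion is unchanged.
            opinions[i] += step * gap
            opinions[j] -= step * gap
    return opinions


if __name__ == "__main__":
    rng = random.Random(1)
    start = [rng.uniform(-1, 1) for _ in range(50)]
    end = simulate_debates(start)
    print("opinion range before:", min(start), max(start))
    print("opinion range after: ", min(end), max(end))
```

In a model like this, a small `tolerance` tends to leave agents split into separate opinion clusters, while a large one tends to pull them toward consensus. Real research models, including the belief-network approach described in this article, are considerably richer.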

Ahn added that the IU-led team’s research will be “significantly different” from other attempts to model belief systems by simulating people’s opinions. The project will apply “a complex network of interacting beliefs and concepts integrated with social contagion theory” to create a “holistic and dynamic model of multi-layered belief resonance.” The approach is outlined in a paper published in the journal Science Advances.

The result would be a system that more closely resembles the complexities of reality, where people’s opinions are based not simply on party affiliation but on a complex intersection of belief systems and social dynamics. For example, Ahn said, a person’s attitudes toward their social groups and the health care industry may better predict opinions about vaccine safety than political ideology.

The IU co-principal investigators on the project are Assistant Professor Jisun Ahn, Professor Alessandro Flamini, Assistant Professor Gregory Lewis and Rady Distinguished Professor Filippo Menzer, all from the Rady School in Bloomington. Lewis is also an assistant research scientist at the Kinsey Institute. Associate Professor Haeun Kwak, also from the Rady School, serves as a senior staff member.

Other co-principal investigators on the grant are Betsy Grabe of Boston University, Madalina Vlaseanu of Stanford University, and Timothy Tangerlini of the University of California, Berkeley. The research project also involves several doctoral and undergraduate students from Indiana University.