OKLAHOMA CITY (AP) — As police Sergeant Matt Gilmore and his police dog, Gunner, searched for a group of suspects for nearly an hour, his body camera recorded every word uttered and every bark.

Typically, the Oklahoma City police sergeant would grab his laptop and spend another 30 to 45 minutes writing up a report on the search. But this time, he had artificial intelligence write the first draft.

Drawing on all the sounds and radio communication picked up by the microphone attached to Gilmore’s body camera, the AI tool generated a report in eight seconds.

“It was much better than the report I wrote, 100 percent accurate, and it flowed well,” Gilmore said. The report even documented something he didn’t remember hearing: another officer mentioning the color of the car the suspect fled in.

The Oklahoma City Police Department is one of a handful of departments experimenting with an AI chatbot to write first drafts of incident reports. While officers who have tried it have enthusiastically embraced the time-saving technology, some prosecutors, police watchdog groups, and legal scholars worry it could change the foundational document of the criminal justice system that determines who gets charged and who gets jailed.

Built with the same technology as ChatGPT and sold by Axon, a company best known for developing Tasers and being a major U.S. supplier of body cameras, the product could be what Gilmore calls another “game changer” in policing.

“People become police officers because they want to do police work, and spending half their day entering data is a tedious part of the job they hate,” said Axon founder and CEO Rick Smith, who added that the new AI product, called Draft One, has received “the most positive response” of any product the company has launched so far.

“Certainly, there are concerns,” Smith added. In particular, district attorneys who prosecute criminal cases want to make sure officers, and not just AI chatbots, are responsible for writing the reports, since they may have to testify in court about incidents they witnessed, he said.

“The last thing the prosecution wants is to have an officer on the stand saying, ‘An AI wrote that, I didn’t write that,'” Smith said.

AI technology is nothing new to police agencies, which have deployed algorithmic tools to read license plates, recognize suspects’ faces, detect gunshots and predict where crimes are likely to occur. Many of those applications have come with privacy and civil rights concerns, and with attempts by legislators to put safeguards in place. But the introduction of AI-generated police reports is so new that there are few, if any, guidelines for their use.

Concerns that society’s racial biases and prejudices will be built into AI technology are just part of why aurelius francisco, a community activist in Oklahoma City, finds the new tool, which he learned about from The Associated Press, “deeply troubling.” francisco prefers to write his name in lowercase as a tactic to resist professionalism.

“The fact that this technology is being used by the same company that supplies police with Tasers is disturbing enough,” said francisco, co-founder of the Foundation for Liberating Minds in Oklahoma City.

He said automating such reports “eases police’s ability to harass, surveil and abuse our communities, making police jobs easier but making lives harder for Black and brown people.”

Before testing the tool in Oklahoma City, police officials showed it to local prosecutors, who advised them to use caution before using it in serious criminal cases. For now, the tool is only being used to report minor incidents that don’t result in an arrest.

“So we’ve got no arrests, no felonies, no violent crimes,” said Capt. Jason Bussert, director of information technology for the 1,170-officer Oklahoma City Police Department.

That’s not the case in Lafayette, Indiana, where Police Chief Scott Galloway told The Associated Press that all of his officers can use Draft One for any incident and that it has been “amazingly well-received” since a pilot began earlier this year.

Nor is it the case in Fort Collins, Colorado, where police Sergeant Robert Younger said officers are free to use the technology for any type of call, though they found it didn’t work well when patrolling the city’s downtown bar district because of “overwhelming noise.”

In addition to using AI to analyze and summarize audio recordings, Axon experimented with computer vision to summarize what is “seen” in video footage, but quickly realized that technology wasn’t ready yet.

“Given all the sensitive issues around policing, race and other identities of those involved, I think that really needs to be addressed before we deploy it,” said Axon CEO Smith, who described some of the tested responses as not “overtly racist” but insensitive in other ways.

Those experiments led Axon to focus on audio in the product it unveiled at its annual company conference for law enforcement officials in April.

The technology relies on the same generative AI model that powers ChatGPT, developed by San Francisco-based OpenAI. OpenAI is a close business partner of Microsoft, which is Axon’s cloud computing provider.

“We use the same underlying technology as ChatGPT, but we have access to more knobs and dials than actual ChatGPT users have,” said Noah Spitzer-Williams, who manages AI products at Axon. Turning down the “creativity dial” makes the model stick to the facts and “it doesn’t exaggerate or hallucinate as much as it would if we used ChatGPT alone,” he said.

Axon didn’t disclose how many police departments are using its technology. It’s not the only vendor: startups such as Policereports.ai and Truleo are pitching similar products. But Axon’s deep relationships with the departments that buy its Tasers and body cameras mean experts and law enforcement officials expect AI-generated reports to become more prevalent in the coming months and years.

Before that happens, legal scholar Andrew Ferguson would like to see a more public debate about the benefits and potential harms. For one, the large language models behind AI chatbots are prone to making up false information, a problem known as hallucination, which could add convincing and hard-to-detect falsehoods to a police report.

“I worry that automation and the ease of the technology will cause officers to be less careful with their writing,” said Ferguson, a law professor at American University who is writing the first law review article on the emerging technology.

Ferguson said police reports are important in determining whether an officer’s suspicions “justify the loss of someone’s liberty,” and they are sometimes the only testimony a judge sees, especially in minor offense cases.

Ferguson said human-generated police reports also have flaws, but it’s an open question as to which is more reliable.

Some officers who have tried it say it’s already changed the way they respond to reported crimes: Because officers narrate what’s happening, the camera better captures what they want to document.

As the technology becomes more widespread, Bussert predicts officers will “use more and more words” to describe what they see.

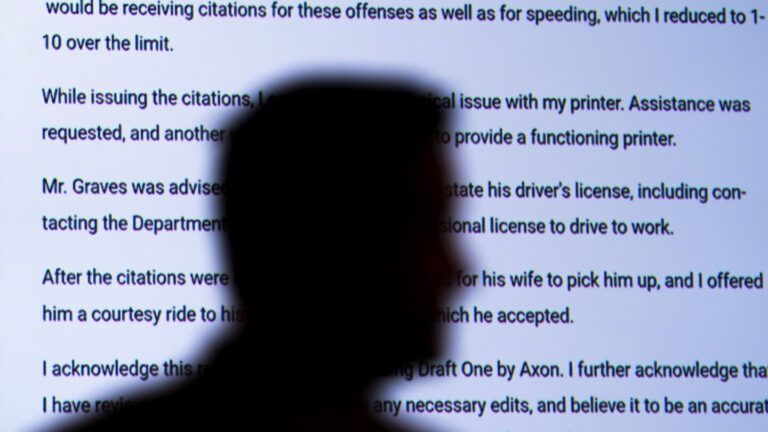

After Bussert loaded a traffic stop video into the system and pressed a button, the program produced a narrative-style report in conversational language, complete with dates and times, just as an officer would have typed it from his notes.

“It was literally a matter of seconds,” Gilmore said, “and I was like, ‘I don’t need to change anything.'”

At the end of the report, the officer must check a box indicating that the report was generated using AI.

—————

O’Brien reported from Providence, Rhode Island.

—————

The Associated Press and OpenAI have a licensing and technology agreement that gives OpenAI access to part of the AP’s text archive.