Editor’s note: This post is part of our “AI Decoded” series, which demystifies AI by making the technology more accessible and introducing new hardware, software, tools, and acceleration for RTX PC and workstation users.

This week at Gamescom, NVIDIA announced that NVIDIA ACE, a suite of technologies that brings digital humans to life with generative AI, now includes the company’s first on-device small language model (SLM) powered locally by RTX AI.

Called Nemotron-4 4B Instruct, the model offers improved role-play, retrieval-augmented generation and function-calling capabilities, so game characters can more intuitively understand player instructions, respond to gamers, and take more precise and relevant actions.

Available as an NVIDIA NIM microservice that game developers can deploy in the cloud or on device, the model is optimized for low memory usage and fast response times, and lets developers take advantage of more than 100 million GeForce RTX-powered PCs and laptops and NVIDIA RTX-powered workstations.

Benefits of SLMs

The accuracy and performance of an AI model depend on the size and quality of the dataset used to train it. Large language models are trained on vast amounts of data, which are typically general-purpose and contain more information than most uses require.

An SLM, on the other hand, focuses on a specific use case, so it can provide more accurate responses more quickly, even with less data, which is a key element of natural conversation with a digital human.

Nemotron-4 4B was first distilled from the larger Nemotron-4 15B LLM. In distillation, a smaller model, called the “student,” learns to mimic the output of a larger model, called the “teacher.” During this process, noncritical outputs of the student model are pruned or removed, reducing the model’s parameter count. The SLM is then quantized, which reduces the precision of the model’s weights.
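To make the student and teacher idea concrete, here is a minimal sketch of a distillation loss in plain Python. It is not NVIDIA's training recipe: the temperature, the made-up logits and the function names are all illustrative assumptions. It only shows the generic technique, in which the student is penalized by the KL divergence between the teacher's softened output distribution and its own.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature softens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical next-token scores over a three-word vocabulary.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]   # nearly agrees with the teacher
far_student = [0.5, 1.0, 4.0]     # prefers a different token

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
print(f"close student: {loss_close:.4f}, far student: {loss_far:.4f}")
```

A student whose outputs track the teacher's gets a loss near zero, while one that disagrees gets a larger loss. Training pushes the student toward the former.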

With fewer parameters and lower precision, Nemotron-4 4B has a smaller memory footprint and a faster time to first token (how quickly a response begins) than the larger Nemotron-4 LLM, while maintaining a high level of accuracy through distillation. The smaller memory footprint means games and apps that integrate the NIM microservice can run locally on many more of the GeForce RTX AI PCs and laptops and NVIDIA RTX AI workstations that consumers own today.
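The effect of parameter count and precision on memory can be estimated with simple arithmetic. The sketch below is a rough, weights-only estimate that ignores activations and caches. The parameter counts are read off the model names, and the bit widths are assumptions, since the post does not say which precision the shipped model uses. The second half shows a generic symmetric 8-bit quantization of a few made-up weights, purely to illustrate what "reducing precision" means.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    return [round(w / scale) for w in weights], scale

def dequantize(q_weights, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in q_weights]

# Assumed sizes: ~15 billion and ~4 billion parameters.
for label, params, bits in [("15B @ 16-bit", 15e9, 16),
                            ("4B  @ 16-bit", 4e9, 16),
                            ("4B  @ 4-bit ", 4e9, 4)]:
    print(f"{label}: {weight_memory_gb(params, bits):.1f} GB")

weights = [0.42, -1.27, 0.05, 0.9]          # made-up example weights
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Under these assumptions, the weights shrink from roughly 30 GB to 8 GB by reducing parameters, and further as precision drops, at the cost of a small rounding error per weight.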

This new, optimized SLM was also built with instruction tuning, a technique that fine-tunes models on instructional prompts so they perform specific tasks better. This can be seen in Mecha BREAK, a video game in which players can converse with a mechanic game character and instruct it to switch and customize mechs.
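As an illustration of how a game might act on a function call produced by an instruction-tuned model, here is a minimal sketch. The JSON shape, the function names (`switch_mecha`, `set_paint_color`) and the sample model outputs are hypothetical. They are not taken from Mecha BREAK or from any ACE API.

```python
import json

def switch_mecha(name):
    return f"Switched to mecha '{name}'."

def set_paint_color(color):
    return f"Paint color set to {color}."

# Allow-list: only functions registered here can be triggered by model output.
GAME_FUNCTIONS = {"switch_mecha": switch_mecha, "set_paint_color": set_paint_color}

def dispatch(model_output):
    """Parse a JSON function call and run it; reject anything unexpected."""
    try:
        call = json.loads(model_output)
        handler = GAME_FUNCTIONS[call["name"]]
        return handler(**call["arguments"])
    except (json.JSONDecodeError, KeyError, TypeError):
        # Malformed JSON, unknown function, or wrong arguments.
        return "Sorry, I can't do that."

# Pretend the model turned "swap me into the Falcon" into this call.
print(dispatch('{"name": "switch_mecha", "arguments": {"name": "Falcon"}}'))
print(dispatch('{"name": "delete_save", "arguments": {}}'))
```

Routing model output through an explicit allow-list keeps the character limited to actions the game designer has approved, even if the model produces an unexpected call.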

ACEs Up

ACE NIM microservices let developers deploy cutting-edge generative AI models through the cloud or on RTX AI PCs and workstations to bring AI to their games and applications. With them, non-playable characters (NPCs) can dynamically interact and converse with players in-game in real time.

ACE consists of key AI models for speech-to-text, language, text-to-speech and facial animation, and is modular, allowing developers to choose the NIM microservices they need for each element of a particular process.

NVIDIA Riva Automatic Speech Recognition (ASR) processes a user’s spoken words and uses AI to produce highly accurate transcriptions in real time. The technology uses GPU-accelerated multilingual speech and translation microservices to build a fully customizable conversational AI pipeline. Other supported ASRs include OpenAI’s Whisper, an open-source neural net that approaches human-level robustness and accuracy in English speech recognition.

Once converted to digital text, the transcription is sent to an LLM (such as Google’s Gemma, Meta’s Llama 3, or NVIDIA Nemotron-4 4B), which starts generating a response to the user’s original voice input.

Text-to-speech, another of Riva’s technologies, then generates the voice response. ElevenLabs’ proprietary AI voice technology is also supported and is being demoed as part of ACE.

Next, NVIDIA Audio2Face (A2F) generates facial expressions that can be synchronized with speech in many languages. This microservice enables digital avatars to express dynamic, realistic emotions that can be streamed live or baked in during post-processing.

The AI network automatically animates face, eyes, mouth, tongue and head movements to match the range and intensity level of the emotion you select, and A2F can also automatically infer emotions directly from audio clips.

Finally, the complete character or digital human is animated in a renderer such as Unreal Engine or the NVIDIA Omniverse platform.
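The stages described above can be sketched as a chain of swappable components. In this minimal sketch, the stub functions stand in for real services (an ASR such as Riva or Whisper, an LLM, a text-to-speech engine, and Audio2Face). None of the class, field, or function names are ACE APIs. They are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class CharacterPipeline:
    """Chains the four stages; each is a plain callable so it can be swapped."""
    speech_to_text: Callable[[bytes], str]
    language_model: Callable[[str], str]
    text_to_speech: Callable[[str], bytes]
    facial_animation: Callable[[bytes], List[str]]

    def respond(self, player_audio: bytes):
        transcript = self.speech_to_text(player_audio)
        reply_text = self.language_model(transcript)
        reply_audio = self.text_to_speech(reply_text)
        animation = self.facial_animation(reply_audio)
        return reply_text, reply_audio, animation  # handed to the renderer

# Stub stages: real ones would call a local or cloud microservice.
def fake_asr(audio):
    return audio.decode("utf-8")

def fake_llm(text):
    return f"You said: {text}"

def fake_tts(text):
    return text.encode("utf-8")

def fake_a2f(audio):
    return ["jaw_open"] * len(audio.split())  # one pose per spoken word

pipeline = CharacterPipeline(fake_asr, fake_llm, fake_tts, fake_a2f)
text, audio, frames = pipeline.respond(b"swap my mecha")
print(text, len(frames))
```

Because each stage is just a callable with a fixed input and output type, replacing one speech recognizer or language model with another does not affect the rest of the chain, which is the point of the modular design.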

AI That’s NIMble

In addition to modular support for a range of NVIDIA-powered and third-party AI models, ACE enables developers to run inference for each model in the cloud or locally on RTX AI PCs and workstations.

The NVIDIA AI Inference Manager software development kit enables hybrid inference based on different needs, including experience, workload, and cost. It streamlines the deployment and integration of AI models for PC application developers by pre-configuring the PC with the required AI models, engines, and dependencies. Apps and games can seamlessly scale inference from the PC or workstation to the cloud.
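The kind of decision that hybrid inference involves can be sketched as a small routing function. This is not the AI Inference Manager SDK's API. The `DeviceState` fields, the `choose_backend` function and the memory figures are hypothetical, chosen only to show how an app might weigh local capacity against cloud availability.

```python
from dataclasses import dataclass

@dataclass
class DeviceState:
    free_vram_gb: float      # GPU memory currently available
    cloud_reachable: bool    # whether a cloud endpoint can be used

def choose_backend(model_vram_gb, device, prefer_local=True):
    """Return 'local' or 'cloud' for one model, or raise if neither can serve it."""
    fits_locally = device.free_vram_gb >= model_vram_gb
    if fits_locally and (prefer_local or not device.cloud_reachable):
        return "local"
    if device.cloud_reachable:
        return "cloud"
    raise RuntimeError("model does not fit locally and the cloud is unreachable")

# A small model fits on the GPU; a larger one falls back to the cloud.
laptop = DeviceState(free_vram_gb=6.0, cloud_reachable=True)
print(choose_backend(2.0, laptop))    # small model
print(choose_backend(30.0, laptop))   # large model
```

A real policy would also weigh latency targets, the current game workload, and cost, which the post lists as inputs to the hybrid decision.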

ACE NIM microservices run locally on RTX AI PCs and workstations as well as in the cloud. Microservices currently running locally include Audio2Face in the Covert Protocol tech demo, and the new Nemotron-4 4B Instruct and Whisper ASR in Mecha BREAK.

To Infinity and Beyond

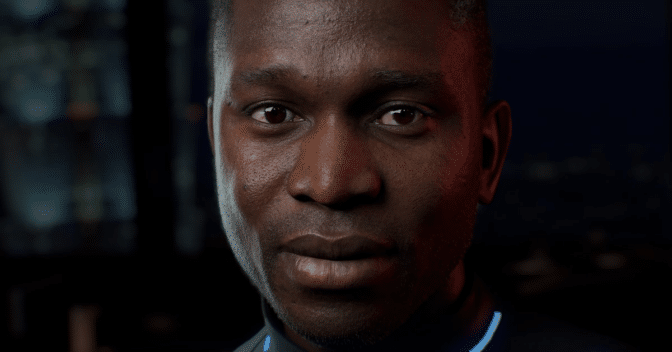

Digital humans are much more than in-game NPCs. At last month’s SIGGRAPH conference, NVIDIA previewed “James,” an interactive digital human that can connect with people using emotion, humor, and more. James is based on a customer service workflow using ACE.

Decades of change in the way humans and technology communicate have ultimately led to the birth of digital humans. The future of human-computer interfaces will have friendly faces and will require no physical input.

Digital humans facilitate more engaging and natural interactions. According to Gartner, by 2025, 80% of conversational services will incorporate generative AI, and 75% of customer-facing applications will incorporate conversational AI with empathy. Beyond gaming, digital humans will transform multiple industries and use cases, including customer service, healthcare, retail, telepresence, and robotics.

Users can get a glimpse of this future right now by interacting with James in real time at ai.nvidia.com.

Generative AI is transforming games, video conferencing, and interactive experiences of all kinds. Stay up to date on what’s new and what’s next by subscribing to the AI Decoded newsletter.