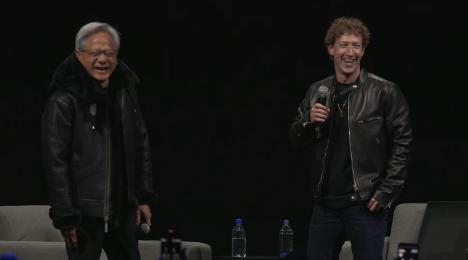

NVIDIA CEO Jensen Huang and Meta CEO Mark Zuckerberg exchange jackets and laugh on stage

NVIDIA

SIGGRAPH is the graphics industry’s premier conference and a great place for analysts like me, or anyone trying to predict the future of graphics. The show is heavily research-focused and draws researchers, architects, and engineers from across the graphics industry. Two companies had a dominant presence at the show: Meta and Nvidia. Last year I reported on Nvidia’s dominance of the show, but I also covered new research projects from other companies in the industry, especially Meta.

Nvidia: More NIM and OpenUSD

Nvidia has been a major contributor to the OpenUSD standard for many years and sees it as one of the key file formats for enabling cross-platform XR applications. The USD file format was originally created by Pixar and was later open-sourced, becoming OpenUSD. Today, Nvidia and many ISV partners use OpenUSD to collaborate and share projects and ideas. OpenUSD is also the foundation of Nvidia’s Omniverse cloud platform, which is one of the main reasons Nvidia is so committed to it.

At SIGGRAPH 2024, Nvidia announced a set of generative AI NIM microservices for building agents and copilots into USD workflows. NIMs are optimized, cloud-native microservices designed to help developers accelerate time to market by simplifying the deployment of generative AI models. The new ones include USD Code NIM, USD Search NIM, and USD Validate NIM, which do what their names suggest. Most of these microservices are designed to make USD easier to work with. We think USD Search will be one of the most attractive NIMs for developers because finding 3D assets is notoriously difficult, and being able to find USD and other 3D assets using natural language or images could be very useful in both creative and engineering applications.

In addition to the USD NIMs currently available in preview, Nvidia announced the upcoming USD Layout NIM and USD SmartMaterial NIM. Layout assembles OpenUSD-based scenes from a series of text prompts, while SmartMaterial predictively applies realistic materials to CAD objects. Many of these NIMs simplify the use of 3D tools while also tying developers and users more closely to Nvidia, creating a dependency on NIMs on top of Omniverse lock-in.

As part of the larger NIM release, Nvidia also announced fVDB, along with three new fVDB NIMs. fVDB is built on OpenVDB, an open-source, industry-standard library for simulating and rendering sparse volumetric data such as water, fire, smoke, and clouds, and it adds a deep learning framework for generating AI-enabled virtual representations of the real world. According to Nvidia, fVDB handles four times the spatial scale of previous frameworks and runs almost four times faster.

The fVDB Mesh Generation NIM generates OpenUSD-based meshes from point cloud data using the Omniverse Cloud API, making it possible to build 3D environments that represent the real world. The fVDB Physics Super Resolution NIM performs super-resolution upscaling on a frame or sequence of frames to generate a high-resolution, OpenUSD-based physics simulation. Finally, the fVDB NeRF-XL NIM helps you create large-scale NeRFs (Neural Radiance Fields) in OpenUSD using the Omniverse Cloud API. NeRFs are a great way to quickly and easily build 3D environments from simple 2D image captures. This is very similar to Varjo’s Teleport tool, which we covered in our AWE 2024 wrap-up post.

A virtual representation of a Foxconn factory in Omniverse

NVIDIA

At the conference, Nvidia offered real-world examples to validate its work with Omniverse and NIM. Foxconn, which has more than 170 factories around the world, is using Omniverse and NIM to train robots for its newest factory in Guadalajara, Mexico, relying on a digital twin powered by Omniverse and Isaac, Nvidia’s robotics platform. Nvidia also noted that WPP, one of the world’s leading advertising companies, has already adopted the USD Search and USD Code NIMs to bring generative AI-enabled content creation into its workflows for clients such as Coca-Cola.

Generative AI Coca-Cola ad concept created with Omniverse

NVIDIA

Beyond its many NIMs, Nvidia also announced USD Connectors to bring generative AI to more industries. Connectors give applications access to the Omniverse platform, converting data from different apps to USD and standardizing it across tools such as Maya, Blender, Houdini, 3ds Max, Rhino, Unity, and Unreal Engine.

This year, Nvidia added connectors for Siemens’ Simcenter suite and the Unified Robot Description Format (URDF), both of which should help increase adoption of USD and Omniverse among Nvidia’s partners. Nvidia also wants to make USD even more open with its OpenUSD Exchange software development kit, which lets developers build their own robust OpenUSD data connectors. Meanwhile, the Alliance for OpenUSD continues to grow, adding six new members, and a new version of OpenUSD (version 24.08) has been released.

Meta unveils new model

At SIGGRAPH, Meta announced the open-source SAM 2 AI model (SAM stands for “Segment Anything Model”). SAM 2 improves on the original SAM: it is faster and supports video as well as still images. The model can segment and track objects in real time across virtually any content, making it usable for both creative and industrial applications. It may also improve the quality of the video editing applications that adopt it. It could also make content editing easier and cheaper for Meta itself, as more hardware and platforms are optimized for the model. I tried the SAM 2 demo on Meta’s site, and the potential applications seem nearly endless, for creative work as well as for business and education. Meta claims that SAM 2 outperforms the best existing models at segmenting objects in both video and images.

Block diagram showing how Meta’s SAM 2 works

Meta

To support SAM 2, Meta has also released the SA-V dataset for training general-purpose segmentation models on open-world videos covering everything from places and objects to entire real-world scenes. This makes the dataset more applicable to the real-world video applications where users might apply SAM 2. SA-V consists of 51,000 diverse videos and 643,000 spatio-temporal segmentation masks, which Meta calls “masklets.” Meta has shared many details about how the dataset was created, labeled, and validated.

It will be interesting to see which companies and countries use the SAM 2 model and SA-V dataset to build their own models. Given the high cost of developing AI models and the limited availability of datasets, many countries are already using Meta’s models as the basis for their own. Coming so soon after the release of Llama 3.1, SAM 2 makes it clear that Meta is on a mission to keep producing better and more efficient models, so that AI continues to grow and developers have access to best-in-class models for their applications.

At the event, Meta CEO Mark Zuckerberg also spoke about Meta’s AI Studio, which enables individuals and businesses to create their own custom AIs. Among other things, users can create an AI in their own image or build one that solves specific problems using specialized domain knowledge.

During a fireside chat at SIGGRAPH, Zuckerberg and Nvidia CEO Jensen Huang discussed how creators and artists could use these customized AIs to produce content for their fans in their own style and then offer it as a paid service. They also discussed how powerful custom AI assistants could help small businesses handle customer service and respond easily to their customers’ needs. Larger businesses could deploy these assistants at scale, grounded in their own unique datasets and knowledge bases.

Generative AI continues to evolve graphics

Both Nvidia and Meta are major contributors to SIGGRAPH and the graphics industry, so it’s no surprise that both companies made big announcements at the show. Nvidia has often used the show to unveil new RTX GPUs, but this year its contributions focused heavily on software enablement through OpenUSD, NIM, and Omniverse.

On Meta’s side, AI Studio and the SAM 2 model demonstrate Meta’s continued leadership in AI and how important the company remains to the industry. We’re excited to see how SAM 2 is commercialized, as it seems to be one of the best models for masking, an incredibly popular AI-accelerated tool in video editing apps.

Some industry observers claim that the AI gold rush is over and the AI hype bubble has already burst, but judging by the latest announcements from Meta and Nvidia, things are still moving at a very fast pace.