Ahead of the Hot Chips 2024 conference, Nvidia showed off more elements of the Blackwell platform, including servers being installed and configured, a not-so-subtle way of saying that Blackwell is still coming (never mind the delays). The company also discussed its existing Hopper H200 solution, showed FP4 LLM optimization with its new Quasar Quantization System, discussed warm water liquid cooling for data centers, and talked about using AI to build better chips for AI. Throughout, it reiterated that Blackwell is not just a GPU but a complete platform and ecosystem.

We already know a lot of what Nvidia will announce at Hot Chips 2024. For example, its data center and AI roadmaps show Blackwell Ultra coming next year, Vera CPUs and Rubin GPUs coming in 2026, followed by Rubin Ultra in 2027. Nvidia first confirmed these details at Computex in June, but AI remains a big topic, and Nvidia is happy to keep promoting it.

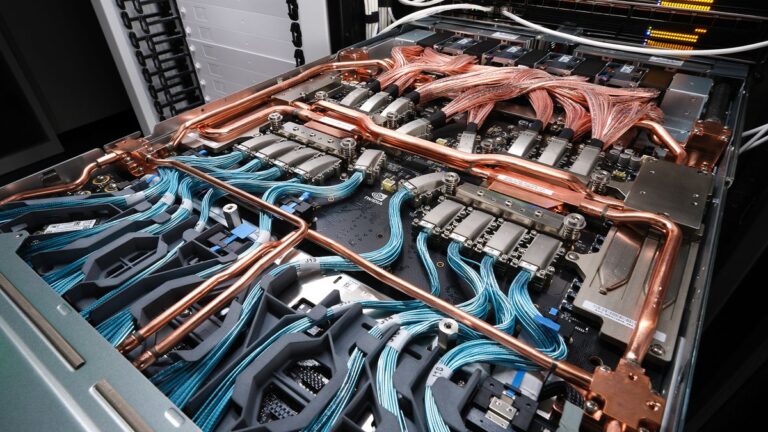

Blackwell is reportedly three months delayed, but Nvidia has neither confirmed nor denied that information, instead releasing images of a Blackwell system installation, as well as photos and renders detailing the internal hardware of the Blackwell GB200 rack and NVLink switch. There’s not much to say other than the hardware appears to consume a lot of power and have fairly impressive cooling capabilities. It also looks very expensive.

Nvidia also showed performance results for the existing H200 running with and without NVSwitch. The company claims that inference workloads perform up to 1.5x better with NVSwitch than with a point-to-point design (using the Llama 3.1 70B parameter model). Blackwell doubles the NVLink bandwidth for further improvements, with the NVLink switch tray providing a total of 14.4TB/s of aggregate bandwidth.

As data center power demands continue to grow, Nvidia is also working with partners to improve performance and efficiency. One promising result is warm water cooling, where the heated water can then be recirculated for heating, further reducing costs. Nvidia claims the technique has reduced data center power usage by up to 28%, with most of that coming from the elimination of sub-ambient cooling hardware.

Above is the full slide deck from Nvidia’s presentation. A few other items are worth noting.

In preparation for Blackwell, which adds native FP4 support for even better performance, Nvidia has been working to ensure that modern software can benefit from the new hardware features without sacrificing accuracy. After using the Quasar Quantization System to tune the workload, Nvidia says it achieved essentially the same quality as FP16 while using a quarter of the bandwidth. The two resulting rabbit images differ slightly, but that is common for text-to-image tools such as Stable Diffusion.
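The "quarter of the bandwidth" figure follows directly from the bit widths: an FP4 value occupies 4 bits where an FP16 value occupies 16. As a rough illustration, the Python sketch below rounds values to the nearest point on a 4-bit E2M1 grid using a single scale per tensor, and computes the raw storage needed at each precision. This is a generic toy quantizer, not Nvidia's Quasar Quantization System, whose internals are not described here; the function names (`quantize_fp4`, `dequantize_fp4`, `weight_bytes`) and the per-tensor scaling choice are assumptions made purely for illustration.

```python
# Toy round-to-nearest FP4 (E2M1) quantizer with one scale per tensor.
# Illustrative only: this is NOT Nvidia's Quasar Quantization System.

# Magnitudes representable by a 4-bit E2M1 float
# (1 sign bit, 2 exponent bits, 1 mantissa bit).
FP4_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
FP4_MAX = FP4_MAGNITUDES[-1]


def quantize_fp4(values):
    """Return (scale, codes); each code is a signed value on the FP4 grid."""
    peak = max((abs(v) for v in values), default=0.0)
    # Map the largest magnitude onto the largest FP4 value (assumed scheme).
    scale = peak / FP4_MAX if peak > 0 else 1.0
    codes = []
    for v in values:
        target = abs(v) / scale
        nearest = min(FP4_MAGNITUDES, key=lambda m: abs(m - target))
        codes.append(-nearest if v < 0 else nearest)
    return scale, codes


def dequantize_fp4(scale, codes):
    """Recover approximate real values from FP4 codes and their scale."""
    return [c * scale for c in codes]


def weight_bytes(num_params, bits_per_param):
    """Raw storage for the values alone, ignoring scale-factor overhead."""
    return num_params * bits_per_param // 8


if __name__ == "__main__":
    scale, codes = quantize_fp4([6.0, -3.0, 0.4])
    print(scale, codes, dequantize_fp4(scale, codes))
    params = 70_000_000_000  # e.g. a 70B-parameter model
    print(weight_bytes(params, 16), weight_bytes(params, 4))
```

For a 70B-parameter model, the raw weights come to 140 GB at 16 bits and 35 GB at 4 bits, which is where the fourfold reduction in data moved comes from. Practical schemes typically add per-block scale factors and calibration on top of simple rounding like this, which is the part that determines whether output quality holds up.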

Nvidia also said it is using AI tools to design better chips. Call it AI building AI. Nvidia created its own in-house LLM to help it design, debug, analyze, and optimize faster. The model works with Verilog, the language used to describe circuits, and was a key element in creating the Blackwell B200 GPU, which has 208 billion transistors. The resulting chips will in turn be used to train even better models, helping Nvidia work on the next generation of Rubin GPUs and beyond. (Feel free to insert your own Skynet jokes at this point.)

Taken together, we now have a clearer picture of Nvidia’s AI roadmap for the next few years, which again defines the “Rubin platform,” with its switches and interlinks, as a whole package. Nvidia is expected to go into more detail about the Blackwell architecture, its use of generative AI for computer-aided engineering, and liquid cooling at the Hot Chips conference next week.