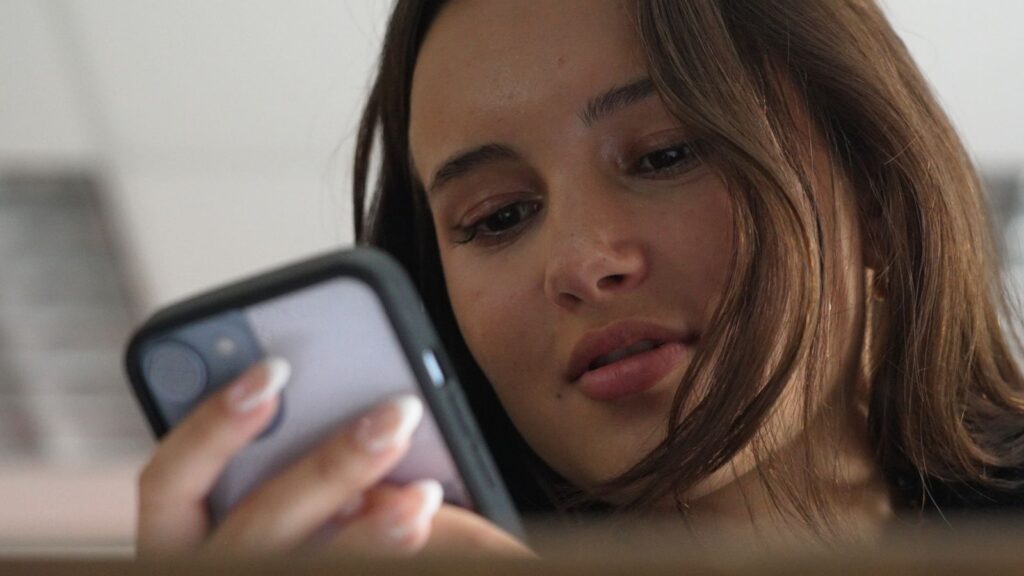

A Disney Channel child star has told Sky News she “broke down in tears” after learning predators had used artificial intelligence (AI) to create sexual abuse images of her.

Sixteen-year-old Kaylin Heyman returned home from school one day to a call from the FBI. Agents told her that a man living thousands of miles away had sexually violated her without her knowledge.

Investigators said Kaylin’s face had been superimposed onto an image of an adult engaged in a sexual act.

“I broke down in tears when I heard,” Kaylin said. “I feel like my privacy has been violated. It’s surreal that strangers would see me like this.”

Kaylin appeared on the Disney Channel television series “Just Roll With It” for several seasons and was one of several child actors targeted.

“My innocence was taken from me in that moment,” she added. “I was a 12-year-old girl in that footage so it was heartbreaking to say the least. I felt so alone because I had no idea this was a real crime that was happening in the world.”

But Kaylin’s story is far from unique: According to statistics from the US National Center for Missing and Exploited Children (NCMEC), there were 4,700 reports of child sexual exploitation images and videos created by generative AI last year.

AI-generated child sexual abuse images are so realistic that law enforcement experts are forced to spend countless unsettling hours trying to distinguish which of these images are computer-simulated and which show real victims.

That’s the job of investigators like Terry Dobrosky, a cybercrimes expert in Ventura County, California.

“The AI-generated material we have today is so lifelike that it’s disturbing,” he said. “Someone could argue in court, ‘Oh, I believed that was AI-generated. I didn’t believe it was a real child, so I’m not guilty.’ This is undermining our current law, and it’s very worrying.”

Sky News was granted rare access to the heart of the Ventura County Cyber Crimes Investigation Team.

Dobrosky, an investigator for the district attorney, showed me some of the message boards he monitors on the dark web.

“We have a guy here,” he says, pointing at the computer screen, “who goes by the name ‘I Love Little Girls,’ and his comment is that the quality of the AI is getting so good. Another guy said he’s happy that the AI has helped him with his addiction. It’s more like it’s reinforcing the addiction, not a way to overcome it.”

The use of artificial intelligence to create and consume sexualized imagery isn’t just happening on the dark web: There have also been cases in schools of children taking photos of their classmates from social media and using AI to superimpose them onto naked bodies.

Five students, aged 13 and 14, were expelled from a school in Beverly Hills, California, after doing just that, prompting a police investigation.

However, some states, such as California, have not yet made it a crime to use AI to create child sexual abuse imagery.

Ventura County Deputy District Attorney Rico Kelly is trying to change that by proposing new legislation.

“This technology is so easily accessible that even middle school students (ages 10 to 14) can use it in ways that are traumatizing to their peers,” she said. “It’s really alarming because it’s so easily accessible and, if it gets into the wrong hands, it has the potential to cause irreparable harm.”

“We’re not trying to desensitize the public to child sexual abuse,” she added, “and using this technology in this way does that.”