Developers will have access to new NVIDIA NIM microservices for robot simulation in Isaac Lab and Isaac Sim, the NVIDIA OSMO orchestration service for robotics cloud computing, a teleoperated data capture workflow and more.

SIGGRAPH — To accelerate humanoid development on a global scale, NVIDIA today announced it is offering the world’s leading robotics manufacturers, AI model developers and software makers a set of services, models and computing platforms to develop, train and build the next generation of humanoid robots.

Services offered include new NVIDIA NIM™ microservices and frameworks for robotic simulation and training, NVIDIA OSMO orchestration services for running multi-stage robotics workloads, and AI- and simulation-enabled teleoperation workflows that allow developers to train robots using small amounts of human demonstration data.

“The next wave of AI is robotics, and one of the most exciting developments is humanoid robots,” said Jensen Huang, founder and CEO of NVIDIA. “We’re advancing NVIDIA’s entire robotics stack to ensure humanoid developers and companies around the world have access to the platform, acceleration libraries and AI models that best suit their needs.”

Accelerate Development with NVIDIA NIM and OSMO

NIM microservices provide prebuilt containers powered by NVIDIA inference software, helping developers reduce deployment time from weeks to minutes. Two new AI microservices enable robotics engineers to enhance their generative physical AI simulation workflows in NVIDIA Isaac Sim™, a reference application for robot simulation built on the NVIDIA Omniverse™ platform.

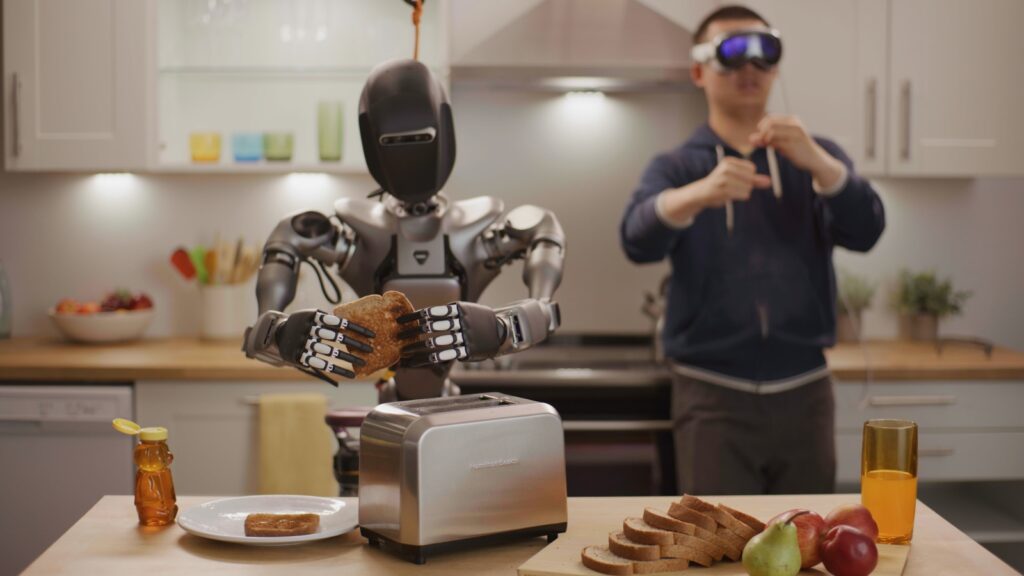

The MimicGen NIM microservice generates synthetic motion data from teleoperation data recorded with spatial computing devices such as Apple Vision Pro, while the RoboCasa NIM microservice generates robot tasks and simulation-ready environments in OpenUSD, a universal framework for development and collaboration in 3D worlds.

Available now, NVIDIA OSMO is a cloud-native managed service that orchestrates and scales complex robotics development workflows across distributed computing resources, whether on premises or in the cloud.

OSMO dramatically simplifies robot training and simulation workflows, cutting deployment and development cycle times from months to less than a week. Users can visualize and manage a wide range of tasks: generating synthetic data for humanoids, autonomous mobile robots and industrial manipulators; training models; conducting reinforcement learning; and running software-in-the-loop testing at scale.

Evolving Data Capture Workflows for Humanoid Robot Developers

Training the foundation models that power humanoid robots requires vast amounts of data. One way to capture human demonstration data is through teleoperation, but this is becoming an increasingly expensive and time-consuming process.

Demonstrated at the SIGGRAPH computer graphics conference, an NVIDIA AI- and Omniverse-enabled teleoperation reference workflow lets researchers and AI developers generate large amounts of synthetic motion and perception data from a minimal number of remotely captured human demonstrations.

First, developers use Apple Vision Pro to capture a small number of teleoperated demonstrations. They then simulate the recordings in NVIDIA Isaac Sim and use the MimicGen NIM microservice to generate synthetic datasets from them.

Next, developers train the Project GR00T humanoid foundation model on both real and synthetic data, saving time and reducing costs. They then use the RoboCasa NIM microservice in Isaac Lab, a framework for robot learning, to generate experiences for retraining the robot model. Throughout the workflow, NVIDIA OSMO seamlessly assigns computing jobs to different resources, saving developers weeks of administrative work.

Fourier, a general-purpose robotics platform company, has seen the benefits of using simulation to synthetically generate training data.

“Developing humanoid robots is extremely complex and requires vast amounts of real data, painstakingly captured from the real world,” said Alex Gu, CEO of Fourier. “NVIDIA’s new simulation and generative AI developer tools will help kickstart and accelerate our model development workflows.”

NVIDIA Expands Access to Technology for Humanoid Developers

NVIDIA offers three computing platforms for humanoid robot development: NVIDIA AI supercomputers to train the models; NVIDIA Isaac Sim, built on Omniverse, where robots can learn and refine their skills in simulated worlds; and the NVIDIA Jetson™ Thor humanoid robot computer to run the models. Developers can access and use all or any part of the platform to meet their specific needs.

Through the new NVIDIA Humanoid Robot Developer Program, developers will get early access to new offerings and the latest releases of NVIDIA Isaac Sim, NVIDIA Isaac Lab, Jetson Thor and the Project GR00T general-purpose humanoid foundation models.

1x, Boston Dynamics, ByteDance Research, Field AI, Figure, Fourier, Galbot, LimX Dynamics, Mentee, Neura Robotics, RobotEra and Skild AI are among the first companies to join the early access program.

“Boston Dynamics and NVIDIA have worked closely for many years to push the boundaries of what’s possible in robotics,” said Aaron Saunders, chief technology officer at Boston Dynamics. “We’re excited to see the results of this work accelerate the entire industry, and the early access program is a great way to gain access to best-in-class technology.”

Availability

Developers can join the NVIDIA Humanoid Robot Developer Program today to get access to NVIDIA OSMO and Isaac Lab, with access to NVIDIA NIM microservices coming soon.

Watch Huang at SIGGRAPH, the world’s leading computer graphics conference, taking place in Denver through August 1, to learn more about the latest in generative AI and accelerated computing.