The scent of hay and fertilizer hangs over Culpeper County, Virginia, where there is a cow for every three people. “We have a lot of large farms owned by families, and a lot of forests,” says Sarah Palmery, one of the county’s 55,000 residents. “It’s a very charming small town in America,” she adds.

But this idyll is in the midst of a twenty-first-century transformation. Over the past few years, the county has approved the construction of seven large data-center projects, which will support technology companies’ expansive plans for artificial intelligence (AI). Inside these huge structures, rows of computer servers train the AI models behind chatbots such as ChatGPT and deliver answers to billions of daily queries around the world.

In Virginia, the effects of this construction could be significant. Each facility could consume as much electricity as tens of thousands of homes, potentially raising costs for residents and straining the local power infrastructure beyond its capacity. Palmery and others in the community are wary of data centers’ appetite for electricity, especially because Virginia is already known as the data-center capital of the world. A review by a state legislative committee, published in December 2024, found that data centers bring economic benefits, but that their growth could double Virginia’s electricity demand within a decade.

“Where is the power going to come from?” asks Palmery, who maps the growth of the state’s data centers for the Piedmont Environmental Council, a nonprofit organization headquartered in Warrenton, Virginia. “They all say, ‘We’ll buy electricity from the next district over.’ But that district is planning to buy electricity from you.”

Similar battles over AI and energy are brewing in many places around the world where data centers are springing up at a record pace. Big tech companies are betting heavily on generative AI, which extracts patterns from data to produce fresh text and images but requires more energy to run than older AI models that don’t generate content. This has prompted companies to collectively spend hundreds of billions of dollars on new data centers and servers to expand their capacity.

Globally, AI’s impact on future electricity demand is actually projected to be relatively small. But data centers are concentrated in dense clusters, where their local impact can be profound. They are much more spatially concentrated than other energy-intensive facilities, such as steel mills and coal mines. Companies tend to build data-center buildings close together so that they can share power grids and cooling systems and transfer information efficiently, both among themselves and to their users. Virginia, in particular, has attracted data-center companies by offering tax breaks, leading to even more clustering.

“If you have one, you tend to get more,” Palmery says. Virginia already has 340 such facilities, and Palmery has mapped 159 proposed data centers or expansions of existing ones in the state. Data centers account for more than a quarter of Virginia’s electricity use, according to a report by EPRI, a research institute in Palo Alto, California. In Ireland, data centers account for more than 20% of the country’s electricity consumption, and most of them are on the outskirts of Dublin. And in at least five US states, data centers’ share of electricity consumption exceeds 10%.

Complicating matters further is a lack of transparency from companies about the electricity requirements of their AI systems. “The real problem is that we have very little detailed data and knowledge about what’s going on,” says Jonathan Koomey, an independent researcher who has studied energy use in computing for more than 30 years and who runs an analytics firm in Burlingame, California.

“I think every researcher on this topic is going crazy because we don’t have what we need,” says Alex de Vries, a researcher at the Free University of Amsterdam and founder of Digiconomist, a Dutch company that explores the unintended consequences of digital trends. “We do our best, try all sorts of tricks and try to come up with some kind of numbers.”

Untangling AI’s energy requirements

In the absence of detailed figures from companies, researchers have investigated AI’s energy needs in two ways. In 2023, de Vries used a supply-chain (or market-based) method (ref. 3). He examined the power draw of one of the NVIDIA servers that dominate the generative-AI market and extrapolated it to the energy that server would use over a year. He then multiplied that figure by an estimate of the total number of such servers being shipped, or needed for a particular task.
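
The supply-chain method boils down to multiplying one server’s power draw by the hours in a year and then by a server count. A minimal Python sketch, assuming every server draws a constant power around the clock (real utilization is lower, so this leans towards an upper bound); the function name and the inputs in the usage line are hypothetical, not de Vries’s actual figures or code.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_twh(server_power_kw: float, n_servers: int,
                      utilization: float = 1.0) -> float:
    """Supply-chain (market-based) estimate of a server fleet's yearly energy use.

    server_power_kw: average power draw of one server, in kilowatts
    n_servers: number of such servers shipped, or needed for a task
    utilization: fraction of the year spent at that draw (an assumption)
    """
    # Energy for one server over a year, in kilowatt-hours
    kwh_per_server = server_power_kw * HOURS_PER_YEAR * utilization
    # Scale to the whole fleet and convert kWh to TWh (1 TWh = 1e9 kWh)
    return kwh_per_server * n_servers / 1e9


# Hypothetical inputs: 100,000 servers, each drawing 5 kW around the clock
print(f"{annual_energy_twh(5.0, 100_000):.2f} TWh/year")
```

With these made-up inputs the sketch prints 4.38 TWh/year; halving `utilization` halves the result, which is why assumptions about how busy servers are matter as much as the shipment counts.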

De Vries used this method to estimate the energy that would be needed if Google used generative AI for its searches. Two energy-analyst firms had estimated that implementing ChatGPT-like AI in every Google search would require 400,000–500,000 NVIDIA A100 servers. Then, assuming that Google handles up to 9 billion searches a day (a ballpark figure from various analysts), de Vries calculated that each request through an AI server requires 7–9 watt-hours (Wh) of energy. That is 23–30 times the energy of a regular search, going by figures Google reported in a 2009 blog post (see go.nature.com/3d8sd4t). Google did not respond when asked to comment on de Vries’s estimates.
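
The per-query figure follows from dividing the server fleet’s daily energy by the number of daily searches. The sketch below roughly reproduces that arithmetic under two labeled assumptions not stated in the article: each A100 server draws about 6.5 kW (the maximum rated power of an NVIDIA DGX A100 system), and a regular search uses about 0.3 Wh, the baseline implied by the 23–30× ratio above.

```python
SERVER_POWER_KW = 6.5    # assumption: max rated draw of one NVIDIA DGX A100 system
QUERIES_PER_DAY = 9e9    # up to 9 billion searches a day (analyst ballpark)
REGULAR_SEARCH_WH = 0.3  # assumption: energy of a conventional search


def wh_per_query(n_servers: int,
                 server_power_kw: float = SERVER_POWER_KW,
                 queries_per_day: float = QUERIES_PER_DAY) -> float:
    """Energy per AI-assisted query, in watt-hours."""
    # Daily fleet energy in Wh: kW to W (x1000), times 24 hours
    daily_wh = n_servers * server_power_kw * 1000 * 24
    return daily_wh / queries_per_day


for servers in (400_000, 500_000):
    wh = wh_per_query(servers)
    ratio = wh / REGULAR_SEARCH_WH
    print(f"{servers:,} servers: {wh:.1f} Wh/query, {ratio:.0f}x a regular search")
```

This prints about 6.9 and 8.7 Wh per query (roughly 23× and 29× a regular search), in line with the 7–9 Wh range, which suggests the estimate is dominated by the server count and per-server power assumptions.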

Calculating the energy felt like “grasping at straws,” de Vries says. And his numbers quickly became outdated. The number of servers needed for AI-integrated Google searches might now be lower, because current AI models can match the accuracy of 2023’s models at a fraction of the computational cost.

Still, de Vries says, the best way to assess the energy footprint of generative AI is to track server shipments and their power requirements, which is the method many analysts use. However, because data centers generally also perform non-AI tasks, it is difficult for analysts to isolate the energy used by generative AI alone.

Bottom-up estimate

Another way to gauge AI’s energy needs is to work “bottom up”: researchers measure the energy demand of a single AI-related request in a specific data center. However, independent researchers can make such measurements only with open-source AI models, which are expected to resemble proprietary ones.

The idea behind these tests is that a user submits prompts, such as requests to generate an image or to hold a text-based chat, and uses a Python software package called CodeCarbon, which lets their computer access technical information about the chips that run the model in the data center. “At the end of the run, you get an estimate of how much energy was consumed by the hardware you were using,” says Sasha Luccioni, an AI researcher at Hugging Face, a New York City-based company that hosts an open-source platform for AI models and datasets.
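
Tools of this kind estimate energy by periodically reading the hardware’s power draw (for example, from GPU and CPU power counters) and accumulating power multiplied by elapsed time. CodeCarbon’s internals are not described in this article, so the following is a generic, hypothetical illustration of that idea using trapezoidal integration over made-up power samples; it does not call CodeCarbon’s API.

```python
def energy_wh(samples: list[tuple[float, float]]) -> float:
    """Integrate (time_s, power_w) samples into energy in watt-hours.

    Uses the trapezoidal rule: between consecutive readings, power is
    assumed to change linearly.
    """
    joules = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        joules += (p0 + p1) / 2 * (t1 - t0)  # average power x interval length
    return joules / 3600  # 1 Wh = 3,600 J


# Hypothetical readings taken every 15 s while a model answers a prompt
readings = [(0, 300.0), (15, 420.0), (30, 410.0), (45, 310.0)]
print(f"{energy_wh(readings):.3f} Wh")
```

The hypothetical readings integrate to 17,025 J, or about 4.729 Wh. Sampling more often tracks spikes in power draw better, at the cost of more overhead, and anything the sampler cannot see (such as data-center cooling) is left out entirely.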

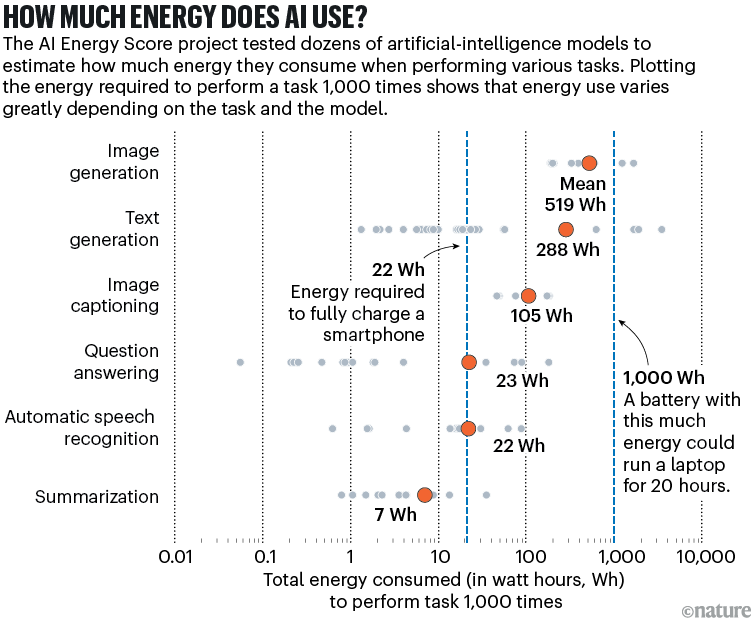

Luccioni and others found that different tasks require different amounts of energy. On average, according to the latest results, generating an image from a text prompt consumes about 0.5 Wh of energy, whereas generating text uses a little less. For comparison, a modern smartphone might need 22 Wh to charge fully. But there is wide variation: larger models require more energy (see “How much energy does AI use?”). De Vries says the numbers are lower than those in his paper, but that might be because the models used by Luccioni and her colleagues are at least an order of magnitude smaller than the model underlying ChatGPT, and because AI is becoming more efficient.
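
To put the per-task figures in perspective, dividing the energy of a full phone charge by the energy per task gives the number of tasks per charge. A quick sketch using the article’s rounded averages (0.5 Wh per image, 22 Wh per charge); the function name is hypothetical.

```python
IMAGE_WH = 0.5          # average energy to generate one image from a text prompt
PHONE_CHARGE_WH = 22.0  # energy to fully charge a modern smartphone


def tasks_per_charge(task_wh: float, charge_wh: float = PHONE_CHARGE_WH) -> float:
    """How many AI tasks use the same energy as one full phone charge."""
    return charge_wh / task_wh


print(tasks_per_charge(IMAGE_WH))  # 44.0 images per phone charge
```

Because text generation uses slightly less energy than image generation, it would yield somewhat more responses per charge, while larger models would yield far fewer.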

Source: Hugging Face AI Energy Score Leaderboard

These numbers are probably at the low end, according to Emma Strubell, a computer scientist at Carnegie Mellon University in Pittsburgh, Pennsylvania, who has collaborated with Luccioni. Otherwise, “the companies would come out and correct us,” she says. “They haven’t done that.”

Furthermore, companies generally withhold the information needed to estimate how much energy data centers use for cooling while running the software. CodeCarbon also cannot access the energy consumption of certain chips, including Google’s own TPU chips, says Benoît Courty, a French data scientist who maintains CodeCarbon.

Luccioni also studies how much energy is needed to train a generative AI model, the stage in which the model extracts statistical patterns from vast amounts of data. But if a model receives billions of queries every day, as in the Google estimates, the energy used to answer those queries (on the order of terawatt-hours of electricity a year) dominates AI’s annual energy demand. By comparison, training a model the size of GPT-3, the model behind the first version of ChatGPT, requires energy on the order of gigawatt-hours.
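
A back-of-the-envelope comparison shows why answering queries, rather than training, dominates once queries number in the billions. The sketch reuses the Google scenario’s 9 billion daily queries and the low end of 7 Wh per query, and takes 1 GWh as an illustrative order-of-magnitude training cost for a GPT-3-sized model; all three are rough inputs, not measured values.

```python
QUERIES_PER_DAY = 9e9  # Google-scale scenario from the de Vries estimate
WH_PER_QUERY = 7.0     # low end of the 7-9 Wh per AI-assisted query
TRAINING_GWH = 1.0     # illustrative order of magnitude for GPT-3-scale training


def annual_inference_twh(queries_per_day: float, wh_per_query: float) -> float:
    """Yearly energy to answer queries, in terawatt-hours (1 TWh = 1e12 Wh)."""
    return queries_per_day * wh_per_query * 365 / 1e12


inference_twh = annual_inference_twh(QUERIES_PER_DAY, WH_PER_QUERY)
ratio = inference_twh * 1000 / TRAINING_GWH  # convert TWh to GWh before comparing
print(f"inference: {inference_twh:.1f} TWh/year, ~{ratio:,.0f}x one training run")
```

Under these inputs, a year of answering queries comes to roughly 23 TWh, about four orders of magnitude more than a single gigawatt-hour-scale training run.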

Last month, Luccioni and other researchers launched the AI Energy Score project, a public initiative that compares the energy efficiency of AI models across various tasks. Developers of proprietary, closed models can also upload their test results, but so far only the US software company Salesforce has taken part, says Luccioni.

Strubell says companies have become increasingly secretive about the energy requirements of their latest industry models. As competition has intensified, “the information shared outside the companies has dried up,” she says. However, companies such as Google and Microsoft have reported increases in their carbon emissions, which they attribute to building the data-center infrastructure that supports AI. (When asked by Nature, Google, Microsoft and Amazon did not address criticisms about the lack of transparency. Instead, they emphasized that they are working with local governments to ensure that new data centers do not affect the supply of local utilities.)

Some governments are now demanding more transparency from companies. In 2023, the European Union adopted the Energy Efficiency Directive, which requires operators of data centers rated at 500 kilowatts of power or more to report their energy consumption each year.

Global Forecast

On the basis of supply-chain estimates, analysts say that data centers currently account for only a small share of global electricity demand. The International Energy Agency (IEA) estimates that such facilities used 240–340 TWh of electricity in 2022, or 1–1.3% of global demand (including cryptocurrency mining and data-transmission infrastructure raises this share to 2%).
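
The IEA’s two figures can be cross-checked: dividing data-center consumption by its percentage share gives the implied total global electricity demand. A quick sketch of that arithmetic, assuming the low ends and high ends of the two ranges correspond to each other; the function name is hypothetical.

```python
def implied_total_twh(sector_twh: float, share_percent: float) -> float:
    """Total demand implied by a sector's consumption and its percentage share."""
    return sector_twh / (share_percent / 100)


low = implied_total_twh(240, 1.0)   # 240 TWh at 1% of global demand
high = implied_total_twh(340, 1.3)  # 340 TWh at 1.3% of global demand
print(f"implied global demand: {low:,.0f}-{high:,.0f} TWh")
```

The two ranges are consistent with a global demand of roughly 24,000–26,000 TWh, so the percentages and absolute figures hang together.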

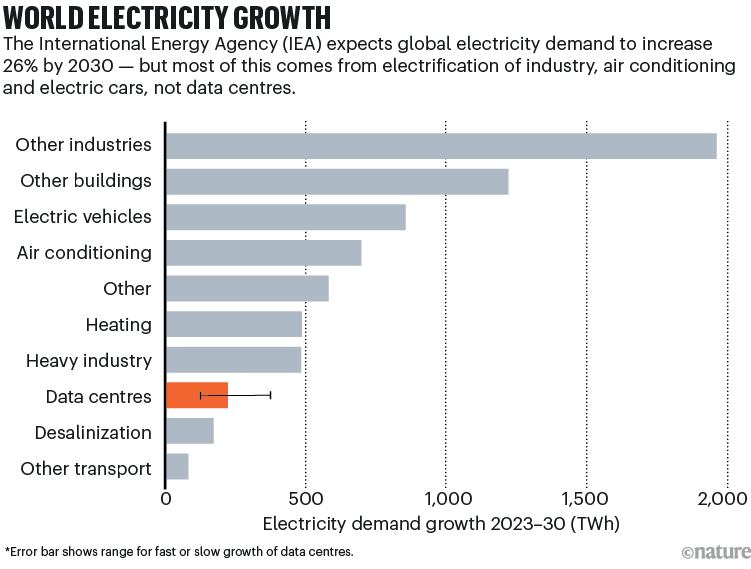

The AI boom will push this figure up. But global electricity consumption is expected to grow by more than 80% by 2050, driven by the electrification of many industries, the rise of electric vehicles and growing demand for air conditioning, so data centers will “account for a relatively small share of the growth in electricity demand” (see “Global power growth”).

Source: ref. 4

Even with rough estimates of AI’s current energy demand, predicting future trends is difficult, Koomey warns. “Nobody really knows how much energy data centers, whether AI or traditional ones, will be using even a few years from now,” he says.

The main problem is disagreement over how many servers and data centers will be needed, he says, an area in which utility companies and tech firms have financial incentives to inflate the numbers. Many of their projections rest on “simple-minded assumptions,” he adds. “They extrapolate recent trends out 10 or 15 years.”
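
Koomey’s criticism is easy to see with a toy model. Extrapolating a recent annual growth rate as a fixed compound rate over 15 years makes the projection extremely sensitive to that single assumed rate. The starting value and growth rates below are hypothetical, chosen only to illustrate the sensitivity.

```python
def extrapolate(current: float, annual_growth: float, years: int) -> float:
    """Naive projection: assume the recent growth rate holds every year."""
    return current * (1 + annual_growth) ** years


# Hypothetical: 100 units of demand today, projected 15 years out
for rate in (0.05, 0.10, 0.15):
    print(f"{rate:.0%} a year -> {extrapolate(100, rate, 15):,.0f} units")
```

Tripling the assumed growth rate from 5% to 15% roughly quadruples the 15-year projection (about 208 versus 814 units), so a modest disagreement about recent trends becomes a large disagreement about future demand.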

Late last year, Koomey co-authored a report funded by the US Department of Energy (ref. 5). It estimates that US data centers currently use 176 TWh, or 4.4% of the nation’s electricity, and that this consumption could double or triple by 2028, reaching 7–12% of the national total.