Investors misinterpreted DeepSeek’s AI advances, said Nvidia CEO Jensen Huang. DeepSeek’s large language model, which the company said was built with weaker chips, rattled the market in January.

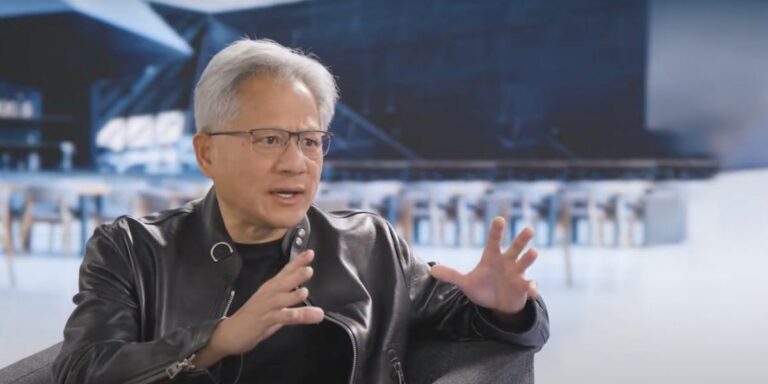

Investors have taken the wrong message from DeepSeek’s advances in AI, Nvidia CEO Jensen Huang said at a virtual event that aired Thursday.

DeepSeek, a Chinese AI company owned by the hedge fund High-Flyer, released a competitive open-source reasoning model named R1 in January. The company said the large language model that underpins R1 was built with weaker chips and a fraction of the funding of the major Western-made AI models.

Investors responded to the news by selling Nvidia shares, wiping out $600 billion in market capitalization. Huang himself temporarily lost almost 20% of his net worth. The stock has since recovered much of the lost value.

The dramatic market response stemmed from investors’ misunderstanding, Huang said in a pre-recorded interview that aired Thursday as part of an event debuting Infinia, a new software platform from Nvidia partner DDN.

Investors are questioning whether large tech companies need to spend trillions of dollars on AI infrastructure if less computing power is needed to train models. Huang said the industry still needs computing power for post-training methods, which allow AI models to draw conclusions and make predictions after training.

As post-training methods grow and diversify, so does the need for computing power offered by Nvidia chips, he continued.

“From an investor’s perspective, there was a mental model that the world was pre-training and then inference. And inference was: You ask the AI a question and it immediately gives you the answer,” Huang said. “I don’t know whose fault it is, but that paradigm is clearly wrong.”

Pre-training is still important, but post-training is “the most important part of intelligence. This is where you learn to solve problems,” Huang said.

DeepSeek’s innovations are energizing the world of AI, he said.

“It’s very exciting. The energy around the world as a result of R1 being open-sourced is incredible,” Huang said.

Huang had not commented publicly on the topic before Thursday’s event, though Nvidia spokespeople had addressed the market reaction in written statements to similar effect.

Huang has spent months pushing back against the growing concern that model scaling is in trouble. Even before DeepSeek burst into public consciousness in January, reports of slowing model improvements at OpenAI had raised doubts about whether the AI boom would deliver on its promise.

In November, Huang emphasized that scaling was alive and well and had simply moved from training to inference. Huang also said on Thursday that post-training methods are “really very intense” and that models will continue to improve with new reasoning methods.

Huang’s DeepSeek comments could serve as a preview of Nvidia’s first earnings call of 2025, scheduled for February 26. DeepSeek has become a common topic of discussion on earnings calls for companies across the technology spectrum, from Airbnb to Palantir.

Nvidia rival AMD was asked about it earlier this month, and its CEO, Lisa Su, said DeepSeek is driving innovations that are “suitable for AI adoption.”