Anna McAdams has always kept a close eye on her 15-year-old daughter Elliston Berry’s online life. So it was difficult to accept what happened that Monday morning after Homecoming 15 months ago in Aledo, Texas.

A classmate took a photo from Elliston’s Instagram, ran it through an artificial intelligence program to make it look like she was removing her dress, and sent the digitally altered image on Snapchat.

“She came into our bedroom crying and said, ‘Mom, you won’t believe what just happened,’” McAdams said.

Last year, there were more than 21,000 deepfake porn videos online, an increase of more than 460% year over year. The manipulated content is proliferating on the internet, and websites are making ominous sales pitches. One service, for example, asks, “Would anyone like to take off their clothes?”

“I took the PSAT test and played a volleyball game,” Elliston said. “And the thing I have to worry about the most is having fake nudes of me going around the school. Those images have been up and circulating on Snapchat for nine months.”

In San Francisco, Chief Deputy City Attorney Yvonne Meré was beginning to hear shocking stories similar to Elliston’s.

“It could very well have been my daughter,” Meré said.

The San Francisco City Attorney’s Office is now suing the owners of 16 websites that create “deepfake nudes,” which use artificial intelligence to turn non-explicit photos of adults and children into pornography.

“This case is not about technology. It’s not about AI. It’s sexual abuse,” Meré said.

The 16 sites received 200 million visits in the first six months of this year alone, according to the complaint.

City Attorney David Chiu said the 16 sites named in the lawsuit are just the beginning.

“We are aware of at least 90 of these websites. So this is a big world and we need to stop them,” Chiu said.

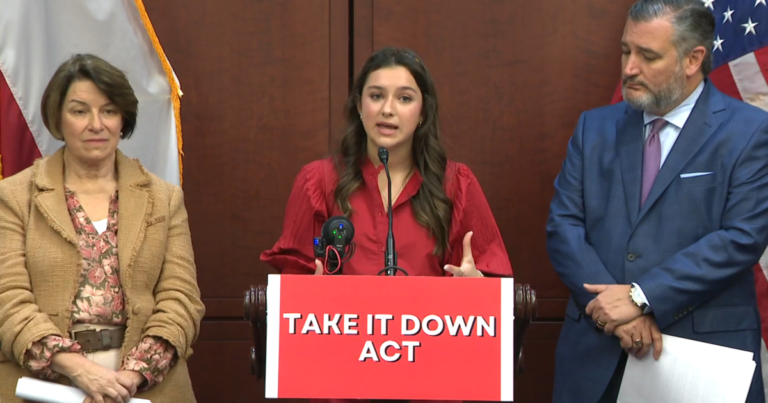

Republican Texas Sen. Ted Cruz is co-sponsoring a different line of attack with Democratic Minnesota Sen. Amy Klobuchar. The Take It Down Act would require social media companies and websites to remove non-consensual pornographic images created by AI.

“This imposes a legal obligation on all technology platforms, and it must be removed immediately,” Cruz said.

The bill passed the Senate this month and is now attached to a larger government funding bill that awaits a vote in the House.

In a statement, a Snap spokesperson told CBS News: “We care deeply about the safety and well-being of our community. Sharing nude images that include minors, whether real or AI-generated, is a clear violation of our community guidelines. We have efficient mechanisms for reporting this type of content, so we are extremely disappointed to hear from families who felt their concerns were ignored. As our latest transparency report shows, we have a zero-tolerance policy towards such content. Please report it and we will take action as soon as it is reported.”

Elliston said she is focused on the present and is urging Congress to pass the bill.

“We can’t go back and undo what he did, but instead we can make sure it doesn’t happen to anyone else,” Elliston said.
