Developing unique and promising research hypotheses is a fundamental skill for scientists. It can also take some time. New PhD candidates are likely to spend the first year of their program deciding exactly what to investigate in their experiments. What if artificial intelligence could help?

MIT researchers have created a way to autonomously generate and evaluate promising research hypotheses across disciplines through human-AI collaboration. In a new paper, they describe how this framework can be used to generate evidence-driven hypotheses tailored to unmet research needs in the field of biologically inspired materials.

The study, published Wednesday in the journal Advanced Materials, was co-authored by Alireza Ghafarollahi, a postdoctoral fellow in the Laboratory for Atomic and Molecular Mechanics (LAMM), and Markus Buehler, the Jerry McAfee Professor in Engineering in MIT’s departments of Civil and Environmental Engineering and of Mechanical Engineering, and director of LAMM.

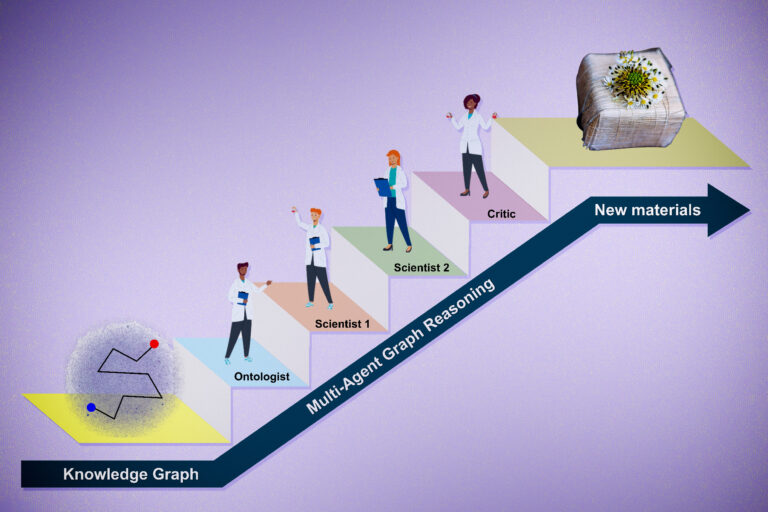

The framework, which the researchers call SciAgents, consists of multiple AI agents, each with specific capabilities and access to data. The agents leverage “graph inference” techniques, in which AI models use a knowledge graph that organizes and defines relationships between diverse scientific concepts. The multi-agent approach mimics the way biological systems organize themselves as groups of basic building blocks. Buehler points out that this “divide and conquer” principle is a prominent paradigm in biology at many levels, from materials to insect swarms to civilizations. In all of these examples, the total intelligence is much greater than the sum of the individuals’ abilities.

“By using multiple AI agents, we are trying to simulate the process by which a community of scientists makes discoveries,” Buehler said. “At MIT, we do that by having a lot of people from different backgrounds working together and bumping into each other in coffee shops and in MIT’s Infinite Corridor. But it’s very serendipitous and slow. Our quest is to simulate the process of discovery by exploring whether AI systems can be creative and make discoveries.”

Automate good ideas

As recent developments have demonstrated, large language models (LLMs) show an impressive ability to answer questions, summarize information, and perform simple tasks. However, they are quite limited when it comes to generating new ideas from scratch. The MIT researchers wanted to design a system that would let AI models carry out a more sophisticated, multi-step process that goes beyond recalling information learned during training to extrapolate and create new knowledge.

The basis of their approach is an ontological knowledge graph that organizes diverse scientific concepts and creates connections between them. To create the graph, the researchers feed a set of scientific papers into a generative AI model. In previous research, Buehler used a field of mathematics known as category theory to help the AI model develop abstractions of scientific concepts as graphs. These graphs are rooted in defining relationships between components, in a way that can be analyzed by other models through a process called graph inference. This focuses AI models on developing more principled ways to understand concepts. It also allows them to generalize better across domains.

“This is critical for creating science-focused AI models, as scientific theories are typically rooted in generalizable principles rather than mere knowledge recall,” Buehler says. “By focusing AI models on ‘thinking’ in this way, we can move beyond traditional methods and explore more creative uses of AI.”

In the latest paper, the researchers used about 1,000 scientific studies on biological materials, but Buehler says the knowledge graph could be generated from far more or fewer research papers in any field.

After establishing the graph, the researchers developed an AI system for scientific discovery with multiple models specialized to play specific roles within the system. Most of the components are built from OpenAI’s ChatGPT-4 series models and leverage a technique known as in-context learning. In this technique, the prompts provide contextual information about the model’s role in the system while allowing it to learn from the data provided.

Individual agents within the framework interact to jointly solve complex problems that none of them could solve alone. The first task given to them is to generate a research hypothesis. The LLM interactions begin after a subgraph has been defined from the knowledge graph. This is done either randomly or by manually entering keywords discussed in the papers.
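The subgraph step can be pictured with a small sketch. The Python below is an illustration under stated assumptions, not the researchers’ implementation: the triples are invented toy data, and the function names (`build_adjacency`, `find_path`, `define_subgraph`) and the choice of a breadth-first search are hypothetical. It shows only the general idea of selecting two concepts, either supplied by hand or drawn at random, and extracting a path of concepts and relations that connects them.

```python
import random
from collections import deque

# Toy knowledge graph as (source concept, relation, target concept) triples.
# These triples are illustrative only; they are not data from the paper.
TRIPLES = [
    ("silk", "has property", "high tensile strength"),
    ("silk", "processed by", "spinning"),
    ("spinning", "can be", "energy intensive"),
    ("high tensile strength", "desired in", "biomaterials"),
    ("biomaterials", "colored with", "pigments"),
]


def build_adjacency(triples):
    """Index triples as an undirected map: node -> [(relation, neighbor)]."""
    adjacency = {}
    for source, relation, target in triples:
        adjacency.setdefault(source, []).append((relation, target))
        adjacency.setdefault(target, []).append((relation, source))
    return adjacency


def find_path(adjacency, start, goal):
    """Breadth-first search (an assumed strategy).

    Returns a list alternating concepts and relations, or None if the
    two concepts are not connected.
    """
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]  # the last element of a path is always a concept
        if node == goal:
            return path
        for relation, neighbor in adjacency.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [relation, neighbor])
    return None


def define_subgraph(adjacency, keywords=None, rng=random):
    """Use the given keyword pair, or pick two distinct concepts at random."""
    if keywords is None:
        keywords = rng.sample(sorted(adjacency), 2)
    start, goal = keywords
    return find_path(adjacency, start, goal)


adjacency = build_adjacency(TRIPLES)
path = define_subgraph(adjacency, keywords=("silk", "energy intensive"))
print(" -> ".join(path))
```

Calling `define_subgraph(adjacency)` with no keywords corresponds to the random mode; the resulting path is the kind of structured context that could then be handed to the language models.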

In this framework, a language model the researchers dubbed the “Ontologist” is tasked with defining scientific terms in the papers and examining the connections between them, fleshing out the knowledge graph. A model named “Scientist 1” then drafts a research proposal based on factors such as novelty and the ability to uncover unexpected properties. The proposal includes a discussion of potential findings, the impact of the research, and a guess at the underlying mechanisms of action. The “Scientist 2” model expands on this idea, proposing specific experimental and simulation approaches and making other improvements. Finally, the “Critic” model highlights the proposal’s strengths and weaknesses and suggests further improvements.
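The sequence of roles described above can be sketched as a simple pipeline. This is a minimal illustration under stated assumptions, not the SciAgents code: the role instructions are paraphrased from this article rather than taken from the researchers’ prompts, the `Agent` class and `run_pipeline` function are hypothetical names, and `echo_llm` is a stand-in that replaces a real model call so the example runs offline. Each agent receives its role in the prompt, in the spirit of in-context learning, along with everything the earlier agents produced.

```python
from dataclasses import dataclass
from typing import Callable

# Role instructions paraphrased from the article. The exact prompts used by
# the researchers are not given there, so these are placeholders.
ROLES = {
    "Ontologist": "Define each term in the path and explain the relationships between them.",
    "Scientist 1": "Draft a novel research proposal with expected findings, impact, and mechanisms.",
    "Scientist 2": "Expand the proposal with specific experimental and simulation approaches.",
    "Critic": "Identify strengths and weaknesses and suggest improvements.",
}


@dataclass
class Agent:
    name: str
    instructions: str
    llm: Callable[[str], str]  # any function mapping a prompt to a completion

    def run(self, context: str) -> str:
        # The role description is supplied in the prompt itself.
        prompt = f"You are the {self.name}. {self.instructions}\n\n{context}"
        return self.llm(prompt)


def run_pipeline(path: str, llm: Callable[[str], str]) -> dict:
    """Run the agents in order; each sees the path plus all earlier outputs."""
    outputs = {}
    context = f"Knowledge graph path: {path}"
    for name, instructions in ROLES.items():
        outputs[name] = Agent(name, instructions, llm).run(context)
        context += f"\n\n[{name}]\n{outputs[name]}"
    return outputs


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    first_line = prompt.splitlines()[0]
    return f"(response to: {first_line})"


results = run_pipeline("silk -> processed by -> spinning", echo_llm)
for name, text in results.items():
    print(f"{name}: {text}")
```

Swapping `echo_llm` for a function that calls an actual model would leave the rest of the sketch unchanged, which is one way to picture how the underlying models could be replaced without redesigning the system.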

“It’s important to build a team of experts who don’t all think the same way,” Buehler says. “They have to think differently and have different abilities. The Critic agent is intentionally programmed to critique the other agents, so you don’t have everyone agreeing and saying it’s a great idea. You have an agent that says, ‘Here’s a weakness. Could you explain it in more detail?’ That makes the output very different from that of a single model.”

Other agents in the system can search existing literature, which allows the system to not only assess feasibility, but also create and evaluate the novelty of each idea.

Strengthening the system

To test their approach, Buehler and Ghafarollahi created a knowledge graph based on the words “silk” and “energy-intensive.” Using this framework, the ‘Scientist 1’ model proposed integrating silk and dandelion-based dyes to create biomaterials with enhanced optical and mechanical properties. The model predicted that the material would be significantly stronger than traditional silk materials and require less energy to process.

Scientist 2 then made suggestions, such as using specific molecular dynamics simulation tools to investigate how the proposed materials would interact, and added that a good application for the material would be a bioinspired adhesive. The Critic model then highlighted several strengths of the proposed material as well as areas for improvement, such as scalability, long-term stability, and the environmental impact of solvent use. To address these concerns, the Critic suggested conducting pilot studies for process validation and performing rigorous analyses of material durability.

The researchers also performed other experiments using randomly selected keywords. These generated a variety of original hypotheses about more efficient biomimetic microfluidic chips, enhancing the mechanical properties of collagen-based scaffolds, and the interaction between graphene and amyloid fibrils to create bioelectronic devices.

“The system was able to come up with these new and rigorous ideas based on the paths from the knowledge graph,” Ghafarollahi said. “From a novelty and applicability perspective, the materials seemed robust and novel. In future work, we will generate thousands, or tens of thousands, of new research ideas, categorize them, and try to better understand how these materials are generated and how they could be further improved.”

In the future, the researchers hope to incorporate new tools into the framework for retrieving information and running simulations. They can also easily replace the foundation models in the framework with more advanced models, allowing the system to adapt to the latest innovations in AI.

“Due to the way these agents interact, improvements in one model, even small ones, can have a large impact on the behavior and output of the entire system,” Buehler says.

Since publishing a preprint with open-source details of their approach, the researchers have been contacted by hundreds of people interested in using the framework in a variety of scientific fields, as well as in areas such as finance and cybersecurity.

“There are a lot of things you can do without going to the lab,” Buehler says. “Essentially, you want to go to the lab at the very end of the process. The lab is expensive and takes a long time, so you want a system that can dig deep into the best ideas, formulate the best hypotheses, and accurately predict emergent behaviors. Our vision is to make this easy to use, so you can use an app to bring in other ideas or drag in datasets to really challenge the model to make new discoveries.”