
Come back tomorrow for Day 6

Today’s announcement was short and sweet but pretty big for OpenAI as its integration with Siri and Apple goes live.

Will we see Sam Altman tomorrow? Probably not.

We don’t know what we will see tomorrow, but we’ve yet to have an update to Advanced Voice, and it is likely to get a new vision capability.

There are seven more days of announcements and Tom’s Guide will be covering every one of them.

If you’re interested, check back with us for coverage of the announcements and analysis of what these new OpenAI tools and products mean for you.

iOS 18.2 is live

Apple Intelligence is getting a major boost with today’s release of iOS 18.2.

Beyond ChatGPT, there’s Genmoji, Image Playground and Visual Intelligence.

For more information, check out our post about everything coming to your iPhone 16 or iPhone 15 Pro model.

Additionally, we have a guide on how to use ChatGPT in iOS 18.2 and how to access it now.

Everything ChatGPT can do with Apple Intelligence

Day 5 of OpenAI’s 12 Days of announcements was timed to pair up with the release of iOS 18.2, which brings a number of Apple Intelligence features to the iPhone 16 lineup and iPhone 15 Pro models.

With the integration of ChatGPT, you can now use the OpenAI model on both Macs and iPhones. For example, you can ask Siri about a document open on your Mac and use ChatGPT to answer questions about it.

This also brings integration with additional ChatGPT tools, such as the new o1 reasoning model, Canvas and DALL-E.

Today, Altman and crew showed off the integration by using the o1 model to write code and then visualize the results.

Sam Altman: “We are thrilled about this release. We hope you love it and are grateful to our friends at Apple.”

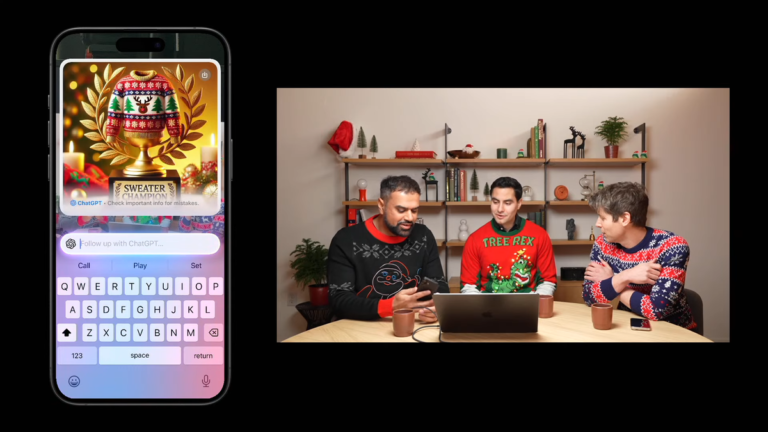

Additionally, the livestream showed off Visual Intelligence integration with Siri, with ChatGPT in the prompt bar. They had Siri and ChatGPT judge a photo for the best Christmas sweater, with Altman winning (rigged?).

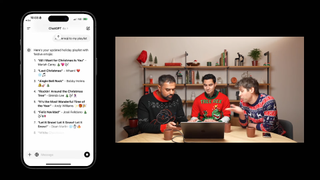

You can ask Siri directly to use ChatGPT for things like organizing tasks or creating playlists.

Siri can also open the ChatGPT app for Mac and iPhone, where you can go more in depth with your work, especially with the new Canvas feature, which lets you add emojis or edit content made with ChatGPT.

It’s a lot, and it appears to make Siri better, but we’ll know more as we dive deeper into iOS 18.2 and put this integration to the test.

ChatGPT makes Apple Intelligence better

Apple Intelligence is better than many people give it credit for, because it runs an advanced language model on the device itself. However, there are limitations, and the deep integration between ChatGPT and Siri fills in the gaps.

During the livestream, OpenAI demonstrated the integration not just on the phone but on the Mac, where you can open Siri, ask it to use ChatGPT to review an open document, and even ask questions about that document.

You can get information within the Siri interface or send it to ChatGPT for more detail, or to use other OpenAI tools like the o1 reasoning model, Canvas or DALL-E.

In one example, they had it review the o1 model card, asked it why the model is good for coding, and then sent the conversation to the ChatGPT desktop app to write code that visualizes those coding capabilities.


Using Visual Intelligence with ChatGPT

One thing that I hadn’t tried before was using Visual Intelligence with Siri and ChatGPT. The demo shows the Siri interface but with a ChatGPT icon in the prompt bar.

During the demo, they took a photo of the three hosts and used Visual Intelligence to judge their Christmas jumpers. Sam Altman won.

Easily switch from Siri to ChatGPT

In the demo, they showed how Siri can hand requests to ChatGPT automatically, for things like organizing a Christmas project list or creating a music playlist.

Once the preview opens in Siri, you can click to open the full version in the ChatGPT app, where you can customize the playlist and even add emojis in Canvas (which was announced yesterday).

Sam Altman did hint at a future release, though: while they waited for it to create album cover art, he said “faster image gen” is coming.

All about ChatGPT in Apple Intelligence

Christmas sweaters all around, but the announcement isn’t really anything we don’t already know from Apple.

Today seems to be all about making ChatGPT easier to use, with the focus on how ChatGPT integrates with Apple Intelligence and Siri.

We have a lot more detail on this in our iOS 18.2 live blog. I’ve been using this in the developer beta for a while, and Siri barely does anything for itself anymore; it just sends everything to ChatGPT.

Day 5 is LIVE


OpenAI’s Day 5 live stream is … live, and according to the post on X, it is about Apple Intelligence. We have no clues beyond “ChatGPT x Apple Intelligence.” We’ll know soon.

We do know Sam Altman is back which usually means a bigger announcement. You can follow our coverage of Apple Intelligence in the iOS 18.2 live blog.

Advanced Voice Mode with Vision could be coming today

Rumors are swirling that OpenAI might soon release an Advanced Voice Mode with Vision, which could change how we interact with AI even further. If true, this upgrade could combine two of ChatGPT’s most powerful features, voice interaction and visual understanding, into one seamless experience.

Imagine speaking to ChatGPT not just to ask questions or give commands but to interact with it about the physical or virtual world around you. Advanced Voice Mode with Vision could enable real-time conversations where you show the AI an object or scene, and it provides detailed insights.

For example, you might hold up a book and ask ChatGPT to identify it, summarize its plot, or suggest similar reads. Cooking could become an entirely new experience as you show your pantry to the AI and ask what recipes you can make. Beyond this, professionals could use the feature to analyze diagrams, troubleshoot physical devices, or even assist with creative tasks like art critique.

Integrating voice and vision would make AI more intuitive and human-like, allowing for richer, more dynamic interactions. While current voice and vision features are already impressive, their combination could enhance accessibility, efficiency, and creativity in everyday applications.

If OpenAI does release this feature, it could set a new benchmark for multimodal AI, making interactions as natural and immersive as having a conversation with a tech-savvy friend.

Potential for an OpenAI privacy update announcement

Rumors about what will be announced on Day 5 are swirling, so it’s anyone’s guess. I have a few thoughts: while it may not be as exciting as some of the news from the past few days, we might see an update on OpenAI’s policy and safety initiatives.

OpenAI is committed to safety and privacy, so it would not be a surprise if Sam and his team showcased something in that area during their briefings. Given the growing concerns about AI safety and ethics, Altman might introduce new policies or frameworks to address these issues, ensuring responsible AI development.

OpenAI has undergone internal restructuring, including reassigning key personnel. Altman could provide updates on leadership roles or strategic shifts within the company.

Altman has recently engaged in political and public service roles, such as joining the transition team of San Francisco’s new mayor. He might discuss initiatives at the intersection of AI and public policy.

Without specific details from Altman’s recent communications, these are speculative scenarios based on OpenAI’s trajectory and Altman’s interests. For precise information, it’s advisable to follow official channels for the forthcoming announcement.

Sora still having sign-up problems

OpenAI released Sora on Day 3 as a standalone product. It is available at sora.com and you can sign in with your ChatGPT account, but there is a separate ‘onboarding’ process that requires you to give your date of birth and agree to the terms of use.

The process is fairly simple, and if you’ve got a ChatGPT Plus account, you’ll get 50 generations a month with Sora as part of the plan. However, demand is so high that OpenAI has been forced to close the sign-up process for now.

I’m told they are working to add capacity and that sign-ups will reopen sporadically until then. This will be especially annoying for anyone paying $200 a month for ChatGPT Pro purely to get 500 videos per month, only to find they can’t get Sora yet.

As I’m in the UK, where it isn’t going to be available until next year, my sympathy is limited, but I do understand the frustration. If you can’t get in, I’ve compiled a list of Sora alternatives worth trying.

For those who do have access, opinion is split: it is either the best video generator ever made, or occasionally perfect but mostly similar to what is already available.

Canvas is free for all users regardless of subscription tier

Canvas takes AI editing to new heights. Highlight any text, and you can ask ChatGPT to refine it, change the tone, or expand on an idea. I tested this with a short bio, asking Canvas to edit it from the perspective of an English professor. The result? Thoughtful feedback and suggestions that felt like I was working with a human editor. This perspective-shifting feature is invaluable for tailoring your writing to different audiences.

This raises questions about other writing platforms such as Sudowrite, which I have used and enjoyed. Now that Canvas offers feedback from various perspectives, from English professors to a best friend, writers can get the answers they need without leaving the platform.

You can adjust the text’s tone, audience, and length—all from one place. The “final polish” button ensures your writing is clear, concise, and professional. Unlike other editors that impose corrections, Canvas gives you control, letting you decide which changes to implement.

Canvas combines the best of Google Docs, Grammarly, and coding environments like VSCode but integrates AI more deeply than any of them. Instead of being an add-on, AI is the foundation. It’s not just about viewing AI outputs—it’s about collaborating with AI to refine your work in real time.

Unlike competitors such as Anthropic’s Claude Artifacts or Google’s Gemini tools, Canvas provides real-time editing within the workspace, making it feel like a true partner in the creative process.

OpenAI Canvas is like having an editor with me as I write

After yesterday’s announcement and release of OpenAI Canvas, it’s safe to say that users are excited the writing and editing tool is finally out of beta. I have regularly used Google Docs for my writing and to keep track of edits, but after going hands-on with Canvas, I’m finally moving on.

OpenAI’s Canvas is here, and it’s redefining what it means to collaborate with AI. Canvas transforms ChatGPT from a helpful chatbot into a powerful writing and coding editor. With its AI-first approach, Canvas offers tools for drafting, refining, and even debugging in real time, making it a game-changer for productivity.

Google Docs is great for collaborating with others, but as a writer who usually works alone before sending copy to an editor, I appreciate that I can collaborate with AI in Canvas and make real-time edits based on what the chatbot suggests. From testing Canvas yesterday, it’s clear that the chatbot does an excellent job of offering edits that make my work clearer and more concise.

Canvas allows users to highlight text and ask ChatGPT for specific edits, such as rephrasing or expanding on ideas. Need to refine your tone for a professional audience or make your writing more concise? Canvas handles it seamlessly. I tested this by editing a bio blurb, and Canvas provided a polished, in-depth version perfect for LinkedIn or my personal website. This feature is especially handy for tailoring content to specific word counts or styles required by different platforms.

Unique tools like the “pop-out writing button” make Canvas even more versatile. Adjust your target audience, tweak the text length, or even add emojis for emphasis. The “final polish” button ensures your work is clear, grammatically sound, and professional. Unlike Grammarly, which often auto-corrects in the background, Canvas puts you in control, making it feel more collaborative.

Canvas is a reimagining of how AI integrates with productivity. By making Canvas free and available to everyone, OpenAI has taken a significant step toward making AI-powered productivity tools accessible to all. For writers, developers, and creators of all kinds, Canvas represents the future of collaborative AI. Try it, and you might never look at Google Docs the same way again.

Tips for getting more out of o1

It might feel like the announcement of the full o1 model was months ago, but it was less than a week ago. On its own, this would have been a major announcement from OpenAI, but as part of its 12 Days extravaganza, it is a blip in a busy week.

However, o1 is an incredibly important model, bringing reasoning capabilities to ChatGPT that allow you to work through problems, code and plan in a way not possible with GPT-4o.

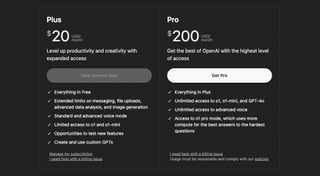

The bad news is that unless you are prepared to pay $200 a month for the Pro plan, those of us on the Plus plan will have to put up with a limit of 50 messages a week.

We’ve pulled together some tips for getting the most out of o1 including picking your cases carefully and using it to brainstorm ideas.

Could we get a new GPT model today?

ChatGPT Team plan will offer “Limited preview of GPT-4.5” (not visible, yet) pic.twitter.com/zIVS4O7o5o (December 5, 2024)

Multiple people inside OpenAI have suggested that we might not get another GPT model, or at least it might not be called GPT-. So, that would suggest no GPT-4.5 or even GPT-5 is on the horizon — but that hasn’t stopped the rumor mill.

So, where do the rumors come from? Well, one user found GPT-4.5 buried in the ChatGPT source code, and a UI leak saw a reference to ChatGPT ε (epsilon), which is the fifth letter of the Greek alphabet. OpenAI has not been shy about dropping hints like this in the past.

Tibor Blaho, who has shared accurate leaks in the past, discovered a hidden line in the “Upgrade your plan” view. It offered a “limited preview of GPT-4.5” as a Team plan perk. This has since been removed.

Whatever happens, we will find out for sure at 1pm ET.

No, you don’t need to pay $200 a month for ChatGPT

One of the most surprising announcements from the 12 Days of OpenAI event was the $200 per month ChatGPT Pro plan. At 10 times the price of ChatGPT Plus, it was clearly not meant for us mere mortal AI users — but why not?

With the Pro subscription, you get unlimited access to Advanced Voice, the full o1 model, unlimited GPT-4o access and the ability to use the new o1 Pro model.

You also get more compute power behind your queries, but this and o1 Pro are only useful for incredibly complex tasks in the research space.

The biggest update came on Day 3 of the 12 Days of OpenAI, when they announced that ChatGPT Pro users would also get additional Sora usage. This also includes the ability to generate videos of people, something not available with ChatGPT Plus. However, dedicated Sora plans are coming next year.

My recommendation: unless you’re a research scientist, a professional software developer working on particularly complex code, or someone with more money than you need who wants to try it for the sake of it, stick with the $20 plan.

Hands on with ChatGPT Canvas

OpenAI just officially launched Canvas, and I couldn’t wait to try it. The latest from OpenAI brings a new level of interactivity and collaboration to ChatGPT. No longer in beta, Canvas is now available to all users, regardless of tier — for free — providing a powerful AI-first writing and coding editor built directly into ChatGPT.

The first thing I noticed was that Canvas transformed ChatGPT from a simple chat assistant into a collaborative partner for writing and coding projects. By opening a dedicated workspace, I was able to start a draft on the page or upload text.

From there, I could edit and refine text or code in real time. Whether you’re brainstorming ideas, editing a report or debugging complex code, Canvas offers a seamless, AI-driven editing experience.

Users can either start their project directly in Canvas or transition from a chat conversation by typing “use Canvas.” Since the beta preview, Canvas has added more features, offering flexibility that bridges the gap between conversational AI and traditional document editing tools, potentially offering the best of both worlds.

Whether you’re working on a personal essay, a detailed report or a coding project, Canvas turns AI into an active partner in your workflow. It’s elevated the way I outline, brainstorm and edit. Because Canvas integrates so seamlessly into ChatGPT, it is accessible and easy to use, empowering everyone from writers to developers. OpenAI making the feature free to everyone is just the icing on the AI cake.

You can read my full review of ChatGPT Canvas as we look ahead to what might come today. We may even get other ChatGPT upgrades (better image generation would be nice).

What can we expect on Day 5?

OpenAI has had several big announcements in the first four days of its 12 Days of AI extravaganza, including the o1 reasoning model, the $200-a-month ChatGPT Pro plan, AI model fine-tuning, Sora and, most recently, ChatGPT Canvas.

Through Dec. 20 there will be more, though some announcements will be bigger than others. If you consider the ChatGPT Canvas update ‘relatively small’, then we could see something big tonight. Rumors hint at GPT-4.5, but Altman has previously said there will be no new GPT models.

We’ve yet to have an update to Advanced Voice, and it is likely to get a new vision capability before the end of this two-week frenzy of AI news.