Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible and showcases new hardware, software, tools, and accelerations for GeForce RTX PC and NVIDIA RTX workstation users.

As generative AI evolves and accelerates industries, a community of AI enthusiasts is experimenting with ways to integrate the powerful technology into common productivity workflows.

Applications that support community plugins let users explore how large language models (LLMs) can enhance a variety of workflows. With a local inference server powered by the NVIDIA RTX-accelerated llama.cpp software library, users on RTX AI PCs can easily integrate local LLMs.

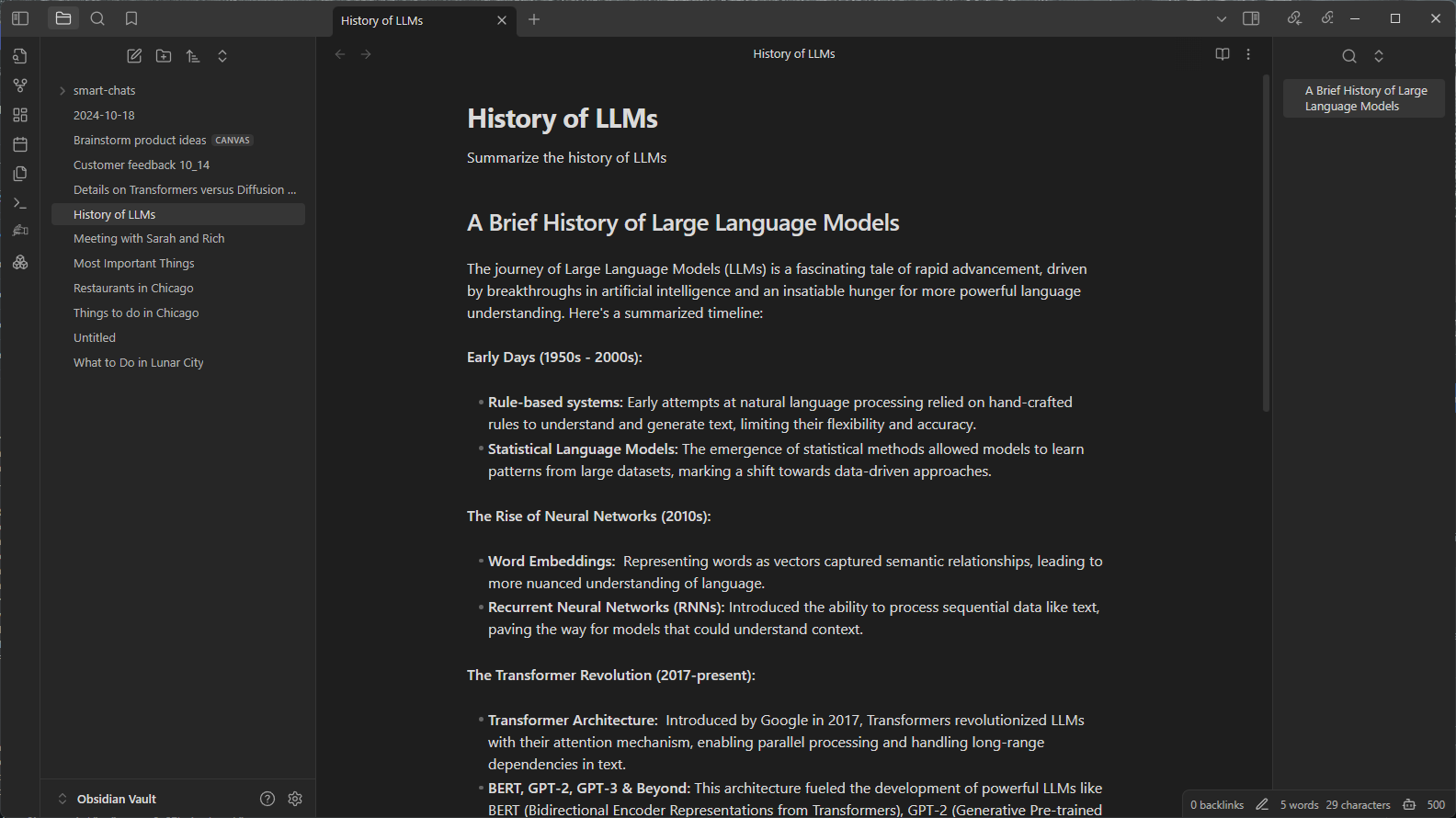

Last time, we talked about how users can leverage Leo AI in the Brave web browser to optimize their web browsing experience. Today we’ll be looking at Obsidian, a popular writing and note-taking application based on the Markdown markup language. It is useful for keeping complex, linked records across multiple projects. The app supports community-developed plugins that provide additional functionality, including plugins that let users connect Obsidian to a local inference server such as Ollama or LM Studio.

To connect Obsidian to LM Studio, enable LM Studio’s local server functionality: click the Developer icon in the left panel, load a downloaded model, enable the CORS toggle, and click Start. Make a note of the chat completion URL (by default “http://localhost:1234/v1/chat/completions”) from the “Developer” log console, as the plugins need this information to connect.
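Before configuring a plugin, it can help to confirm that the server answers at that URL. The following is a minimal sketch, assuming the server accepts OpenAI-format chat completion requests (which the “Custom Local (OpenAI Format)” setting suggests). The function names, the temperature value, and the model identifier are illustrative assumptions; use the identifier that LM Studio shows for the loaded model.

```python
import json
import urllib.request

# Default chat completion URL reported by LM Studio, as quoted in the article.
CHAT_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_payload(prompt, model="gemma-2-27b-instruct", temperature=0.7):
    """Build an OpenAI-format chat completion request body.

    The model identifier is an example; replace it with the name that
    LM Studio displays for the model you loaded.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def send_chat_request(payload, url=CHAT_URL, timeout=60):
    """POST the payload to the local server and return the reply text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-format responses carry the text under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, calling `send_chat_request(build_chat_payload("Suggest three note titles"))` should return the model’s reply as a string. A connection error usually means the server has not been started or is listening on a different port.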

Next, start Obsidian and open the Settings panel. Click Community Plugins, then click Browse. There are several community plugins related to LLMs, but two popular options are Text Generator and Smart Connections.

Text Generator helps generate content in an Obsidian vault, such as notes and summaries on a research topic. Smart Connections helps users ask questions about the contents of an Obsidian vault, such as the answer to an obscure trivia question saved years ago.

Each plugin has its own way of entering the LM Studio server URL.

For Text Generator, open the settings, select “Custom” under “Provider Profile”, and paste the entire URL into the “Endpoint” field. For Smart Connections, configure the settings after starting the plugin. In the settings panel on the right side of the interface, select Custom Local (OpenAI Format) as the model platform. Then enter the URL and the model name (e.g. “gemma-2-27b-instruct”) in their respective fields, exactly as they appear in LM Studio.
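If the exact model name is unclear, one way to look it up is to ask the server directly. This sketch assumes the local server also exposes an OpenAI-style model listing at `/v1/models` that returns a `data` array of entries with an `id` field; that endpoint and response shape are assumptions not stated in this article, and the helper names are hypothetical.

```python
import json
import urllib.request
from urllib.parse import urlsplit, urlunsplit


def models_url_from_chat_url(chat_url):
    """Swap the chat completions path for the assumed model listing path."""
    parts = urlsplit(chat_url)
    return urlunsplit((parts.scheme, parts.netloc, "/v1/models", "", ""))


def parse_model_ids(listing):
    """Extract model identifiers from an OpenAI-format model listing."""
    return [entry["id"] for entry in listing.get("data", [])]


def list_local_models(chat_url, timeout=10):
    """Ask the running local server which model identifiers it exposes."""
    url = models_url_from_chat_url(chat_url)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_model_ids(json.load(response))
```

Calling `list_local_models("http://localhost:1234/v1/chat/completions")` while the server is running should return a list of identifiers, any of which can be pasted into the plugin’s model name field.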

Once the fields are filled in, the plugins will work. Users who are curious about what’s happening on the local server side can also see logged activity in the LM Studio user interface.

Transform your workflow with Obsidian AI plugins

Both the Text Generator and Smart Connections plugins use generative AI in compelling ways.

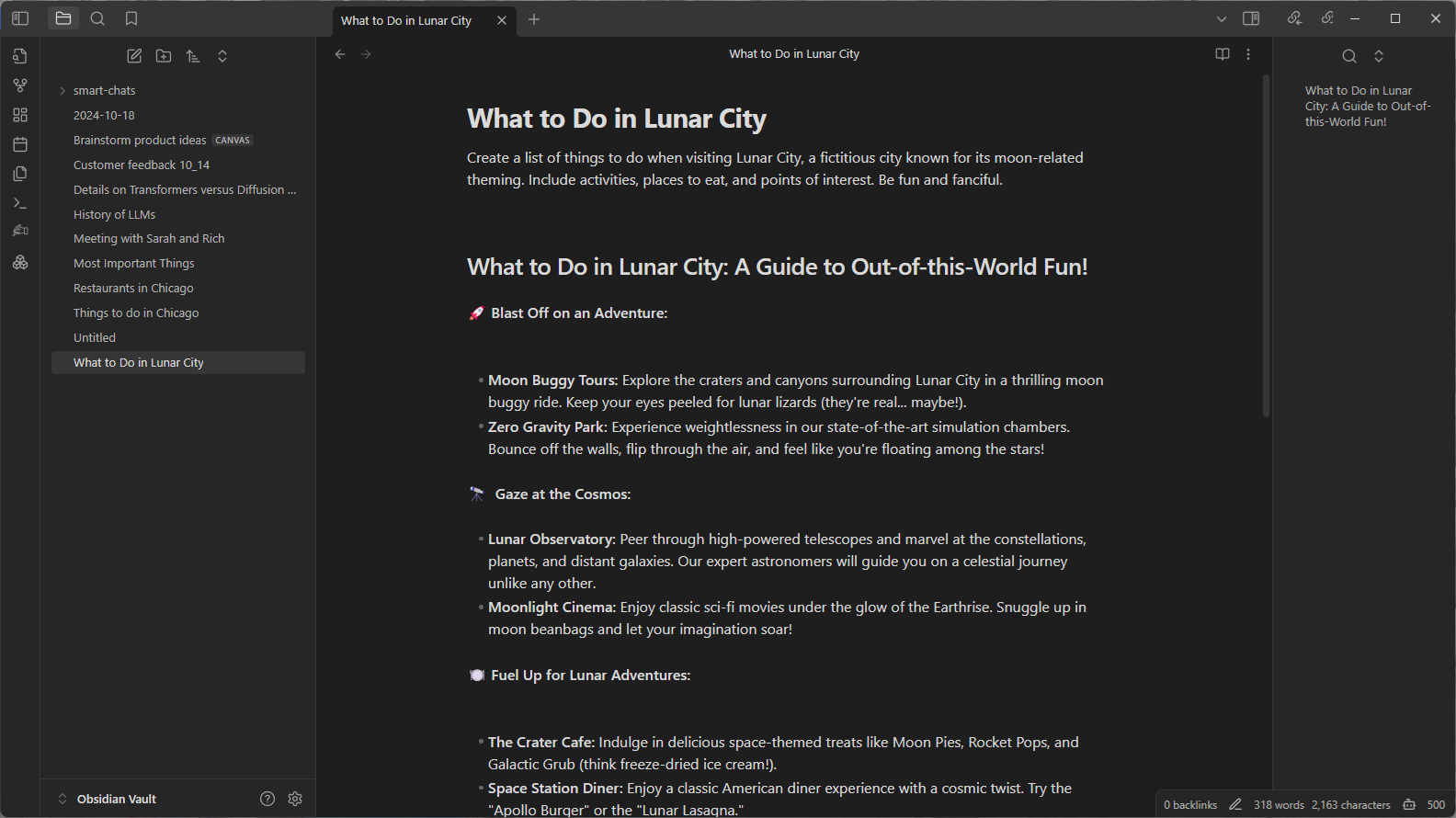

For example, let’s say a user is planning a vacation to a fictional destination called Lunar City and wants to brainstorm ideas about what to do there. The user starts a new note titled “What to do in Lunar City.” Since Lunar City is not a real place, the query sent to the LLM must include some additional instructions to guide the response. Clicking the Text Generator plugin icon prompts the model to generate a list of activities to do during the trip.
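To illustrate what “additional instructions” can look like in an OpenAI-format request, the sketch below puts the guidance in a system message and the note title in the user message. It is only an illustration: the wording of the guidance and the `build_messages` helper are assumptions, and this is not a description of how Text Generator composes its prompts internally.

```python
def build_messages(note_title, guidance):
    """Combine guiding instructions with the note title as chat messages."""
    return [
        # The system message carries the extra context the model needs.
        {"role": "system", "content": guidance},
        # The user message carries the actual request.
        {"role": "user", "content": f"Write a list of ideas for a note titled: {note_title}"},
    ]


# Hypothetical guidance text for the fictional destination.
GUIDANCE = (
    "Lunar City is a fictional city on the Moon. "
    "Invent plausible activities for a tourist and present them as a list."
)

messages = build_messages("What to do in Lunar City", GUIDANCE)
```

The resulting `messages` list would go in the `messages` field of a chat completion request body.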

Through the Text Generator plugin, Obsidian asks LM Studio to generate a response, and LM Studio in turn runs the Gemma 2 27B model. With RTX GPU acceleration on the user’s computer, the model can quickly generate a list of things to do.

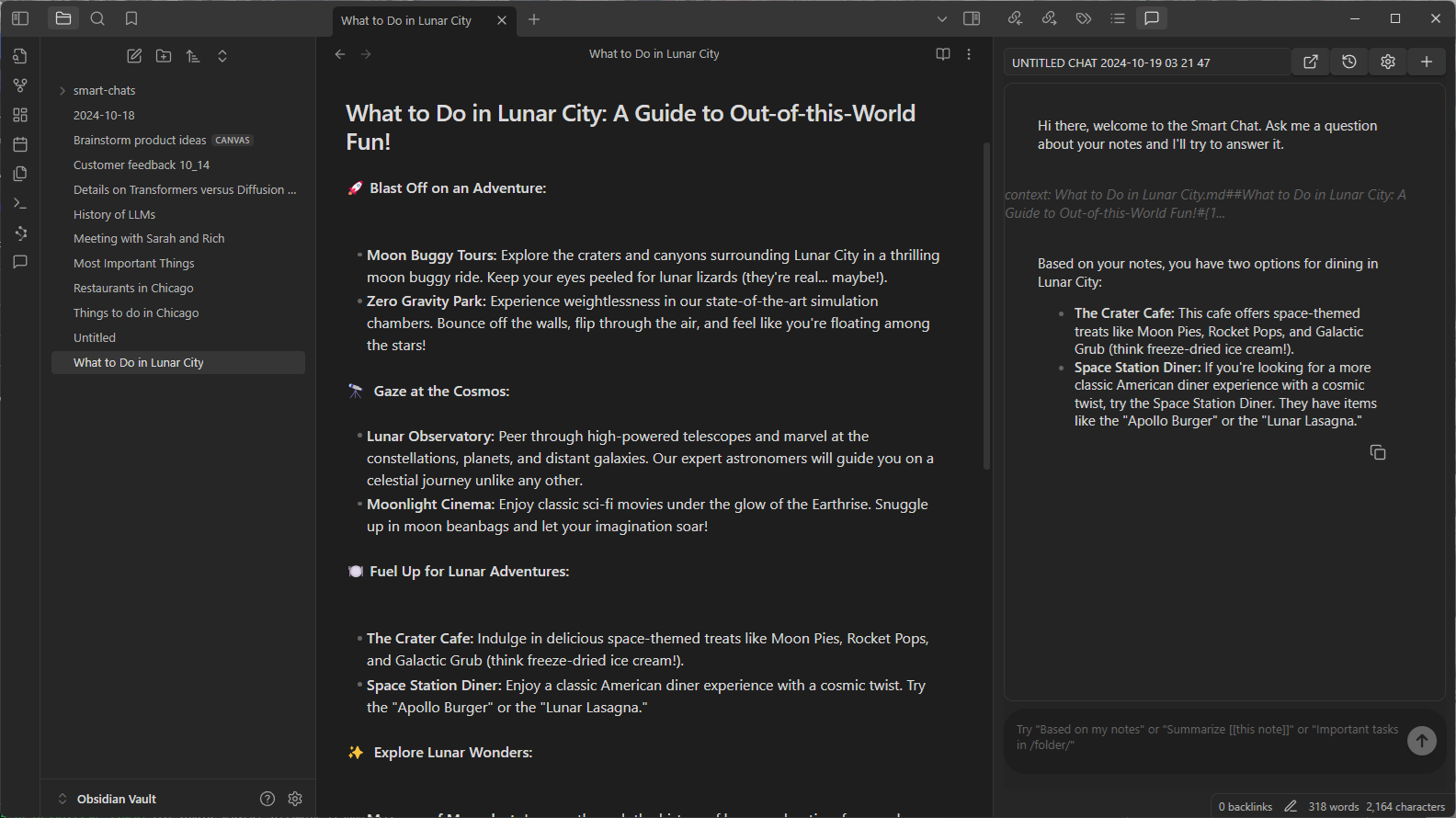

Or suppose that, many years later, the user’s friend is going to Lunar City and wants to know where to eat. The user may not remember the names of the places where they ate, but they can check the notes in their vault (Obsidian’s term for a collection of notes) in case they wrote something down.

Rather than manually reviewing all of their notes, users can use the Smart Connections plugin to ask questions about their vault of notes and other content. The plugin uses the same LM Studio server to respond to requests and supplies relevant information found in the user’s notes to assist in the process. The plugin does this using a technique called retrieval-augmented generation.
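The retrieve-then-answer pattern described above, commonly called retrieval-augmented generation, can be sketched in a few lines. This is a toy illustration under stated assumptions, not the plugin’s implementation: it ranks notes by simple word overlap as a stand-in for whatever similarity search the plugin actually uses, and the function names and prompt wording are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, notes, top_k=2):
    """Return titles of the notes sharing the most words with the question.

    `notes` maps note titles to note bodies. Word overlap is a simple
    stand-in for a real similarity search.
    """
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(title + " " + body)), title)
        for title, body in notes.items()
    ]
    # Keep only notes with some overlap; best score first, ties by title.
    ranked = sorted(
        (item for item in scored if item[0] > 0),
        key=lambda item: (-item[0], item[1]),
    )
    return [title for _, title in ranked[:top_k]]


def build_augmented_prompt(question, notes, top_k=2):
    """Prepend the retrieved notes to the question as context for the LLM."""
    titles = retrieve(question, notes, top_k)
    context = "\n\n".join(f"[{title}]\n{notes[title]}" for title in titles)
    return (
        "Answer the question using only the notes below.\n\n"
        f"{context}\n\nQuestion: {question}"
    )
```

The string returned by `build_augmented_prompt` would then be sent to the local server as the user message, so the model answers from the retrieved notes rather than from its training data alone.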

These are just fun examples, but after using these features for a while, users can begin to see real benefits and improvements in their daily productivity. These two Obsidian plugins are just two of the ways community developers and AI enthusiasts are leveraging AI to enhance their PC experience.

NVIDIA GeForce RTX technology for Windows PCs enables developers to run thousands of open source models for integration into Windows apps.

Learn more about the power of LLMs, Text Generator, and Smart Connections by integrating Obsidian into your workflow, and try out the accelerated experiences available on RTX AI PCs.

Generative AI is transforming gaming, video conferencing, and all kinds of interactive experiences. Subscribe to the AI Decoded newsletter to know what’s new and what’s next.